I’ve argued there isn’t much difference, even that much of the detail is invented, a kind of advanced sharpening. Let’s move beyond theory.

Let’s have a look at the difference between DeepPrime and DeepPrime XD. First the settings:

Here’s what the image itself looks like (this is the Neural Engine DeepPrime XD version):

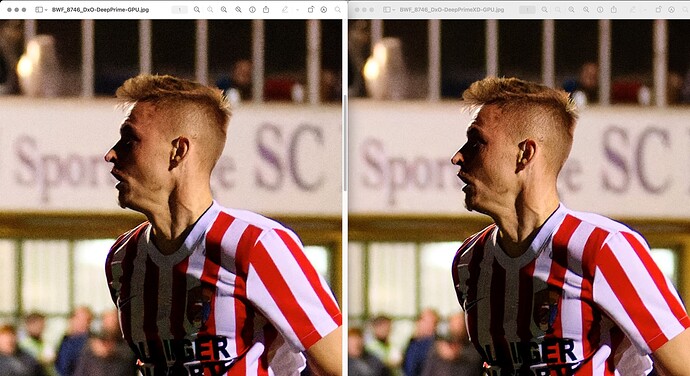

Now the screenshot of the difference at 100% with DeepPrime set at 20.

There is more perceptible detail in the DeepPrime XD image. In the DeepPrime XD image, the player looks like he has facial scars running along his cheekbones. This shot was taken in Austria, not in Mexico or El Salvador, and the player does not have facial scars. At anything less than 100% the difference is imperceptible. That said, this experiment has convinced me to try DeepPrime XD further with my own photography. DeepPrime XD does not look substantially worse and the additional sharpening adds some welcome grit for sports photography. I’ll see about artefacts.

That said, I cannot imagine that the extra sharpening/invented texture would be make or break for a photograph. It’s a how-many-angels-can-dance-on-the-head-of-a-pin kind of difference, one that only other photographers would care about.

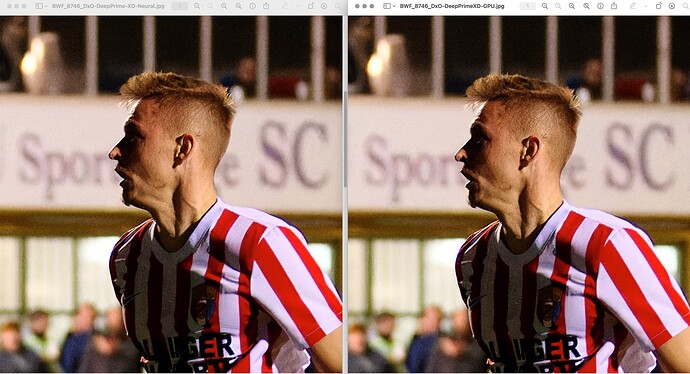

Just for fun here’s a comparison between DeepPrime XD with Neural Engine processing and GPU Processing.

They appear identical to me, so nothing is lost by using the GPU engine except for a few seconds.

Export times, both Neural Engine and GPU

I often export reasonably large sets (60 images) several times over, so quick export is important to me. Here are the export times for this D850 image. The GPU is an M1 Max with 32 cores.

| Kind | Time in seconds |
|---|---|
| DeepPrime Neural Engine | 8 |
| DeepPrime GPU | 10 |
| DeepPrime XD Neural Engine | 20 |
| DeepPrime XD GPU | 26 |

Clearly there’s nothing wrong with GPU processing. The time penalty for DeepPrime XD is roughly 2.5x, whether with Neural Engine (20 s vs 8 s) or GPU (26 s vs 10 s).
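
As a quick sanity check on those ratios, here is a small Python sketch that recomputes them from the four timings in the table. The function and variable names are my own, purely for illustration, and the numbers are single-image measurements, not averages.

```python
# Export times in seconds for one D850 image on an M1 Max,
# copied from the table above. Names here are illustrative only.
export_times = {
    ("DeepPrime", "Neural Engine"): 8,
    ("DeepPrime", "GPU"): 10,
    ("DeepPrime XD", "Neural Engine"): 20,
    ("DeepPrime XD", "GPU"): 26,
}


def xd_penalty(engine):
    """How many times slower DeepPrime XD is than DeepPrime on one engine."""
    return export_times[("DeepPrime XD", engine)] / export_times[("DeepPrime", engine)]


def gpu_overhead(mode):
    """How many times slower the GPU is than the Neural Engine for one mode."""
    return export_times[(mode, "GPU")] / export_times[(mode, "Neural Engine")]


if __name__ == "__main__":
    for engine in ("Neural Engine", "GPU"):
        print(f"XD penalty on {engine}: {xd_penalty(engine):.2f}x")
    for mode in ("DeepPrime", "DeepPrime XD"):
        print(f"GPU vs Neural Engine for {mode}: {gpu_overhead(mode):.2f}x")
```

The XD penalty comes out at 2.5x on the Neural Engine and 2.6x on the GPU, while the GPU itself costs about 25 to 30% over the Neural Engine.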

M1 Pro times should be about double that, perhaps a little less (processing speed doesn’t always scale perfectly with core count). I don’t have an M1 Pro to test. Even at double those times, DeepPrime XD GPU export is perfectly viable. We used to deal with those export times for plain old Prime with most graphics cards.
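
To put those per-image times in the context of a 60-image set, here is a rough Python sketch. It assumes images export one after another and that total time scales linearly with the number of images, which a real batch export may not do. The factor of 2 for the M1 Pro is only the guess above, not a measurement, and all names are hypothetical.

```python
# Per-image export times in seconds on the M1 Max, from the table above.
PER_IMAGE_SECONDS = {
    "DeepPrime Neural Engine": 8,
    "DeepPrime GPU": 10,
    "DeepPrime XD Neural Engine": 20,
    "DeepPrime XD GPU": 26,
}

BATCH_SIZE = 60       # typical set size mentioned above
M1_PRO_FACTOR = 2.0   # ASSUMPTION: untested guess that an M1 Pro takes about twice as long


def batch_minutes(per_image_seconds, images=BATCH_SIZE, slowdown=1.0):
    """Estimated minutes for a batch, assuming strictly linear scaling."""
    return per_image_seconds * images * slowdown / 60


if __name__ == "__main__":
    for label, seconds in PER_IMAGE_SECONDS.items():
        measured = batch_minutes(seconds)
        guessed = batch_minutes(seconds, slowdown=M1_PRO_FACTOR)
        print(f"{label}: ~{measured:.0f} min on M1 Max, ~{guessed:.0f} min guessed for M1 Pro")
```

Under those assumptions, the gap between DeepPrime and DeepPrime XD on a full set is a matter of minutes rather than seconds, which is the number that matters when exporting the same set several times.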

Reference

Here are the remaining output files as well as the NEF for anyone who wants to test speed on their own system.

DeepPrime Neural

DeepPrime GPU

DeepPrime XD GPU

Conclusion

There are many ways to work around the DeepPrime XD color cast on Ventura. It’s really up to the photographer to find a solution which suits his/her requirements and budget.

- Downgrade to Monterey (works great)

- Use GPU processing (you’ll want at least an M1 Pro)

- Use DeepPrime (also superb) until the issue is solved

As anyone who works closely with Apple knows, railing at DxO for deep Apple bugs is howling at the wind, futile and childish.