I’ve decided to check out PL7 Elite Version 7.1.0, since I skipped PL6 Elite a year ago because it processed my Pentax K-3 MK III files very slowly. After loading PL7 and running a couple of my Pentax K-3 MK III images through my usual PL5 Elite routines, PL7 seems to process my images roughly 50% faster than PL5, or more. Has anyone else seen this improvement?

Are you referring to faster processing when exporting images with DeepPRIME or DeepPRIME XD? What operating system are you using? What graphics card are you using? Can you give us the approximate export times you are comparing between PL6 and PL7? If you’re using a Mac, what are you using for acceleration?

In general, PL7 processing time is similar to PL6. Some people have noticed slightly faster processing times and others much slower ones. Much of this is probably due to differences between individual hardware and software configurations. The very significant improvement you are seeing is not the result of an enhancement in PL7. More than likely, PL6 processing acceleration was being held back by some factor in your hardware configuration.

Mark

You can run a proper test on your computer with dozens of your raw files instead of just a couple …

My bad, the improvement I was seeing was actually insignificant. The real improvement was in DeepPRIME performance, which was about 10 seconds faster than in PL5. That said, I still might upgrade if the Black Friday discount is substantial.

Larry

Understand that the extent to which you edit an image can also change processing time by several seconds, depending on the number and type of edits. Faster processing may also be the result of updated drivers. It’s been a couple of months since I had access to PL5 on my machine, but I don’t recall PL5 DeepPRIME processing being, in general, significantly slower than in PL6 or PL7 on Windows 10.

Mark

On my machine, PL7 DeepPrime seems to be faster than PL5, but as you said, that depends on how much editing you do. So I’m seriously thinking about buying PL7 as my last editing software and using DeepPrime XD only for special edits.

To test export times properly, select a folder containing e.g. 60 files with varied characteristics (file size, ISO setting, flat/contrasty, etc.) and save the sidecars in DPL 5. Then open DPL 7, import the sidecars you just saved and export all files again.

I ran such tests under several conditions, varying e.g. the number of files processed in parallel, and found that any change influences export times. It is therefore important to keep all settings identical, or the results will be meaningless.

Important: exporting takes longer on a first run, so always run the same export twice to make sure you compare tests fairly.
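A minimal Python sketch of that warm-up-then-measure procedure, assuming you have some way to trigger a batch export and wait for it to finish. PhotoLab exports are normally started from the GUI, so `export_batch` below is a hypothetical placeholder (for instance, a function that waits for you to press Enter when the export completes), not a PhotoLab API.

```python
import time
from typing import Callable, List


def time_runs(export_batch: Callable[[], None], warmup: int = 1, repeats: int = 2) -> List[float]:
    """Run export_batch `warmup` times without timing (to fill caches),
    then `repeats` times with timing, and return the timed durations in seconds.

    export_batch is a hypothetical stand-in for however the export is triggered.
    """
    for _ in range(warmup):
        export_batch()  # first run: warms caches, duration discarded
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        export_batch()
        timings.append(time.perf_counter() - start)
    return timings


if __name__ == "__main__":
    # Dummy workload in place of a real export, just to show the call shape.
    print(time_runs(lambda: sum(range(1_000_000)), warmup=1, repeats=2))
```

Only the timed runs are compared between versions; the warm-up run absorbs the first-run caching effect.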

Nevertheless, I’d not consider export performance to be the main differentiator between DPL versions 5 and 7.

Why? Surely it is the same code processing the same bits? How can doing that take less time on a second run?

Caching in memory

OK, but surely no one processes an image twice, just to get a faster time on the second export?

So I don’t see that this is relevant when it comes to estimating export times.

I think it was mostly intended as an unexpected observation.

Mark.

His statement only refers to obtaining accurate metrics for comparison testing, not to general processing.

Mark

I must be missing something then because, to me, an accurate metric for export time would be the time it takes to do an export the first time.

Not necessarily. I often export the same image multiple times during its development in a single session, to add further refinements or to create multiple versions. But even for those who don’t, it is not clear whether this longer export time applies to every image exported for the first time in a session or just to the first export in a session, regardless of which image is used. In any case, if this is really an issue, it is a Mac-only one. It does not occur in the Windows version.

What is true in the Windows version is that the total time to export a single image is greater than the export time per image when batch exporting. That is because the export process startup overhead is incurred only once, for the first image, rather than for every image when batch processing. On my circa 2016 i7 PC with 24GB RAM and a GTX 1050Ti graphics card, batch mode is around 2-3 seconds per image faster than exporting single images.
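The amortization described above can be written as a simple model: per-image time in a batch = startup overhead ÷ batch size + per-image processing time. This sketch assumes a fixed one-off overhead and a constant per-image cost; the 3 s and 10 s figures are hypothetical, chosen only to show how a single export ends up a few seconds slower per image than a batch.

```python
def per_image_seconds(startup_overhead: float, per_image: float, batch_size: int) -> float:
    """Average export time per image when a one-off startup cost is shared across the batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return startup_overhead / batch_size + per_image


# Hypothetical figures: 3 s startup overhead, 10 s of actual processing per image.
single = per_image_seconds(3.0, 10.0, 1)   # 13.0 s for a single-image export
batch = per_image_seconds(3.0, 10.0, 10)   # roughly 10.3 s per image in a batch of 10
print(f"single: {single:.1f} s, batch: {batch:.1f} s, saving: {single - batch:.1f} s/image")
```

Under this model, the per-image saving approaches the full startup overhead as the batch grows.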

Mark

In other words, on average, 50% of the time the export is below the average time, while 50% of the time it is above average.

Normally not, but we are talking about testing and measurement here. If images happen to have been exported before, caching will speed things up and give a wrong impression when we compare the results against those of an app running the test for the first time.

You could delete the database and cache files before each test to get worst-case measurements, but not everyone wants to do that.

If you just want to estimate export times, anything goes, but to compare on a common basis, that basis must be established first.

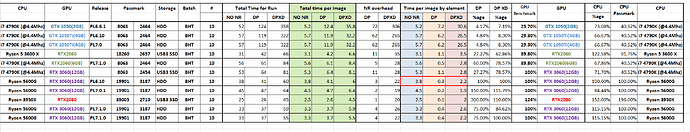

While these tests do not conform to @platypus’s “rule” about using 60 images, they use slightly more than just 1, and all the tests on all the systems used just 2 copies, i.e.

The tests were conducted to see what £120 plus postage had bought me in a second-hand RTX 2060 from eBay.

This will replace my GTX 1050Ti in an i7-4790K with 32GB memory.

I bought an RTX 3060 earlier in the year and then decided to upgrade the CPU which meant a new motherboard, new memory and new CPU, costing as much as the GPU upgrade had cost, i.e. about £330. However, most of my software licences are on the i7 so I have continued to use that as my main machine!

Prior to buying the 3060, I had conducted some tests on my son’s and grandson’s machines, and they are included in the following spreadsheet.

The batch consists of 10 of my Lumix G9 RW2 images, run on the following hardware:

- i7 4790K with GTX 1050 (2GB)

- i7 4790K with GTX 1050Ti (4GB)

- Ryzen 5 3600X with RTX 2060 (my Grandson’s machine, mostly used for gaming)

- Ryzen 5600G with RTX 3060 (12GB) (my “new” machine)

- Ryzen 3950X with RTX 2080 (my Son’s machine, mostly used for architectural modelling)

- i7 4790K with RTX 3060 (benchmarks that I did before building the new system)

- i7 4790K with RTX 2060 (6GB) (my old i7 machine, now with the eBay 2060)

The tests are ‘No NR’, which is edits only (no noise reduction applied), and ‘DP’ and ‘DP XD’, which are runs with DeepPrime and DeepPrime XD noise reduction applied respectively. While not entirely valid, I subtract the ‘No NR’ times from the ‘DP’ and ‘DP XD’ times to get figures for the time the GPU takes to apply the noise reduction algorithms (there is some CPU involvement, but it is impossible to isolate).
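The subtraction step can be sketched in Python as below. It carries the same caveat as the method itself: it assumes the ‘No NR’ work and the noise-reduction work simply add up, which ignores any overlap between CPU and GPU processing. The timings are made-up placeholders, not figures from the spreadsheet.

```python
from typing import Dict


def nr_overhead(times: Dict[str, float], baseline: str = "No NR") -> Dict[str, float]:
    """Subtract the baseline (edits-only) time from every other run to estimate
    how much extra time each noise-reduction mode adds."""
    base = times[baseline]
    return {mode: t - base for mode, t in times.items() if mode != baseline}


# Hypothetical batch timings in seconds, for illustration only.
runs = {"No NR": 40.0, "DP": 55.0, "DP XD": 95.0}
print(nr_overhead(runs))  # {'DP': 15.0, 'DP XD': 55.0}
```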

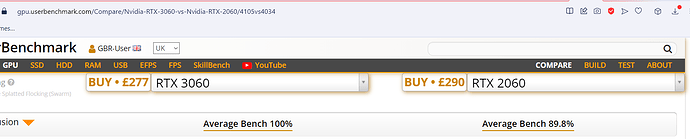

The CPU processing “power” figures I use are the Passmark scores, and the GPU figures come from a GPU benchmarking site that compares GPUs via gaming benchmarks, for users intending to use their GPUs for that purpose, e.g.

The baseline against which all figures in the table are compared, to give the percentages, is the 5600G with the 3060 running PL6.10.
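One way to turn raw times into percentages against a baseline machine is sketched below. The table may define its percentages differently (for example as relative speed rather than relative time); this version assumes time relative to the baseline, so the baseline is 100% and anything above 100% is slower. Machine labels and times are hypothetical.

```python
from typing import Dict


def relative_to_baseline(times: Dict[str, float], baseline: str) -> Dict[str, float]:
    """Express each machine's export time as a percentage of the baseline machine's time."""
    ref = times[baseline]
    return {machine: round(100.0 * t / ref, 1) for machine, t in times.items()}


# Hypothetical DP XD times in seconds for a 10-image batch.
times = {"5600G + RTX 3060": 20.0, "4790K + GTX 1050Ti": 50.0, "4790K + RTX 2060": 25.0}
print(relative_to_baseline(times, "5600G + RTX 3060"))
# {'5600G + RTX 3060': 100.0, '4790K + GTX 1050Ti': 250.0, '4790K + RTX 2060': 125.0}
```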

The results seem to show that the GTX 1050Ti’s performance bears no resemblance to the site’s gaming comparison, while the RTX cards “seem” to track their gaming credentials more closely!

Arguably the RTX 2060 was superseded by the RTX 3060 and that has now been replaced by the RTX 4060, with the power requirements decreasing on each iteration. However, both the 2060 and the 3060 run fine on my Seasonic 550W Platinum power supplies.

When I selected images for testing, I got a set of roughly 60 files and added a few to get exactly 60, which makes it easy to calculate time per image.
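The convenience of exactly 60 files is that the batch total in minutes equals the average time per image in seconds. A trivial sketch, with a hypothetical total:

```python
def seconds_per_image(total_seconds: float, n_images: int = 60) -> float:
    """Average export time per image for a batch."""
    return total_seconds / n_images


total = 7 * 60 + 30              # hypothetical batch time: 7 min 30 s = 450 s
print(seconds_per_image(total))  # 7.5, the same number as the total in minutes
```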

Test images should include files from all combinations of gear, exposure, lighting, etc., primarily for testing customizing features; that I got 60 files is a collateral benefit.

Not necessarily. That’s the median time.
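A quick illustration of the difference, using made-up export times with one slow outlier (e.g. a first, uncached export): the outlier pulls the mean up, so most exports fall below it, whereas the median sits in the middle of the sorted times.

```python
from statistics import mean, median

# Hypothetical per-image export times in seconds; the 30 s value is a slow first run.
times = [8.0, 8.5, 9.0, 9.5, 30.0]

avg = mean(times)     # 13.0
mid = median(times)   # 9.0
below_avg = sum(t < avg for t in times)
print(f"mean={avg}, median={mid}, {below_avg} of {len(times)} exports are below the mean")
```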