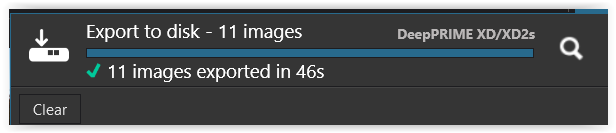

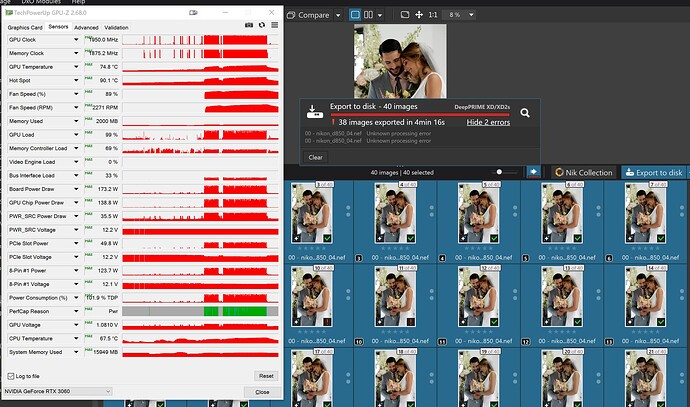

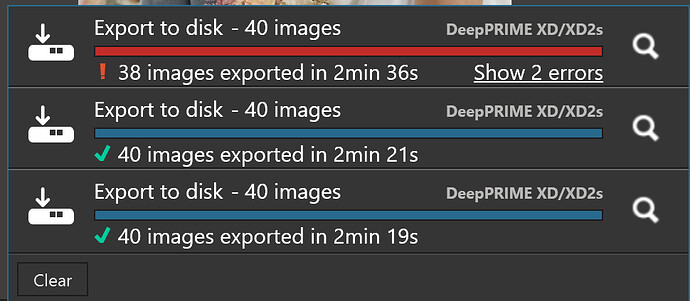

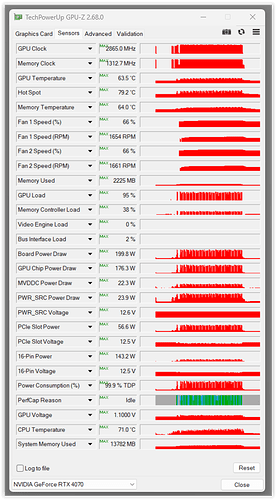

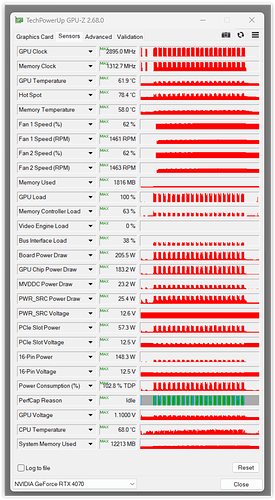

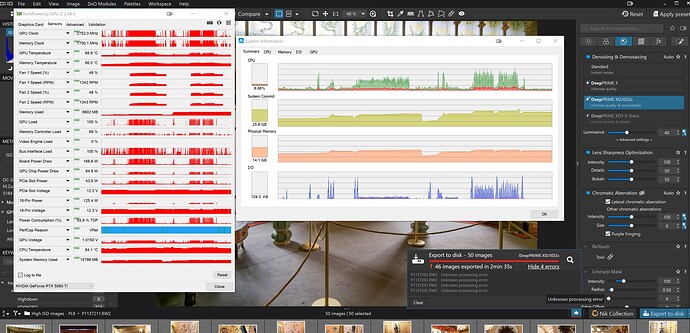

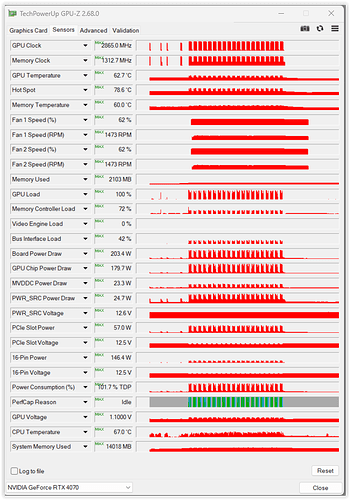

@Wolfgang I don’t believe that running GPU-Z has any impact, so here are the results of three runs on the “Wedding” image.

The first run is the one I reported earlier. The machine was then in Sleep for many hours. The second run started with GPU-Z running, which I quickly terminated; HWinfo was left running throughout that test and there was no failure.

For the last run, HWinfo was terminated and GPU-Z ran throughout the export and no problem was encountered. The same export worker was in use throughout all the runs, but the long sleep would have flushed the VRAM.

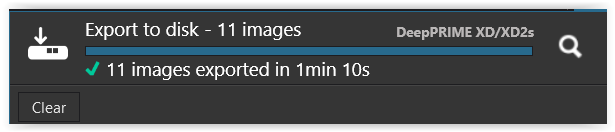

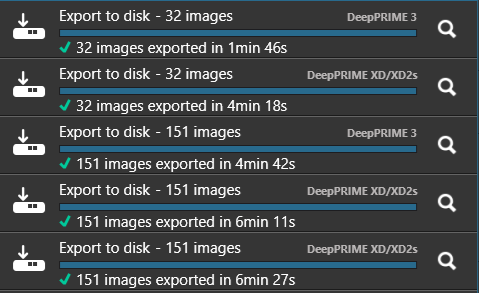

The point is that these are non-AI images that work under PL8 and PL9, except that sometimes they don’t work under PL9!?

My other tests are completely NO AI and NO NR and the images are a mixed group of X-Trans images. They fail predictably at roughly the same image or, more likely, at an image at the same point in the list.

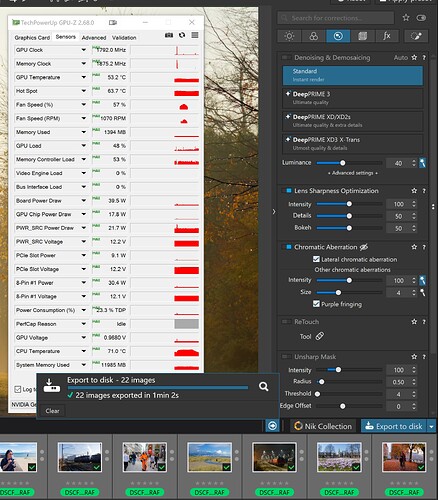

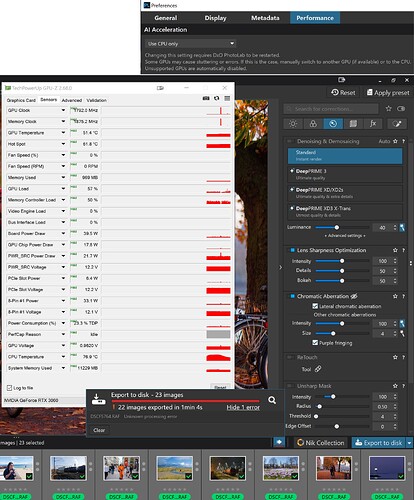

So I repeated the test on those images on the 3060(12GB) with a number of changes:

- The list of images was reduced from 30 to 22, which included but stopped at the failing image, and tested

- Another image was added to create 23 images and tested

- I repeated the second step but with ‘CPU only’ selected and PL9.2 restarted

These tests were on the 3060(12GB).

With 22 images in the List :-

It worked and no longer failed on the same image!?

So I dragged and dropped the 23rd image from the old directory to the new one and

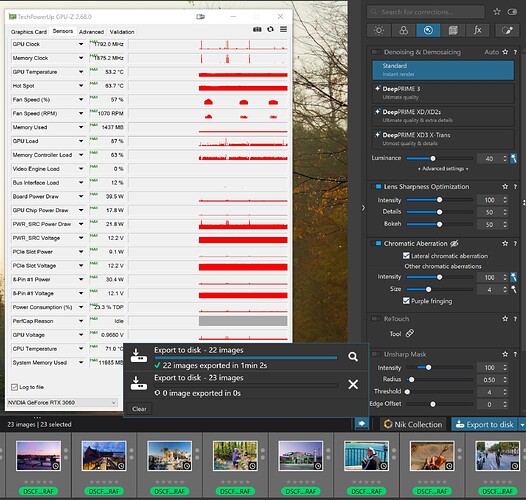

With 23 images PL9.02 “spun its wheels” on the first image:-

The first 2 attempts after the drag and drop resulted in the export process simply getting stuck on the first image, so I terminated the “Export Worker”.

With 23 images in the List :-

On the third attempt (after terminating the export worker), success, or rather the failure that I expected, i.e. images 22 and 23 both failed!

With 23 images in the list and ‘CPU only’ selected:-

This test also failed on the expected image but with a new twist.

The twist was that with the GPU selected both images got an error (image 22 and image 23), but with ‘CPU only’ selected only image 22 failed and image 23 was exported successfully!?

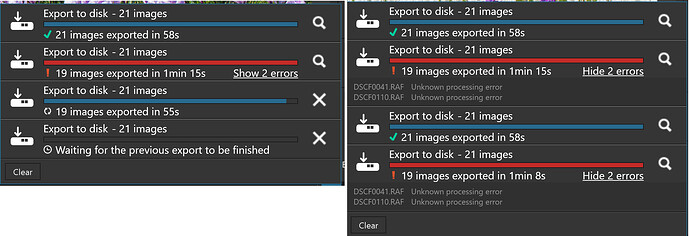

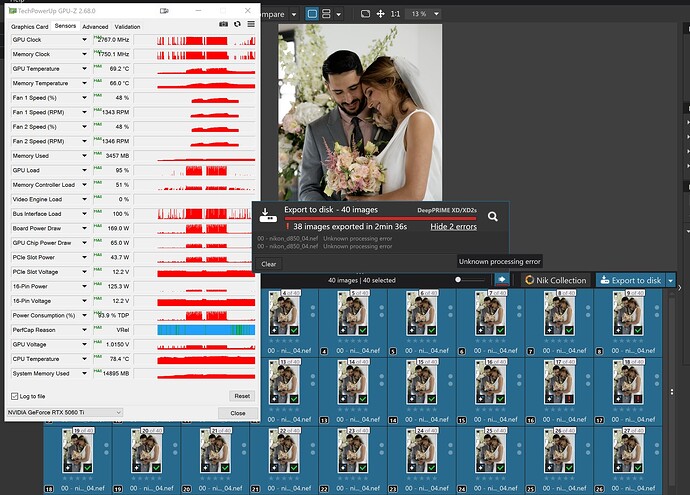

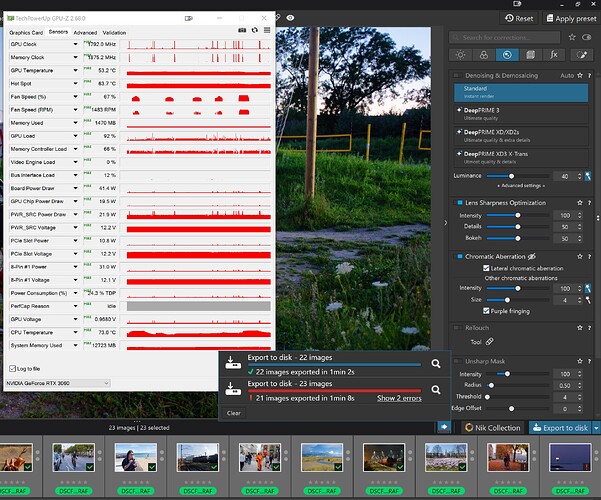

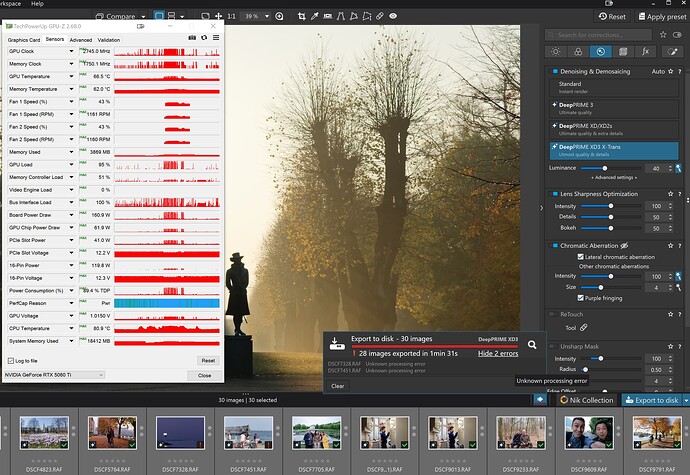

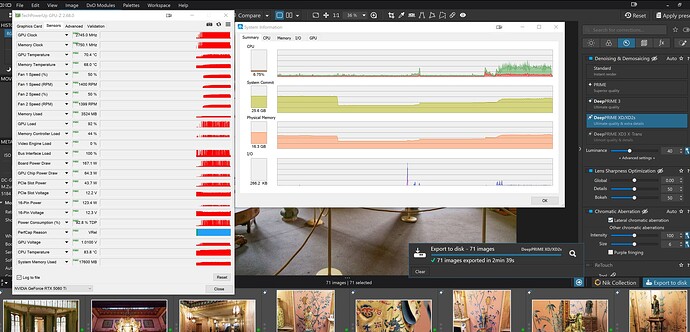

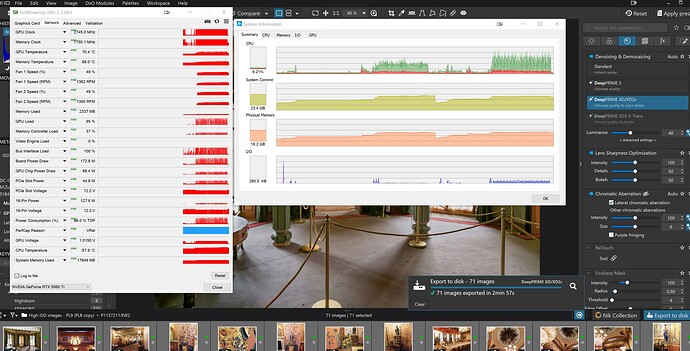

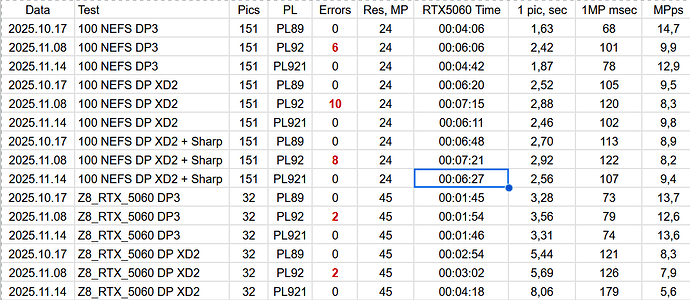

Since this topic is about the 5060 or 5060Ti, I decided I ought to retest on the 5060Ti(16GB).

The first test was a mistake because I had left XD3 active, and the test failed on image 23, the one after the image that consistently fails in the 3060(12GB) tests, but those have NO NR.

The second test was on all 30 images with no NR and that succeeded, whereas it has failed consistently on the 3060(12GB)!?

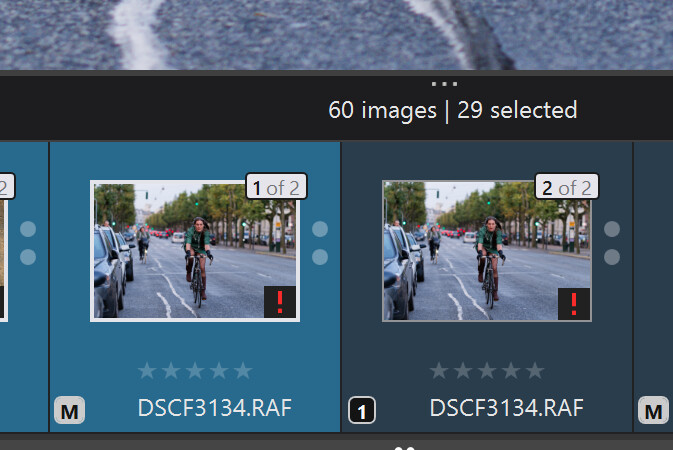

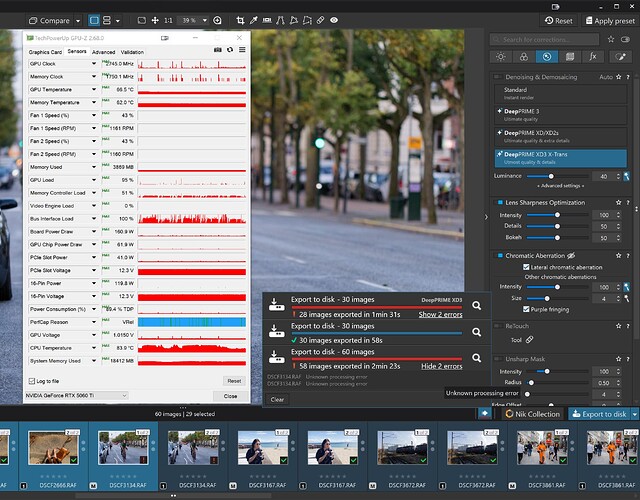

So I selected all images and created VCs, giving a directory of 60 images, then reran the test and it failed on image 29 (another lady on a bike image!!??)

So we have

Why the 5900X with the 5060Ti(16GB) did not fail on image 22 with NO NR I do not know, because the GPU should be irrelevant in NO AI and NO NR tests.