30 years ago, I loved the challenge of putting together a new PC. 20 years ago, I enjoyed choosing one off-the-shelf, installing and setting up all my software. Today I run from all that stuff like it’s the plague; I just want to get back to working on a photo. But I know I can’t avoid the issue forever; I want better noise reduction and I can’t get it on my existing system. I’ve been gone for a couple of weeks, and my trial of DxO PL8 ran out, so now I’d have to invest in a license just to experiment. Confound it!

If you are replacing your computer and have an alternative email address, I suspect downloading and installing the trial on the new computer will give you another 30 days. I don’t think it would make any difference, but I would download the trial again rather than use the copy you have already downloaded.

Mark

@jimh Sorry I didn’t respond sooner, but yesterday we visited our oldest son to help him put the finishing touches to the house he has been renovating, a 110 mile round trip.

I am “afraid” that I still “enjoy” putting my own machine together, but the “fettling” of the software less so when you get so many “wrong” or at least not completely correct answers to the latest problem you encounter on the internet!?

If you have been caught out by the GPU memory restrictions, or by the apparent decline in speed with the older GTX cards in particular, then I am sorry about that; both are unnecessary, in my opinion.

- The 1 GB rule is being applied to 2 GB cards, why!?

- The slowness of the DP XD2s with older cards could have been avoided/mitigated by leaving DP XD in place, which has been done for Xtrans images, that would allow users to choose between XD or occasionally XD2s while being able to benefit from the other PL8 features.

If your machine is a desktop system then swapping to a more modern GPU, even a second hand purchase, as both my RTX 2060s are, is relatively straightforward.

But you do need the necessary GPU power connector for the GPU and, possibly, some additional power from the power supply. My existing 550W Seasonic Platinum power supply handled the RTX 3060 and the 5900X but when I was unable to source another 550W version I managed to acquire a 650W version and that is now coupled with the 5900X.

The good news is that the higher you go up the “food chain” the lower the power requirements are i.e. 2060 needs more power than the 3060, which needs more than the 4060, however, a jump to the 4070 does need more power.

If you are using a laptop then I am sorry but the only route is a new laptop.

While a more powerful CPU is always useful, except with respect to the electricity bills, it isn’t essential while a good GPU is essential if you want to export images without waiting “forever”.

So a short while ago I conducted tests on my 5900X and the redundant i7 4790K with the RTX 2060 and RTX 3060 and PL7 versus PL8 but will publish those in a separate topic.

@mwsilvers I don’t think another download is required but a machine that has never had PL8 installed is! The installation leaves a “marker” so a version of the O/S disk prior to any such installation can be used but not one that has had the installation removed.

Regards

Bryan

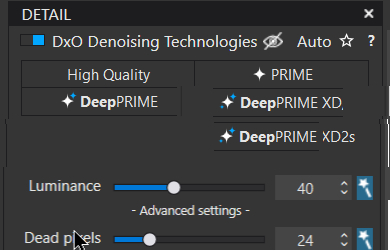

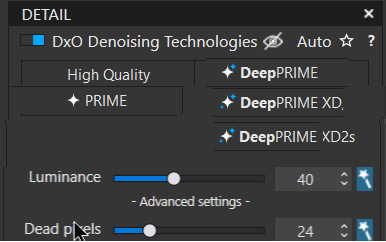

I think the decision to dispense with XD for cameras that were supported for XD2s was likely, in part, a marketing decision. If XD had been left in the mix, users would have to choose between 5 different sets of noise reduction algorithms: High Quality, PRIME, DeepPRIME, DeepPRIME XD, and DeepPRIME XD2s.

For those of us who are very experienced with the development and use of PhotoLab this would not be an issue, but for new users or current occasional users of PhotoLab, so many NR choices could be very confusing. Furthermore, High Quality and PRIME don’t make use of a GPU while the DeepPRIME variants all do, adding to the potential for confusion for the uninitiated.

Then there is the additional potential confusion caused by the fact that, unlike noise reduction from other vendors, DxO recommends using the AI based DeepPRIME variants on 100% of our images, not just those at high ISO. Experience has shown many of us that doing this with DeepPRIME XD added occasional artifacts that were not present or as obvious with DeepPRIME or DeepPRIME XD2s.

I switched my graphics card from a GTX 1050ti to an RTX 4060 early in 2024. As a result I can’t compare the export times between XD and XD2s with that older card. However, when I compare the export timings for the same images with XD in PL 7 and XD2s in PL 8 using my RTX 4060, the timings are similar. I can’t say whether that would also be true for lesser cards. Is it your experience that the timings with lower end cards where the GPU is being used are significantly slower with XD2s?

With regard to discontinuing support of some older GPUs for XD2s, I am ignorant of the reasons for DxO’s decision. However, I realize that as technology moves on some hardware and some operating systems are left by the wayside. This can be frustrating, especially in cases where there doesn’t seem to be a logical reason why older hardware is no longer supported. But I think most of us realize that this happens frequently as newer software and operating systems are released. It is one of the technology realities we all have to deal with.

Mark

@mwsilvers If properly explained the issue of having so many variants could be handled, e.g.

the hardest part is working out how many dots there should be on the icon symbol to avoid a clash between XD and XD2s

So re-arrange my mock-up and put HQ and Prime on the left and all the DP options on the right and the confusion vanishes and shade out XD2s when not available.

- During testing I felt that XD2 was actually creating more artefacts than I could see with XD but my statement towards the end of this post also suggests that it is removing detail!

- My timings consistently showed that XD2 was generally (slightly) slower than XD on the images I was testing, certainly with “big” batches!

What the new implementation has done is to downgrade a GTX user’s export performance by 50% with no options, as I experienced with my GTX 1050Ti card. I believe that the issue might be GTX versus RTX related but DxO are as invisible as ever and no explanations are forthcoming.

But PL8 users have no alternative!

As for the lower memory size cards, DxPL have effectively made a blanket ban on cards designated as “too small”. They caused problems in the past when they downgraded cards to avoid the problem they now attribute the new “ban” to!

Actually my 2GB GTX 1050 card worked fine while other users were automatically downgraded to CPU only!! Now my 2GB (please note 2GB card) is being rejected by the 1GB ban.

So we have

- The 1GB rule is actually being applied to 2GB cards!??

- Am I to believe that the programmers at DxO are incapable of handling “on the fly” situations where problems might arise because of the memory available, or are they just unwilling to tackle the problem?

- The actual memory being used is typically lower than the specified danger limit. I was about to say “in my case”, i.e. Panasonic images but most of my testing has been done with batches of the “Egypt” benchmark image because it appears to offer the hardest challenge for my hardware!

There have been exceptions to my memory usage with my 5900X which has no onboard GPU, i.e. all “normal” monitor activity is routed through the graphics card and typically the usage is over 1GB routinely before a DxPL export is involved when it typically doubles.

@mwsilvers my point is that users now finding that they must upgrade is actually far from inevitable; it is due to some decisions made within DxO that I consider unnecessary.

In this day and age making people upgrade their hardware and add to the pile of discarded PC parts for no good reason is reprehensible

- I consider the move lacklustre and unnecessary or just badly implemented

- I needed to earn extra points towards my eco-warrior badge

- Perhaps DxO would like to buy new hardware for all the users who find they cannot export successfully anymore. There are complaints about the cost of DxPL etc. let alone a new graphics card or a new laptop, with an appropriate GPU.

I feel the following need to be addressed

-

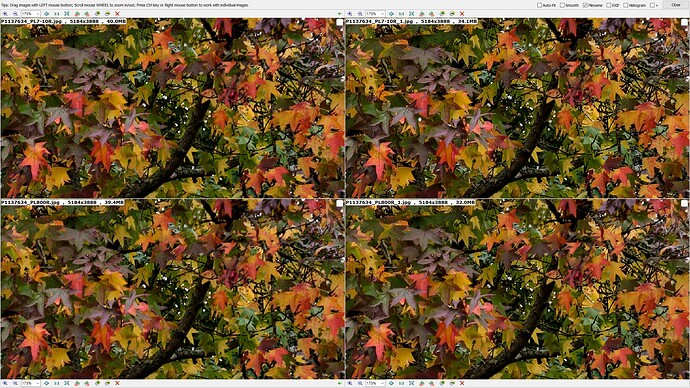

PL8 is adding more CA to my exported images than XD did and neither should be adding any!

-

I still find DP XD2s slightly less sharp than DP XD

-

and if you look at the leaves closely DP XD2S has “cleaned up” some of the markings on the “decaying” leaves.

I’m with you on this, Bryan. I have an 8-year-old PC with a GTX 750Ti (2GB dedicated memory) that worked fine with PL7. It took around 30s per image for DeepPRIME XD exports but I could live with that (and still use the PC while the exports were running). Now I’m restricted to “CPU only” with PL8, which takes at least 3 times longer per image and totally ties up the PC while exporting.

I understand that old technology can’t continue supporting new developments for ever, but as you say this seems to be an unnecessary imposition by DxO given that, as far as I can see, PL8 DeepPRIME XD is no different to PL7 DeepPRIME XD. I’d be very happy if DeepPRIME XD continued to be available with my graphics card and XD2 simply greyed out as suggested.

@SAFC01 If DP XD2s was radically different then … , I was going to write, “I might understand the need to abandon the old”, but if that was actually the case I would suggest the only sensible course of action would be to ensure continuity for existing users, i.e. to introduce the new alongside the old!

I understand only too well why DxO wants to attract new users but 2 existing users upgrading is worth almost one new user purchase and posts like this one do nothing to endear DxO to new users who have already felt “cheated” by other software suppliers @Musashi.

Discontinuities are not good for users nor for user workflows; DxO seem to have lost sight of that in this case.

The change in Beta testing for PL8, where every tester was “siloed” from every other tester, had one bad consequence: no ground swell of user approval but also no ground swell of user disapproval.

In the past some of the more controversial changes have met with a number of users backing each other up in the Beta Test forum, resulting in unpopular changes to old or new features being modified. But no more.

If users of laptops or desktop systems feel that the “so called” 1GB (actually 2GB) limit is unacceptable, or that the performance degradation of GTX cards (that is only based on my experience with my old GTX1050 Ti) needs reviewing, then please start complaining with Support requests to DxO and posts in this or a new Topic.

I am “lucky” that I “retired” my 1050Ti and 1050(2GB) cards and added 2 x 2060 (Ebay purchases) to the new RTX 3060 I bought early last year. But the old cards provided an opportunity to find out what really happens and it isn’t pleasant.

My suggestion is simply to retain DP XD alongside DP XD2s to allow users a period of grace to sort out their future direction with photo editing software and associated hardware.

But @Musashi please don’t return us to the chaos of the past when the rule was applied without warning. We seem to have survived successfully since those modifications were removed, until PL8.

Regards

Bryan

@ALL this topic has had 1.4K views. If a large number of those views come from users affected by the “so called” 1GB limit then please submit a support request to DxO asking for a return to “normal” service, i.e. the re-introduction of DP XD for all users and a better handling of GPU memory issues to avoid a blanket ban on lower memory GPUs.

Actually handling the 1GB limit test so that 2GB cards are not automatically downgraded would be a good start @DxO_Support-Team.

I have created a ticket with German DxO support. At first the lady did not understand the problem. She advised only that I accept the minimum system requirements. She did not understand the discrepancy between the VRAM used without PhotoLab (280MB), the existing VRAM (2GB) and the announced minimum VRAM (1GB). Finally, after many posts, she said that PhotoLab 8 calculates with a constant value for used VRAM (>1GB). PhotoLab 8 does not check the VRAM actually in use.
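As a rough illustration of the discrepancy described above, the sketch below contrasts a check that assumes a fixed amount of VRAM is already in use with one that uses the measured figure. The function names and the 1100MB assumed value are hypothetical (support only said the constant is above 1GB), and this is not DxO’s actual code.

```python
def passes_fixed_check(total_mb: int, assumed_used_mb: int, required_mb: int) -> bool:
    """Check that subtracts a constant 'already used' figure from the card's VRAM."""
    return total_mb - assumed_used_mb >= required_mb


def passes_measured_check(total_mb: int, measured_used_mb: int, required_mb: int) -> bool:
    """Check that subtracts the VRAM actually in use before the export starts."""
    return total_mb - measured_used_mb >= required_mb


TOTAL_MB = 2048          # 2GB card, as in the post
REQUIRED_MB = 1024       # the announced 1GB minimum
ASSUMED_USED_MB = 1100   # hypothetical constant; the post only says ">1GB"
MEASURED_USED_MB = 280   # usage without PhotoLab, as measured in the post

fixed_ok = passes_fixed_check(TOTAL_MB, ASSUMED_USED_MB, REQUIRED_MB)
measured_ok = passes_measured_check(TOTAL_MB, MEASURED_USED_MB, REQUIRED_MB)

print(f"fixed assumption: {'accepted' if fixed_ok else 'rejected'}")
print(f"measured usage:   {'accepted' if measured_ok else 'rejected'}")
```

Under these assumed numbers the same 2GB card is rejected by the constant-based check and accepted by the measured one, which is the gap the support ticket was trying to point out.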

To me it seems there is potential to tweak the software for cards with only 2GB VRAM. We just need enough users who complain about this issue and announce that they will not buy the software because of it. Losing revenue is a good motivation. Let’s see if DxO will offer an update to fix this issue.

@tornanti Thank you. I had intended to put in a support request with my findings but “I got lazy”, so I will write up my test report on this “beautiful” (dull and rainy) day here in the South of England and report here when that is done.

So why are 2GB cards even being rejected if DxO are not even taking into account any usage by the system before an export is even active?

My 5900X has no onboard GPU, hence the X designation and when exporting with (currently) an RTX 2060 it does go just over 2GB but the 5600G processor fitted before it (with onboard GPU) barely reached 1 GB.

Unfortunately there is also a secondary problem that DP XD2s is also much slower on the old Nvidia GTX cards.

So the ideal solution is

- Apply the “so called” 1GB limit correctly so that 2GB cards are not incorrectly penalised even though they conform to that limit or improve the coding so that no arbitrary limit actually exists and the software can warn or fault an export that may cause/causes issues with NVidia memory usage.

- Extend access to the DP XD feature to all users (not just Xtrans) so that they can choose which to use for maximum performance or “better” noise reduction.

Neither are complicated tasks, with the possible exception of the prevention of issues mid export with the memory usage of the NVidia card but if GPU-X can keep track of memory usage why can’t DxO?
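For what it is worth, reading the memory in use on an NVidia card is not hard from outside the application either. The sketch below parses the output of `nvidia-smi --query-gpu=memory.used,memory.total --format=csv,noheader,nounits`; the helper names are my own, the sample line pairs the 1014MB figure from this thread with an assumed 6144MiB card, and it says nothing about how DxO or GPU-X actually do it.

```python
import subprocess

# Real nvidia-smi query; values are reported in MiB, one line per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_line(line: str) -> tuple[int, int]:
    """Turn a line such as '1014, 6144' into (used_mib, total_mib)."""
    used, total = (int(field.strip()) for field in line.split(","))
    return used, total


def free_vram_mib(line: str) -> int:
    """Free VRAM in MiB for one line of nvidia-smi output."""
    used, total = parse_memory_line(line)
    return total - used


def read_first_gpu() -> tuple[int, int]:
    """Run nvidia-smi and return (used_mib, total_mib) for the first GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_memory_line(result.stdout.splitlines()[0])


# Hypothetical sample line: 1014 MiB in use on an assumed 6144 MiB card.
SAMPLE_LINE = "1014, 6144"
print(free_vram_mib(SAMPLE_LINE))
```

Calling `read_first_gpu()` needs an NVidia driver installed; the parsing helpers can be tried on any machine with the sample line.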

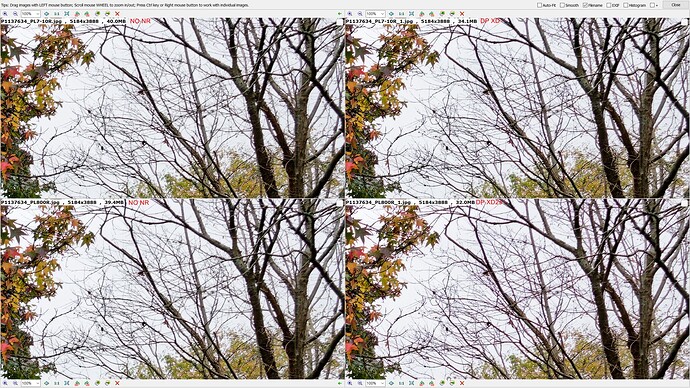

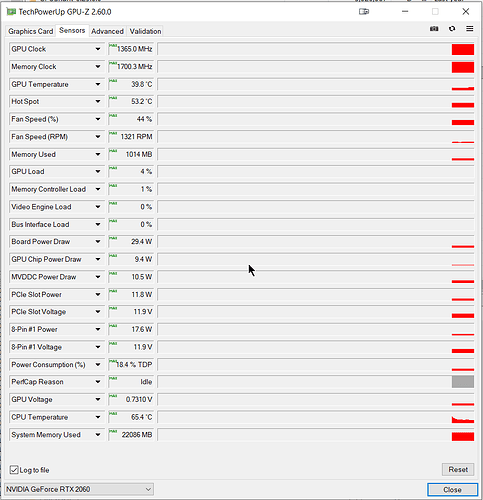

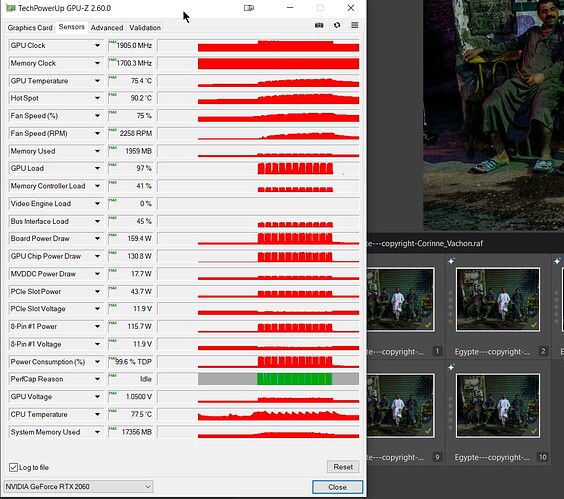

GPU-X is set to show maximum values on this machine, the 5900X with 32GB Ram and an RTX 2060 GPU.

Before the start of any PL exports, with PL8.1 on screen and 1014MB of the RTX 2060’s memory already in use, we have

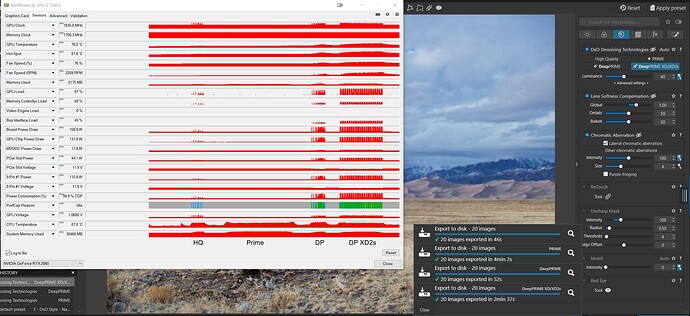

After runs of HQ, Prime, DP and DP XD2s, the real surprise is that there appears to be GPU activity during the HQ export!?

Please ignore the system memory usage. I forgot to disable the RAMdisk, which is configured but hardly ever used, and forgot to do a test without any noise reduction of any kind!

DxO seems to suggest that the various measures brought in have been prompted by lots of users experiencing export problems but I don’t see a lot of evidence in the forum reported by users?

If I measure the VRAM usage of PL6, only 900MB is used (system + PL6). My graphics card is a GTX 750Ti. GPU acceleration is only partly supported.

@tornanti Although your card will struggle with DP XD() if it satisfies the 1GB “rule” (which it does) then it should be allowed to work at its own pace. Downgrading the product to CPU only essentially makes it useless, i.e. exporting will take forever(ish)!?

Incidentally is your GTX750Ti a 2GB model or a 4GB model?

Another user chose to look at upgrading and encountered the increased power requirements demanded by even a modest RTX card and the final bill comes to more than was originally hoped.

However, such upgrades should be in the users control and not forced on the users by faulty software and for laptop users it will mean a complete machine replacement.

The current faulting of 2GB cards is a bug @DxO_Support-Team which needs to be fixed, preferably with an extension to provide an option for DP XD to all users, alongside DP XD2s, to remove the performance penalty associated with DP XD2s for certain GPUs which meet the imposed 2GB rule (sorry 1 GB rule) but fail to have some other characteristic(s) of the RTX range.

The GTX 750Ti has 2GB VRAM. Deep Prime XD export with PL6 takes 1:30min per image. Better than 8min with CPU only.

In 2025 I will need a new PC because of the end of Win 10 support. My current one is from 2012!
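To put the per-image times quoted above (1:30 min with the GPU, 8 min with CPU only) into batch terms, here is a small back-of-envelope sketch. The batch size of 100 is an arbitrary example, and the calculation simply assumes every image takes the same time.

```python
GPU_SECONDS_PER_IMAGE = 90    # 1:30 min per image, from the post
CPU_SECONDS_PER_IMAGE = 480   # 8 min per image, from the post


def batch_seconds(n_images: int, seconds_per_image: float) -> float:
    """Total export time, assuming a constant time per image."""
    return n_images * seconds_per_image


def hours(seconds: float) -> float:
    """Convert seconds to hours."""
    return seconds / 3600


BATCH = 100  # hypothetical batch size
gpu_total = batch_seconds(BATCH, GPU_SECONDS_PER_IMAGE)
cpu_total = batch_seconds(BATCH, CPU_SECONDS_PER_IMAGE)
slowdown = CPU_SECONDS_PER_IMAGE / GPU_SECONDS_PER_IMAGE

print(f"GPU: {hours(gpu_total):.1f} h, CPU only: {hours(cpu_total):.1f} h")
print(f"CPU only is about {slowdown:.1f}x slower")
```

For that example batch the difference is an afternoon versus most of a day, which is why being dropped to “CPU only” matters so much on an older card.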

@tornanti The Win10 thing is an issue for me as well, another supplier forcing a change for no good reason other than whatever the hardware manufacturers/ suppliers “spend” with Microsoft!

The TPM bit is marketing hype, or so I believe.

I have a tiny desktop machine which came with Win 11 and I then needed to “nobble” it to make it look like my Win 10 machines which have Classic Shell (now Open-Shell Menu) installed so they look like “Classic” style

Win 11 seeks to hide the workings of the machine even more but I have managed to turn it back into something more usable for me.

I never use tiles on my PC, fine on my phone and tablets but not on the PC.

If you are happy with DxPL and export enough then aim for a PC with at least an RTX 4060 (or whatever equivalent is available when you make your decision) or add a 4060 to your existing machine but only if the power supply can support it and then take that to your new machine if you build the machine yourself.

I just watched a YouTube video where they criticised Ryzen CPUs, stating that Adobe software runs way better on Intel!

They were looking at high end PCs and video editing in particular!

I will make my support requests later today and cite this and the other Topics where this issue has been discussed.

Regards

Bryan

It has had a long life. My oldest system is the now retired i7-4790K, which I built in 2017, I think, and I added a home built “twin” in 2019 when my oldest son upgraded to a Ryzen Threadripper and I bought the parts from his old system.

That machine is still in use and has a bigger “brother” a Ryzen 5600G built in early 2023, upgraded to a 5900X earlier this year and the 5600G got new parts and went into service around the middle of this year.

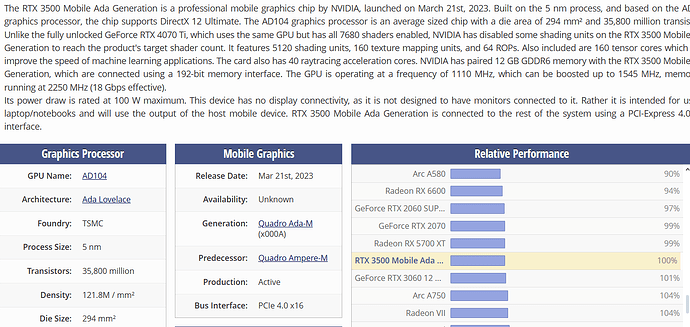

Upgrading a 12-year-old PC with an i7-3770 CPU with a new graphics card makes no sense, especially if the power supply does not deliver >700W. It’s time for a new workstation. My benchmark is my business laptop with an i9-13xxx CPU with 20 cores, 32GB RAM and an RTX 3500 Ada Gen graphics card. It exports images with DeepPRIME XD noise reduction in a few seconds.

I have tweaked all my laptops and PCs with “Open Shell” to get back the Win 7 user interface style.

Maybe, it depends how long you need to wait for the upgrade and you do appear to have an alternative for exporting with your company laptop.

My i7-4790K is only a bit more powerful than your i7-3770, but my 5900X is about 4.4 times as powerful as my i7-4790K and this is what my tests showed (the 5900X is idle most of the time when not running DxPL etc., but the i7-4790K has an average background load of 14-30% CPU and is suffering from a severe memory leakage problem!).

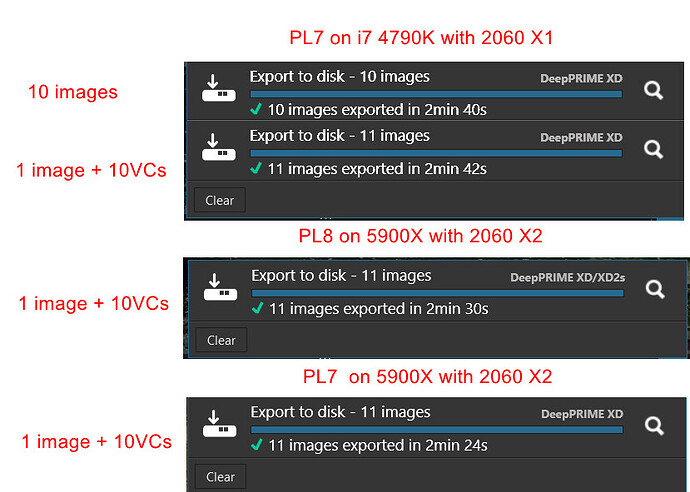

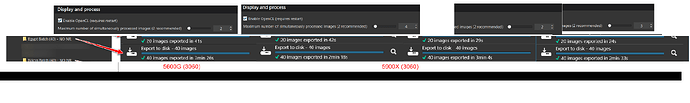

These tests were conducted with the Egypt image from the Google benchmark for DxPL GPU performance. (edited to include PL7 figures from the 5900X)

With respect to your (company) laptop

I believe (read) that the RTX 3500 is based on the RTX 4070 chipset but delivers the performance of the RTX 3060.

and my guess is that the laptop is an i9-13900H which is about 3.30 times as powerful as my i7 4790K and the GPU is about the same as my 3060 which is currently in a drawer (it needs to go into the 5600G for testing before going back to the 5900X).

The 2060s are both eBay purchases for £120 and £110 and are two fan (X2) and one fan (X1).

My wife is currently still using my old i7-3770 but not for image editing but I could measure its power usage.

With respect to the >700W power supply I was running my 5900X with the RTX 3060 on a 550W power supply for a while and it seemed perfectly happy.

However, the rebuilt 5600G machine was making too much noise with its old power supply, so I tried to source another 550W Seasonic Platinum for that machine.

I failed to find one, Seasonic appears to have phased out that model, but I could get a 650W and that actually went into the 5900X for obvious reasons and the 5600G got the 550W Seasonic.

However, the 5900X seemed perfectly happy with the 550W PSU and the RTX 3060 and it is fitted with 3 HDDs (2 SSDs and 2 DVD drives!)

The RTX 2060 doing PL8 XD2s exports of a short batch of 10 of the Egypt benchmark image yielded the following maximum figures for the GPU

The GPU power draw appears to be 159.4W (edited: this increased to 163.1W during the PL7 export run) and for the RTX x060 series I believe the power draw is supposed to be falling from generation to generation, except that might change with the RTX 5060. So fitting a 550W power supply leaves roughly 390 watts for the CPU and other components.

A jump to the RTX x070 series would definitely require more power.
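The headroom figure above is just a subtraction, sketched below for both measured GPU draws. It is a simplification: it treats the PSU rating as fully usable and ignores efficiency, transient spikes and per-rail limits, and the function name is my own.

```python
def psu_headroom_w(psu_rating_w: float, gpu_draw_w: float) -> float:
    """Watts left for the CPU and other components after the GPU's peak draw."""
    return round(psu_rating_w - gpu_draw_w, 1)


PSU_W = 550.0                # the 550W Seasonic Platinum
GPU_PEAK_PL8_W = 159.4       # RTX 2060 peak during the PL8 XD2s run
GPU_PEAK_PL7_W = 163.1       # RTX 2060 peak during the PL7 run

headroom_pl8 = psu_headroom_w(PSU_W, GPU_PEAK_PL8_W)
headroom_pl7 = psu_headroom_w(PSU_W, GPU_PEAK_PL7_W)

print(f"PL8 run: {headroom_pl8} W left")
print(f"PL7 run: {headroom_pl7} W left")
```

Swapping in the rated draw of a different card gives a quick first check before buying, although the card maker’s recommended PSU size is the safer guide.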

In the meantime DxO should fix the 1GB limit bug and not by simply changing the wording and making it a 2GB limit, although 2GB cards should arguably still pass that criterion!

Plus if they restore DP XD (i.e. re-open the capability to all users) and apply a sensible limit then PL8 should not perform as badly on GTX cards as it currently does, plus users can choose DP XD2s if it actually improves certain of their images…

I have a question that’s a bit off topic, but I’ll ask anyway…

I am curious if you can notice any real speed difference for PL and other software between the Ryzen 5600G to 5900X ?

I have a PC with an overclocked 5700G at a permanent 4.1GHz and have sometimes wondered if I should replace it with the 5900X

@TorsteinH It is slightly off topic and the author of the topic will be receiving all the posts whether they are pertinent or interesting or not but …

Your 5700G (24544, 3282) is a bit faster than my 5600G (19872, 3190), versus the 5900X (39103, 3470), using the Passmark figures (CPU Mark, single thread rating).
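To make those Passmark numbers easier to compare, the sketch below turns them into ratios. It assumes the first figure in each pair is the multi-thread CPU Mark and the second the single-thread rating, and the names are only for illustration.

```python
# (multi-thread score, single-thread score) as quoted in the post;
# the interpretation of the two columns is an assumption.
SCORES = {
    "5600G": (19872, 3190),
    "5700G": (24544, 3282),
    "5900X": (39103, 3470),
}

MULTI, SINGLE = 0, 1


def ratio(faster: str, slower: str, column: int = MULTI) -> float:
    """Score of one CPU divided by the score of another."""
    return SCORES[faster][column] / SCORES[slower][column]


multi_5900x_vs_5700g = ratio("5900X", "5700G")
multi_5900x_vs_5600g = ratio("5900X", "5600G")
single_5900x_vs_5700g = ratio("5900X", "5700G", SINGLE)

print(f"5900X vs 5700G, multi-thread:  {multi_5900x_vs_5700g:.2f}x")
print(f"5900X vs 5600G, multi-thread:  {multi_5900x_vs_5600g:.2f}x")
print(f"5900X vs 5700G, single-thread: {single_5900x_vs_5700g:.2f}x")
```

On these figures the gain from a 5700G is mostly in multi-threaded work, with only a small single-thread difference, and a benchmark ratio will not translate directly into DxPL export times.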

I feel that the 5600G was well matched to the 3060GPU and a nice change from my old i7-4790K.

The differences in CPU contribution to DxPL is like this (see snapshot below) where the NO NR figures are essentially free of any GPU involvement particularly with the 5600G and 5700G with their onboard GPU (no use for exporting but fine for driving the monitors)

This is for DP XD (PL7) for a batch of 40 of the Egypt image.

The 5900X has fallen in price since I bought it and can now be purchased for £199 in the UK.

But it may need additional cooling and may put the power supply under pressure but will improve one element of the DxPL export process and should help with editing activities and is certainly noticeable just doing any task.

In my case it initially went in with the 5600G cooler and a 550W Seasonic Platinum power supply and seemed fine but the CPU fan revved up while exporting so I replaced the cooler with another air cooled unit but with twin fans but the CPU is running hotter than I like, so I might have to rethink that!?

When I rebuilt the 5600G I decided that the power supply was too noisy and tried to buy a new Seasonic, as I stated above, and the upshot of that was that the 5900X now has a 650W Seasonic Platinum and the 5600G is back on the 550W Seasonic Platinum.

If you have the cash, and an adequate power supply and an adequate cooler then either wait for the price to fall even lower or take the plunge now but you might have to budget for more than just the CPU.

PS:- There were other discussions about performance, heat etc. here: “PL8.2 (Win) Potential DP XD2s change in speed but errors when processing VC batches”

Thank you for a well-put answer. I will probably postpone this upgrade for quite a while, if ever… Not because my power supply is not big enough at 650W, but because it will probably cost more time and money than it’s worth. (I will need a new CPU cooler as well.)

@TorsteinH It is not a given that you will definitely need a new cooler. My original on the 5600G is the middle one of the group below

and the earlier one below that was fitted to one or other of my two i7-4790Ks.

I replaced the H412R (now back on the 5600G) with the Assassin but could only realistically fit the fans in a slightly odd way, effectively with the first (push) fan in the middle and the second (pull) fan at the back, not as shown in the snapshot.

I also have a single unit water cooler (but 2 fan, push pull) but would need to find the old bits to see if it could be fitted to the 5900X. The water cooler kept an old heavily overclocked Intel i7 2700K under control. At 3.5GHz the 2700K takes 95W and I was running it at 4.5GHz; it would actually run Prime at 5GHz but would soon collapse.

Obviously the 5900X is very much from the older breed of AMD processors (AM4) and the board only comes with PCIe 4 NVMe and GPU slots, and typically only one of each, but not changing the motherboard should mean no (or limited) licence issues.

To me that is one of the biggest problems with upgrading, sorting out licences.

Fitting the 5900X was easy but sadly I didn’t test the temperatures with the H412R fitted but temperatures are currently running at 85 - 89 degrees C when exporting from DxPL with the Assassin cooler.

However, the total processor usage is currently 3% and the CPU temperature is showing as 60 C so something isn’t right. A job for tomorrow, sorry later today.