Good morning,

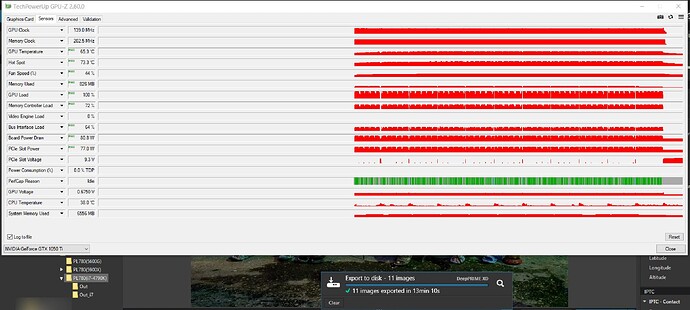

First, my config: Intel i5-2400 / GeForce GT 1030 2 GB / 6 GB DDR3 / Windows 10 / up-to-date drivers.

With PhotoLab 7, DeepPrime XD works without problems in GPU mode (it’s “slow” compared to high-end configs, but much faster than in CPU mode).

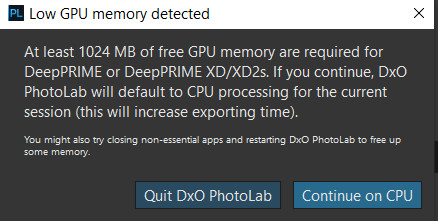

With PhotoLab 8 (trial version), I get the following message at startup (translated from French):

Title: “Low GPU memory detection”. Message: “At least 1024 MB of free GPU memory is required for DeepPrime or DeepPrime XD/XD2s. If you continue, DxO PhotoLab will use the default CPU processing for the current session (this will increase the export time).”

Available buttons: “Exit DXO Photolab” and “Continue on CPU”.

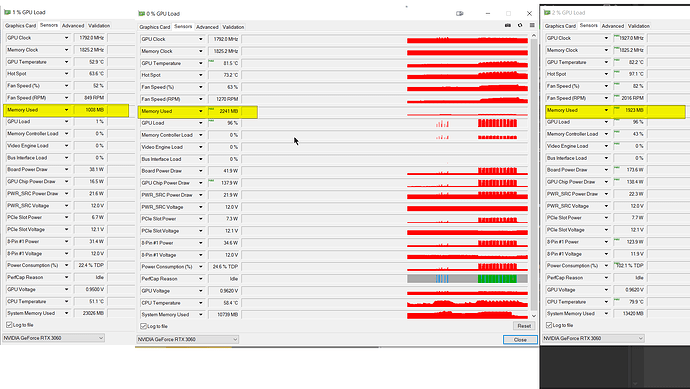

In the Windows Task Manager, under the “Performance” tab > “GPU”, only 0.3 GB of memory is used (so about 1.7 GB remains free).

In the DxO.PhotoLab.txt log, I find the following error (in Franglais :)): “Windows Error when calling Device Initialization : Paramètre incorrect.” (“Paramètre incorrect” is the French Windows wording for “The parameter is incorrect”.)

2024-09-18 19:12:18.875 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | Reported DXGI device: NVIDIA GeForce GT 1030

2024-09-18 19:12:18.875 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | DXGI Device available, memory : usage 53596160, current reservation 0, budget 1765693440, available for reservation 934778880

2024-09-18 19:12:18.875 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | Reported DXGI device: Microsoft Basic Render Driver

2024-09-18 19:12:18.875 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | DXGI Device available, memory : usage 0, current reservation 0, budget 2879402804, available for reservation 1519684813

2024-09-18 19:12:18.942 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | InferenceDevice: selected inference device is NVIDIA GeForce GT 1030 (1.9 GiB, type=Discrete GPU, id=0x8062)

2024-09-18 19:12:18.990 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | Using adapter NVIDIA GeForce GT 1030

2024-09-18 19:12:18.990 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | VRAM Reservation is enabled: 500000000

2024-09-18 19:12:18.990 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Error | Windows Error when calling Device Initialization : Paramètre incorrect.

2024-09-18 19:12:18.990 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | Reported DXGI device: NVIDIA GeForce GT 1030

2024-09-18 19:12:18.990 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | DXGI Device available, memory : usage 53596160, current reservation 0, budget 1765693440, available for reservation 934778880

2024-09-18 19:12:18.990 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | Reported DXGI device: Microsoft Basic Render Driver

2024-09-18 19:12:18.990 | DxO.PhotoLab - 13120 - 1 | DxOCorrectionEngine - Info | DXGI Device available, memory : usage 0, current reservation 0, budget 2879402804, available for reservation 1519684813
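
To make the byte counters in these log lines easier to read, here is a minimal Python sketch that parses the GT 1030 memory line and converts each value to MiB. It is only a sanity check on the numbers: I don't know which of these values PhotoLab actually compares against the 1024 MB from the dialog, so the `REQUIRED_MIB` comparison below is an assumption on my part, and the helper names are made up for this example.

```python
import re

# Log line copied from the DxO.PhotoLab.txt excerpt above (GT 1030 entry).
LOG_LINE = (
    "DXGI Device available, memory : usage 53596160, current reservation 0, "
    "budget 1765693440, available for reservation 934778880"
)

# Assumption: the 1024 MB from the dialog, read here as MiB. Which log
# field the application really checks against it is NOT known.
REQUIRED_MIB = 1024


def parse_dxgi_memory(line):
    """Extract the four byte counters from a 'DXGI Device available' line."""
    pattern = (
        r"usage (\d+), current reservation (\d+), "
        r"budget (\d+), available for reservation (\d+)"
    )
    match = re.search(pattern, line)
    if match is None:
        raise ValueError("not a DXGI memory line")
    keys = ("usage", "current_reservation", "budget", "available_for_reservation")
    return dict(zip(keys, (int(v) for v in match.groups())))


def to_mib(num_bytes):
    """Convert a byte count to mebibytes (1 MiB = 1024 * 1024 bytes)."""
    return num_bytes / (1024 * 1024)


memory = parse_dxgi_memory(LOG_LINE)
for name, value in memory.items():
    print(f"{name}: {to_mib(value):.1f} MiB")

# Hypothetical check: compare each counter with the assumed threshold.
for name in ("budget", "available_for_reservation"):
    status = "below" if to_mib(memory[name]) < REQUIRED_MIB else "at or above"
    print(f"{name} is {status} {REQUIRED_MIB} MiB")
```

With the values from my log, the budget comes out at roughly 1684 MiB, while “available for reservation” is only about 891 MiB, which is under 1024. That might be what triggers the warning even though Task Manager shows about 1.7 GB free, but this is a guess.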

Thank you for your help.