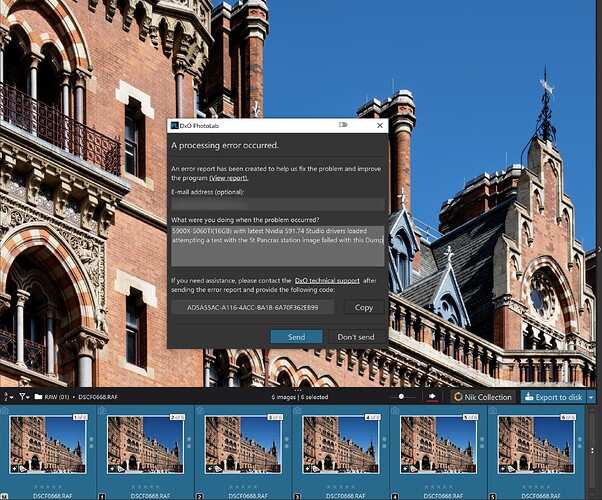

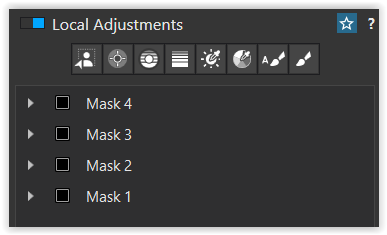

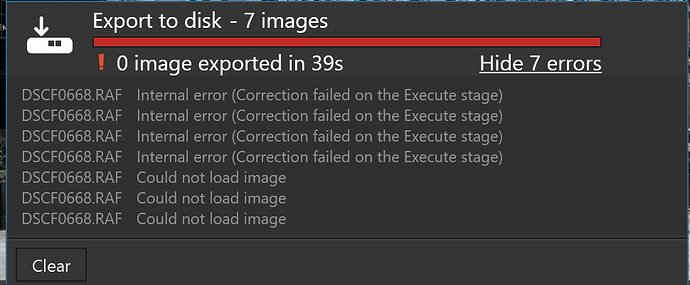

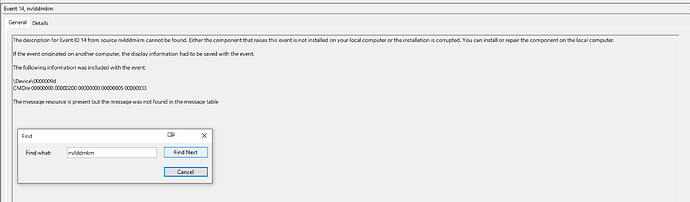

@Wolfgang, with the DOP you supplied I got a full house… of errors (with XD2s).

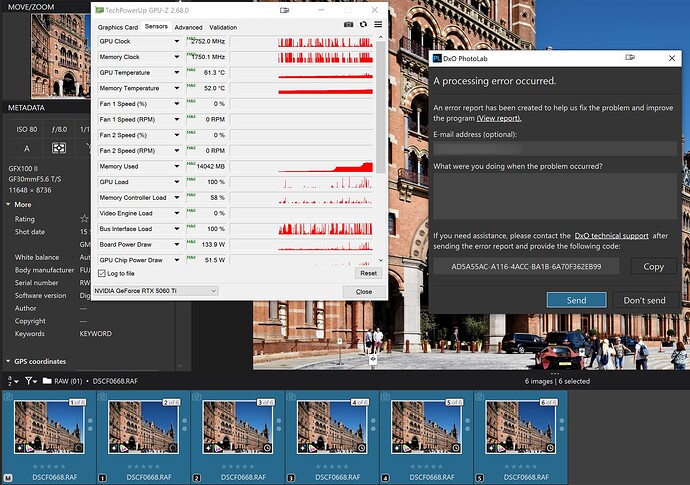

Your DOP has the first image (the [M]aster) set to Standard NR and all the rest to XD2s, so I changed them all to No NR, and nothing got any better.

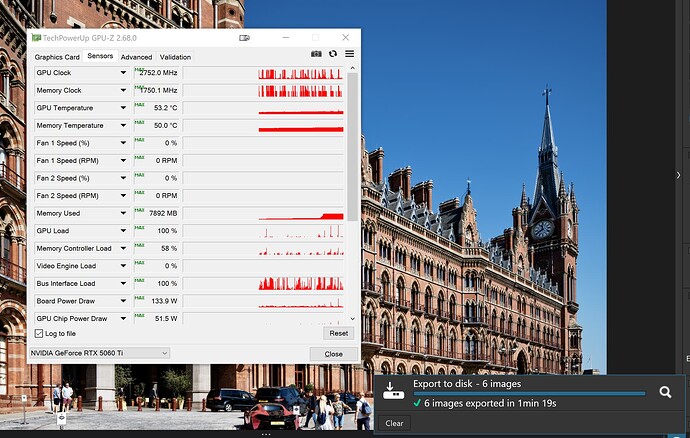

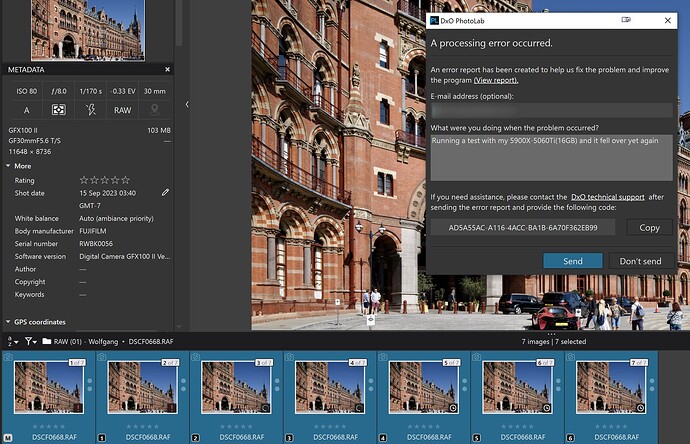

I really don’t know what is “special” about my system. The test images are held on the N:\ drive, a PCIe 4 NVMe, and the outputs (which, with the St. Pancras image, amount to basically nothing) also go to that drive, which also houses the database and cache.

I am running Win 10 on the 5900X (32GB) / 5060Ti (16GB) system.

I copied the images to the F:\ drive, an HDD, just to see if that changed anything, and it didn’t (as expected).

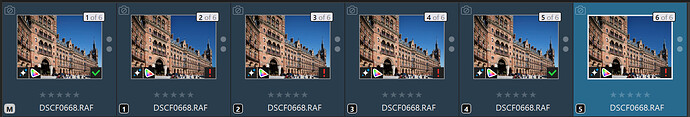

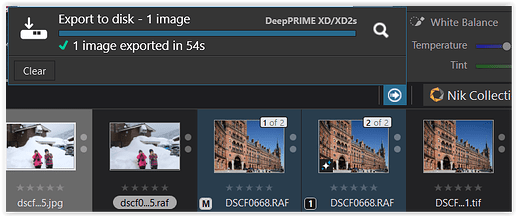

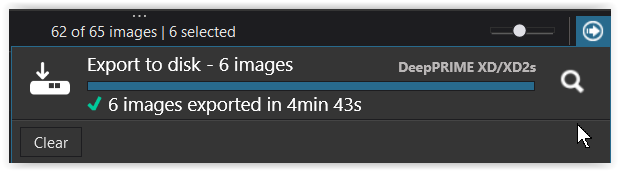

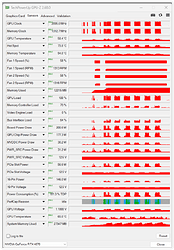

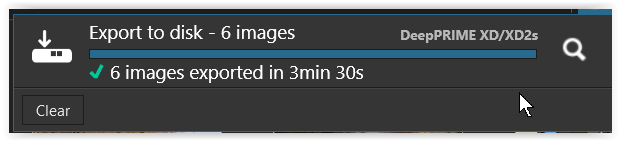

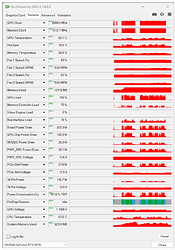

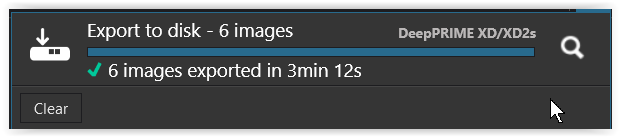

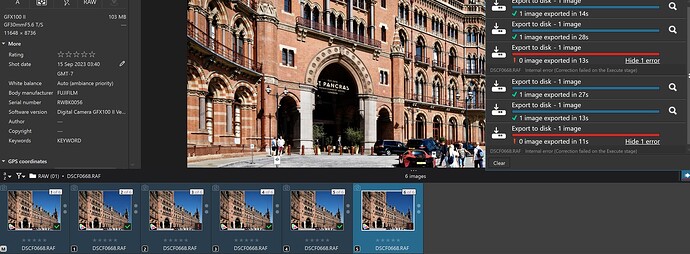

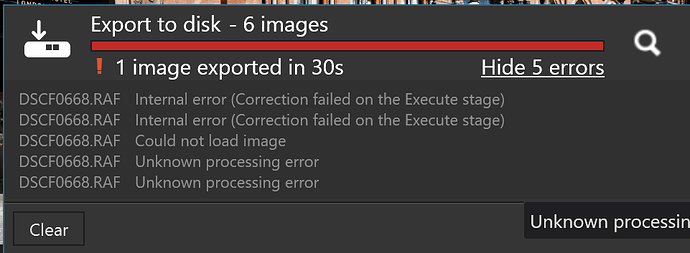

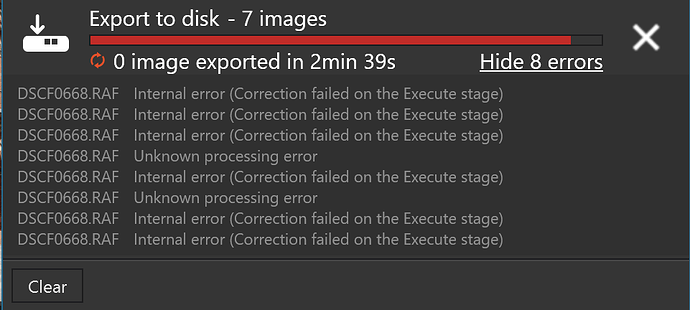

So I cleared the database, reran my test data (on F:) and got:

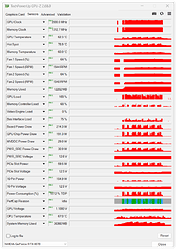

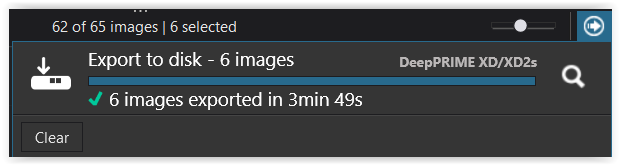

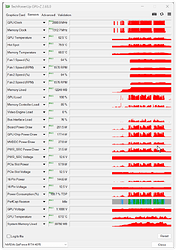

With your test I got (you guessed it) an even slightly worse result, because it dumped as well.

@IanS, I bought the 5060Ti (16GB) when it became clear that I was never going to be able to use the 2060 (6GB). My 3060 (12GB) moved from the 5900X into my 5600G, the 2060 (6GB) went onto the “retired” pile, and the 5060Ti (16GB) went into the 5900X.

Where others were having problems, the 5060Ti (16GB) wasn’t, and any remaining problem images started working with successive releases of PL9 and newer Nvidia drivers.

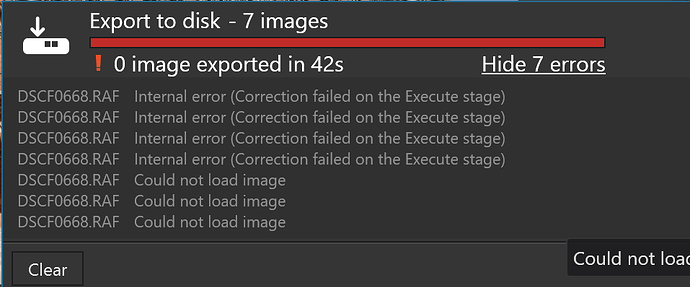

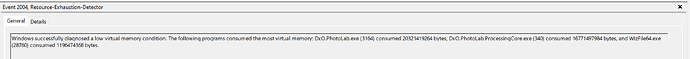

All was going reasonably well until @Wolfgang discovered the monster St. Pancras station image and I started to have problems with the 5060Ti (16GB) in particular.

I still believe that the 5060Ti (16GB) offers the best compromise between VRAM (16GB) and price, but for one reason or another this image keeps failing on my machine, while others with both “lesser” and greater machines appear to be O.K.!??

I will revert to earlier drivers later today or tomorrow and see if they are any better.

I am afraid that not all the problems can be laid at the door of VRAM usage and Nvidia drivers; DxO still has work to do, or so I believe.

If the price is right, secure the 5060Ti (16GB) before prices rise.

In the U.K. the Asus 5060Ti (16GB) appears to be available for £399.00, which was the price of most 5060Ti cards when I was looking, but I managed to secure that Asus card for £369.99, the lowest price I could find for the 5060Ti (16GB) at the time.