Hi

New here

My 10-year-old PC was struggling with PL8, and now with PL9 it is nearly unusable. Would the Dell with a Core Ultra 7 265K, an RTX 5070 12GB and 32GB RAM be okay for Fuji X-T3 raw images (30-50MB), especially for denoising and the new AI masks? And would it be nearly lag-free for general editing?

I realize I might not get an exact answer.

Thanks in advance

Welcome to the DxO USER Forum, Rob.

This is the key component (GPU) … and that should be plenty of grunt!

The only issue is the problem PLv9 is encountering with the latest Nvidia drivers (see here).

@John-M I am afraid that, with the current fiasco, which might be resolved with better coding from DxO, I would be less concerned with the GPU processor and more with GPU memory!

My RTX 3060 seems to hold up reasonably well, but it has 12GB, and with sky selection and XD2s denoising I have seen GPU memory usage reach 11GB.

If it were me, I would be looking to upgrade to a 16GB GPU if the price is not too high. Without AI, 8GB should be enough; with AI, 12GB might just be enough now and may be more than enough after DxO do some work. But I was only testing with a single sky AI selection, not using AI extensively!

Thanks

The 5070 Ti 16GB is the alternative, which is getting a bit expensive. I might hang back a while and see if DxO sort it out.

I’m running PL9 with an RTX 4060, which only has 8GB of memory. While AI Mask application and exports are running a bit slow on my old Windows 10 machine, it is still reasonably quick. The big fix for me was replacing the current RTX Studio driver with 572.83 from April 2025, which resolved almost all performance issues, internal errors, and crashes.

I believe AI masking still requires some additional performance tweaking by DxO. For a newly created and very resource-intensive tool, the need for that is neither surprising nor unusual.

A recent example of this, of which I am personally aware, was the original local implementation of generative AI in ON1 Photo RAW.

ON1 initially pissed off a huge number of users, who quickly tired of the software generating bunnies, hearts or other completely irrelevant and poorly structured objects in the middle of replaced areas of their images. Within the next several weeks, many or perhaps most of the initial problems with that tool were resolved. I am sure ON1 had tested significantly before releasing the software and were not expecting their local generative AI feature to fail so spectacularly.

Mark

That upgrading only works for PCs, not laptops like mine.

It's only a year old and still being sold as a top-of-the-range Dell.

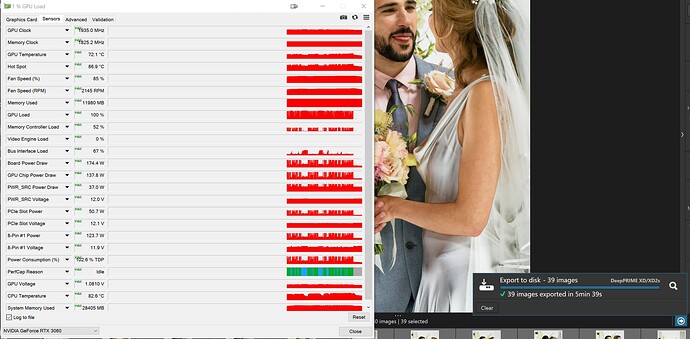

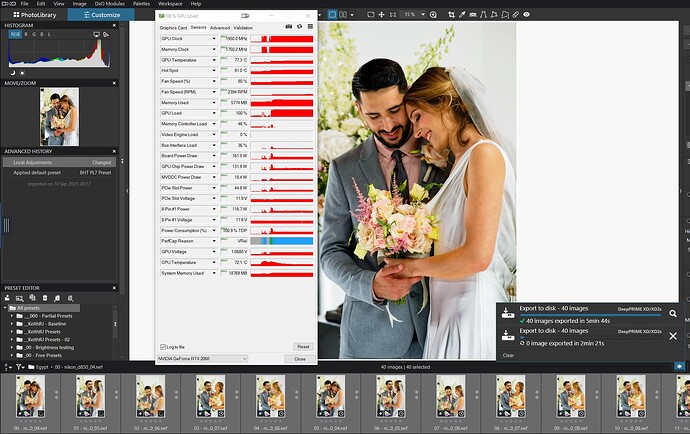

@mwsilvers The following runs were on my 5900X with a 12GB 3060 card. The AI mask was around the wedding dress and had been copied to all 39 images.

This particular test reached the highest amount of GPU memory used.

The second test was with a single image and multiple selections (about 3 or 4) and not quite so much memory was used.

I haven’t formalised the tests yet so that I can get a set of “consistent” results but where I used to consider DxO’s recommendations as OTT I don’t feel that any more.

@John7 I am sorry for any laptop users and I have yet to attempt to repeat the tests on my 5600G which has a 6GB RTX 2060.

Am I being unnecessarily pessimistic? Probably, but if anyone is off buying new kit, it might be worth waiting a little longer.

I get the feeling that DxO, because of how they are programming, need much higher specifications than other firms producing simpler programs. The very rapid increase in specs over the last few years goes beyond what the modest improvements to the program (even if they actually worked) would justify. I get the feeling computing power is taking the place of good programming. People like me have to decide: do we stay on the endless cycle of increasing computing power, at much greater cost than the annual cost of the programs that demand it in place of good programming, or hop off the cycle? I must admit I had been unsure about going for 9, but HEIC looked great: one program for all processing. Pity reality hit when its implementation turned out to be unworkably poor so far, and then the AI mess on top!

I am still using an i7 6700K (albeit overclocked to 4.5 GHz thanks to undervolting and a Noctua cooler), 32GB RAM and an RTX 3070. PL9 performance is perfectly acceptable.

The plethora of bugs isn’t acceptable, of course, so I am waiting before paying for the upgrade, but performance is quite OK indeed even at the current stage.

If it helps, I’m still using an E3-1231 v3 (launched 2014), 32GB RAM and a 1080Ti.

I wouldn’t say performance is lightning fast in v8 or v9, but it works acceptably (as you say, ignoring the bugs; and my AI masking doesn’t work at all, as I’m not downgrading drivers for one program).

I’d not be too concerned about that, Bryan … If DxO can’t get PL working efficiently with GPUs configured with at least 8GB (or 12GB at most), they’d be limiting their customer base VERY significantly. I reckon it’s just a matter of giving them some time to sort out a few issues (especially with Nvidia devices, it seems).

@Lucabeer My i7-4790K, the i7 6700K and the E3-1231 v3 have roughly the same PassMark score, 10,000 or less. The last release of PhotoLab I installed on my i7 was PL7, and it runs with a 2060 (6GB).

The 1080Ti is potentially a bit faster than the 3060, and the 3070 is about 1.45 times as powerful as the 3060, at least according to gaming benchmarks. My 5900X is paired with the 3060 (12GB) and my 5600G with a 2060 (6GB).

I was concerned about the GPU memory used in my tests documented above because I was not sure which driver was installed at the time. The 3060 is now running 572.83, but I think that was installed after the tests shown above.

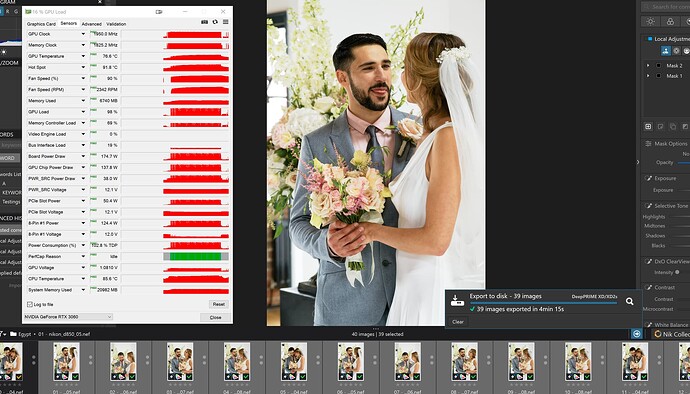

A rerun gave the following:

This shows a lower GPU memory usage on the 3060 of 6740MB, a big improvement on the earlier test figure.

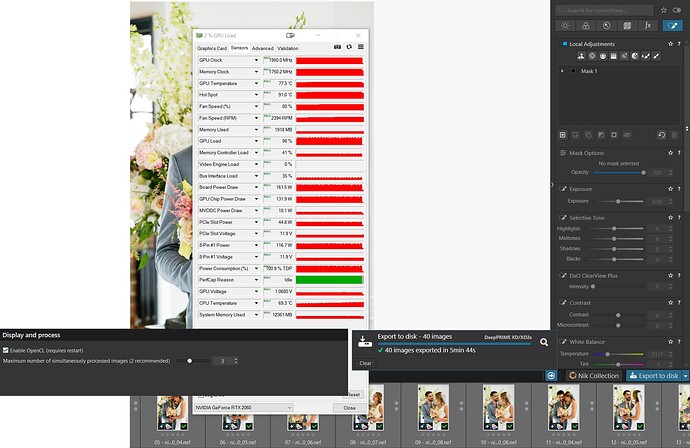

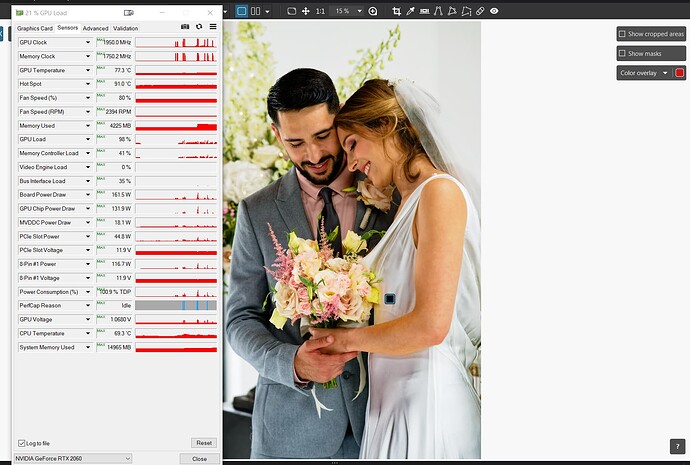

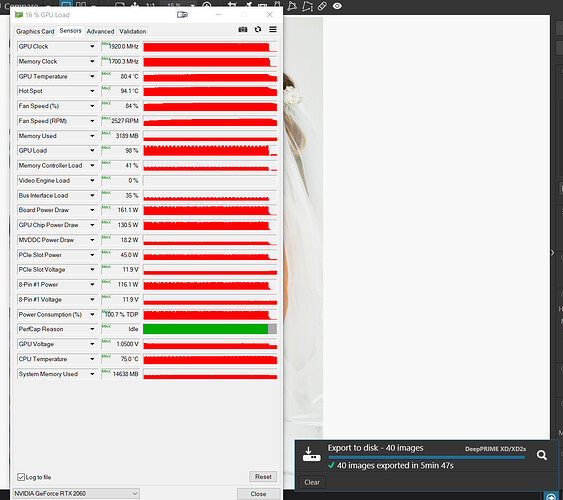

So last night I switched to my 5600G with a 2060 (6GB) GPU, loaded the 572.83 drivers and ran some tests with both 2 and 3 export copies. GPU memory usage was almost identical in both cases, at around the following figure, just below the 6GB ceiling (and I managed to select all 40 images this time).

This morning I checked the idle memory usage figures on the 5600G (which has an iGPU, but is attached to three monitors, i.e. one more than the iGPU can handle) and got 622MB with PL9 not running and 1057MB with PL9 running.

PL9 opened on the directory I had been testing, so I simply selected all images and started a 3-copy export after changing the output location to N:\ (an NVMe drive) rather than F:\ (an HDD), and got this “shocking” result:

But as soon as I selected an image, PL9 went into its rendering stage and took a long time (both of the new display options are selected), and this is what happened to the memory usage:

Also, on the 5600G I had made an AI selection but not actually made an edit, so I maxed out ‘ClearView’ in Local Adjustments, copied it to all the images and then got the following for the export, or rather I didn’t get anything!?

It maxed out the GPU processor and spun its wheels. Reducing it to 2 copies had no impact, except that it started with three spinning icons instead of two (or one, then two).

I restarted and reduced the ‘ClearView’ setting to 50, and it still “spins its wheels”.

Basically my test directory on the 5600G is now useless.

@John-M DxO have truly got some work to do. Mixing PL9 AI with exporting isn’t really going to work on a 2060 with a 6GB GPU, and it currently pushes an 8GB graphics card, and that is without any potential changes to denoising. XD2s is a bit “long in the tooth”, isn’t it?

As a final desperate measure, I (eventually) turned off AI, but to turn off AI you effectively increase the memory usage to clear the AI settings!?

It still didn’t work, i.e. exporting was simply “stuck”.

I restarted PL9 yet again, and finally an export without AI started and completed successfully.