I want a tif from a sony a7rv with no bit changes at all for some technical analysis of TV light levels. Are there appropriate settings in dxo so the 10bit values from the sony are exactly represented as taken in the 16bit tif export without any scaling or non-linear transform?

You might want to check out RawDigger. It’s an app that can be licensed to provide different levels of analytical capabilities.

Anyways, data in a raw file is monochromatic. Colour only comes with the conversion to RGB. If you want to see how raw looks as a monochromatic, best-resolution image, try this:

Well, generally this can be a bit of a problem. Most cameras that shoot raw don’t record 16 bits but fewer, and the raw converter, such as Adobe’s, then works inside a 16-bit container. This is probably true for DxO as well, but I can’t be sure. Hence, as soon as you start working with the raw file inside the converter, you are working in a larger container. This does not mean you are losing data per se, but it’s not one-for-one accuracy. Usually it does not matter.

If you convert from raw to 16-bit TIFF, you will probably not get the same data the camera captured, but that’s just how it works.

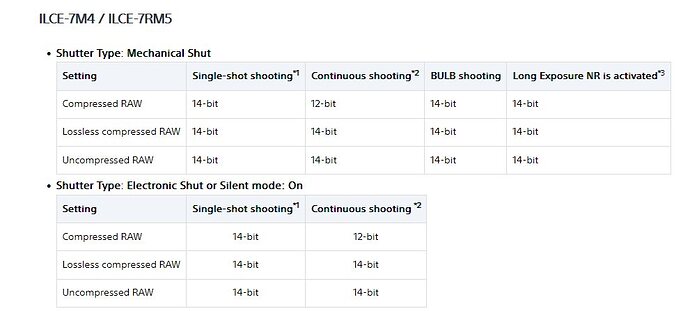

As far as I know there is no way to shoot 10-bit on Sony a7RV

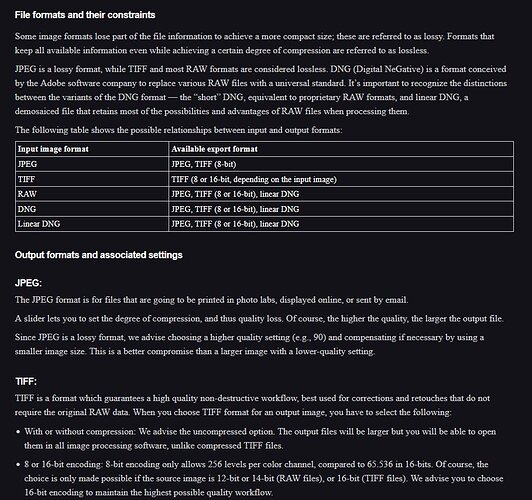

TIFF (8 or 16-bit, depending on the input image)

TIFF is a format which guarantees a high quality non-destructive workflow, best used for corrections and retouches that do not require the original RAW data. When you choose TIFF format for an output image, you have to select the following:

With or without compression: We advise the uncompressed option. The output files will be larger but you will be able to open them in all image processing software, unlike compressed TIFF files.

8 or 16-bit encoding: 8-bit encoding only allows 256 levels per color channel, compared to 65,536 in 16-bit. Of course, the choice is only available if the source image is 12-bit or 14-bit (RAW files), or 16-bit (TIFF files). We advise you to choose 16-bit encoding to maintain the highest possible quality workflow.

I think the Sony a7IV has a lossy compressed format if you want to shoot raw in burst mode at the highest frame rate, and it then drops to 12-bit mode. The lossless raw format is, I think, 14 bits. Raw converters usually work in 16-bit, but I’m not sure if DxO follows the same convention.

I don’t think you can shoot at 10-bit.

As for DXO export options. These are from the manual.

Basically, you can shoot with Sony in 12-bit lossy raw or 14-bit lossless raw. When working with raw files in DxO or Camera Raw, they will probably work in a larger, 16-bit container. I know that is how Adobe works, so I assume DxO does the same. When you export from DxO as TIFF you can choose 8-bit or 16-bit. Choose 16-bit, which will ensure the least loss of data, but it won’t be 16 bits of real raw data, because Sony cameras, like most cameras, do not capture 16-bit raw.

If you really want raw raw and you’re doing some technical analysis you may want to think about uncompressed raw and extracting the bits yourself. Not sure how to do it myself but if it’s really uncompressed you may be able to shoot some test files to try to figure out how the data is structured.

I doubt any tool other than RawDigger is gonna be able to give you the real raw dataset without any kind of attempt to debayer the image.

Monochrome2DNG can, as well as DCRAW, which has sadly been left as is for a while.

I searched for the specs of this Sony, and the bit depth in raw is 14 bit. The 10-bit value refers to video.

A lower bit depth can be stored in a higher bit depth, but the steps will still be those of the lower bit depth. For pure comparison it will not give you higher precision. In editing it will give you some advantages.
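To illustrate the point about step size, here is a minimal Python sketch. It assumes the converter promotes 14-bit values to 16 bits with a plain left shift by two bits (multiply by 4). That is only one possible convention; a real converter may also subtract a black level or rescale differently.

```python
# Sketch: promoting 14-bit samples to a 16-bit container adds no new levels.
# Assumption: the promotion is a plain left shift by 2 bits (multiply by 4).

def promote_14_to_16(value_14bit):
    """Place a 14-bit sample in a 16-bit container by shifting left 2 bits."""
    if not 0 <= value_14bit < 2**14:
        raise ValueError("expected a 14-bit value")
    return value_14bit << 2

promoted = [promote_14_to_16(v) for v in range(2**14)]

distinct_levels = len(set(promoted))   # number of different output values
step = promoted[1] - promoted[0]       # gap between neighbouring levels
largest = max(promoted)                # highest value actually used

print(distinct_levels, step, largest)  # 16384 4 65532
```

The 16-bit file still holds only 16384 distinct levels, spaced 4 apart.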

George

a lot of tools can do this - RPP raw converter, dcraw can dump, MATLAB / OCTAVE can read ( directly or after conversion to /non-linear/ DNG ), etc, etc

Platypus was right. RawDigger ($40) will convert Linear RGB from the Sony to Tiff with 14 bit resolution (16K steps) in a 16bit tiff. I was able to load that into my Maxim/DL astrophotography software and average the frames as desired. What I was trying to do was to compare readings taken of my LG OLED at near black to the readings from my i1Display Pro Plus colorimeter to see if they were similar near-dark (which they are). Thank you all for the advice.


Dcraw or the example programs from libraw seem more suited.

To get your values as they are in the raw file, you need ‘no look’ (a completely linear response), no camera calibration whatsoever, and no white balance (so everything is green).

For example, DxO will default to ‘as shot’ when white balance is disabled, which is absolutely NOT the same as ‘no white balance applied’.

RawTherapee and/or darktable can do it as well.

But you always get things like raw black levels and scaling from 10/12/14 bit to full 16-bit RGB values.
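As a rough illustration of why black levels and scaling stop the exported numbers from matching the raw ones, here is a small Python sketch. `BLACK_LEVEL` and `WHITE_LEVEL` are assumed example values, not figures read from any particular camera file, and the mapping is a generic one, not what DxO or any other converter is known to do.

```python
# Sketch: how black-level subtraction and rescaling change raw numbers.
# BLACK_LEVEL and WHITE_LEVEL are assumed example values, not read from a file.

BLACK_LEVEL = 512      # hypothetical sensor offset for "no light"
WHITE_LEVEL = 16383    # hypothetical saturation value of a 14-bit raw

def to_16bit(raw):
    """Subtract black, normalise to 0..1, then stretch to the 16-bit range."""
    normalised = (raw - BLACK_LEVEL) / (WHITE_LEVEL - BLACK_LEVEL)
    normalised = min(max(normalised, 0.0), 1.0)   # clamp below black / above white
    return round(normalised * 65535)

for raw in (512, 513, 600, 16383):
    print(raw, "->", to_16bit(raw))
```

With these assumptions a raw value of 512 becomes 0 and 16383 becomes 65535, so nothing in the output equals the number the sensor wrote.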

The LibRaw example programs include a tool, ‘4channels’, which extracts the R, G1, G2 and B channels of your raw to separate TIFF files, with the option to do no black-level scaling. I think this also leaves the max values alone. (So a 12-bit recording will yield at most a value of 4095.)

Question back: ARW files are Sony, right? Is there a model and mode that is 10-bit? I only know that they sometimes switch back to 12-bit in certain modes/settings but are 14-bit otherwise.
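The idea of splitting a Bayer mosaic into four planes can be sketched in a few lines of Python. This is not the LibRaw tool’s code, only an illustration, and it assumes an RGGB layout and a tiny made-up mosaic.

```python
# Sketch: split a Bayer mosaic into R, G1, G2 and B planes.
# Assumption: RGGB layout, i.e. each 2x2 tile is
#   R  G1
#   G2 B

def split_rggb(mosaic):
    """Return the four colour planes of an RGGB mosaic given as a list of rows."""
    r  = [row[0::2] for row in mosaic[0::2]]   # even rows, even columns
    g1 = [row[1::2] for row in mosaic[0::2]]   # even rows, odd columns
    g2 = [row[0::2] for row in mosaic[1::2]]   # odd rows, even columns
    b  = [row[1::2] for row in mosaic[1::2]]   # odd rows, odd columns
    return r, g1, g2, b

# Tiny made-up 4x4 mosaic of raw sensor values.
mosaic = [
    [100, 200, 101, 201],
    [210, 300, 211, 301],
    [102, 202, 103, 203],
    [212, 302, 213, 303],
]

r, g1, g2, b = split_rggb(mosaic)
print(r)   # [[100, 101], [102, 103]]
print(b)   # [[300, 301], [302, 303]]
```

Each plane has half the width and half the height of the mosaic, and the values are copied untouched.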

What do you mean with this?

That’s 5400K, if I’m right.

George

Images like this: Highlight recovery - #12 by platypus

> What do you mean with this?

If you look at your raw data without white balancing applied, it looks horrible. Your camera does not record values already at daylight or any other usable white balance. Often, to get close to something resembling ‘daylight’, the red needs to be multiplied by 2 and the blue by 1.5, or thereabouts (of course this differs per sensor).

Open your raw in darktable or RawTherapee and disable everything, including white balance. That is your bit perfect raw file (not even :))
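A minimal Python sketch of that first white-balancing step, using the rough multipliers quoted above. The pixel values are made up and are not from any real camera.

```python
# Sketch: white balancing as per-channel multiplication.
# The multipliers are the rough figures quoted above, not values for any real camera.

WB_MULTIPLIERS = {"r": 2.0, "g": 1.0, "b": 1.5}

def white_balance(rgb):
    """Scale linear, black-subtracted (r, g, b) values by the multipliers."""
    r, g, b = rgb
    return (r * WB_MULTIPLIERS["r"], g * WB_MULTIPLIERS["g"], b * WB_MULTIPLIERS["b"])

# A neutral grey patch as the sensor might record it: green dominates.
raw_grey = (1500, 3000, 2000)   # made-up sensor counts
balanced = white_balance(raw_grey)
print(balanced)   # (3000.0, 3000.0, 3000.0)
```

Before the multiplication the grey patch is strongly green. Afterwards the three channels are equal.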

> That is your bit perfect raw file (not even :))

…as seen in FastRawViewer set to UniWB. Sidenote: If you could set the camera to the Temp/Tint values as seen in FRV’s footer, the histogram would show close to a RAW histogram. You’d have to establish the values with your own camera though, the above is from a Canon EOS 5D.

> Often, to get close to something resembling ‘daylight’, the red needs to be multiplied by 2 and the blue by 1.5, or thereabouts (of course this differs per sensor).

That would mean that the maximal values in RAW for red and blue would be 255/2 and 255/1.5. Otherwise I’ll get clipping after applying this color temp.

George

No, because 1) the ranges in your raw file aren’t 255 or anything like that. They are often 12 or 14 bit (not 8), but every camera has different black levels and white points, even depending on ISO or shooting mode.

And 2) the processing inside raw software isn’t limited like this at all. 99% of the time the processing is in floating point, so that ‘has no limit’.

> the processing is in floating point

From what I heard in the live-streamed presentation, it seems it’s not.

Talking about HDR, they said that this was for now beyond the possibilities of PhotoLab because HDR needs more precision than PhotoLab has.

And HDR needs floating-point precision.

> That would mean that the maximal values in RAW for red and blue would be 255/2 and 255/1.5. Otherwise I’ll get clipping after applying this color temp.

True, even though many more bits are used in actual calculations in PhotoLab.

> processing is in floating point, so that ‘has no limit’.

Not quite. There are limits in floating-point calculations too, even though they can be pushed way further than with integer calculations. Nevertheless, at some point the floating-point number will be converted to a manageable size, and no matter how far the limits were pushed, 50% of max is 50% no matter whether you count toes or stars.

Those who are interested in those multipliers (example) can look them up on dxomark.com.

Anyways, the OP’s question seems to have been answered.

I wasn’t talking about hdr output.

Hdr needs soooo many things to get right. Proper image viewers for one.

Look at the metadata of your raw file: the white balance multipliers are there. That’s the first step of white balancing (it can get pretty complex from there if you start thinking about CIECAM and stuff like that).

Whether a graphics tool internally works with floating point or not is beside the point. This is what a raw file is:

Monochrome light sensor values, in the (linear) colour space of whatever your sensor records, in need of calibration (primaries, camera calibration, matrices), in need of white balancing, etc…

Dcraw can output in ‘raw’ colour space (with no colour space transform), with no white balancing and with no alteration of your data. Then you see how raw your RAW is :).
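For reference, a hedged Python sketch of how such dcraw calls might be put together. The flags are the ones listed in dcraw’s usage text (`-D` document mode without scaling, `-4` linear 16-bit, `-T` TIFF output, `-o 0` raw colour space, `-r` custom white balance multipliers). The file name is hypothetical, and an old dcraw build may not know recent cameras at all.

```python
# Sketch: build (and optionally run) dcraw commands for unprocessed output.
# Flags per dcraw's usage text:
#   -D  document mode without scaling (totally raw, no demosaic)
#   -4  linear 16-bit output
#   -T  write TIFF instead of PPM
#   -o 0          raw colour space (no colour transform)
#   -r 1 1 1 1    custom white balance multipliers, all set to 1
import os
import shutil
import subprocess

def dcraw_totally_raw_command(raw_path):
    """Return the argv for dumping undemosaiced, unscaled sensor values."""
    return ["dcraw", "-D", "-4", "-T", raw_path]

def dcraw_no_wb_command(raw_path):
    """Return the argv for a demosaiced image with no WB and no colour transform."""
    return ["dcraw", "-o", "0", "-r", "1", "1", "1", "1", "-4", "-T", raw_path]

raw_file = "DSC00001.ARW"   # hypothetical file name
for command in (dcraw_totally_raw_command(raw_file), dcraw_no_wb_command(raw_file)):
    print(" ".join(command))
    # Only run it if dcraw is installed and the file actually exists.
    if shutil.which("dcraw") is not None and os.path.exists(raw_file):
        subprocess.run(command, check=False)
```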

LibRaw has a tool, ‘4channels’ (I believe this is only for Bayer sensors), that writes a TIFF of the raw values inside your file for the R, G1, G2 and B channels.

Those are the ‘bits’ in your file.

For anything viewable, there is no ‘bit perfect’.

If you mean you want the output to look just like the in-camera JPEG, use your camera manufacturer’s raw processing software to write a 16-bit TIFF.

Hence the ‘has no limit’. If someone is stuck thinking in 0 - 255, then floating point is most simply described as ‘no limit’.

That the float format loses precision the higher you go, and eventually has a limit, is more the programmer’s way of looking at it :).

On pixls.us, I always describe it as thinking in 0% to 100% (because some don’t even get the 0 to 1.0 range). It’s very much possible for software to have values higher than 100% while processing / editing.

But eventually you have to display the data or write to a file, and then everything needs to be between 0% and 100% again.
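A small Python sketch of that idea. Values are fractions of full scale, the numbers are made up, and the clip-and-quantise step is a generic one rather than what any particular converter does.

```python
# Sketch: intermediate values may exceed 100 %, output may not.

def to_uint16(fraction):
    """Clip a 0..1 fraction and quantise it to a 16-bit integer."""
    clipped = min(max(fraction, 0.0), 1.0)
    return round(clipped * 65535)

pixel = 0.75                 # 75 % of full scale (made-up value)
boosted = pixel * 2.0        # 150 %: fine while editing in floating point
recovered = boosted * 0.5    # pulled back down, nothing was lost

print(boosted, to_uint16(boosted))       # 1.5 65535
print(recovered, to_uint16(recovered))   # 0.75 49151
```

Writing the boosted value straight to a file clips it to the maximum, while pulling it back below 100% first keeps the original level.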

In a way this is even true when writing hdr files , it’s just that 100% has a very different meaning when compared to sdr. Anyway, hdr is still in its infancy when it comes to this (yes, still) so I try to leave it out of the conversation.

In the photography world people also think of the old bracketing and ugly effect for processing that we called hdr before . So enough confusion to go around :).

> No because 1) the ranges in your raw file aren’t 255 or anything like that. Often 12 or 14 bit (not 8) but every camera has different black levels / white points , even depending on iso or shooting mode.

For 12 bits it will be (2^12)/2. For 14 bits (2^14)/2. For floating point 1/2.
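The arithmetic can be checked with a short Python sketch. It follows the convention used above (full scale taken as 2^12, 2^14 or 1.0) and uses the example multipliers of 2 and 1.5 from earlier in the thread.

```python
# Sketch: largest input value that a multiplier can scale without exceeding full scale.
# Full scale follows the convention above: 2**12, 2**14, or 1.0 for floating point.

def max_unclipped(full_scale, multiplier):
    """Return the highest value that stays within full scale after multiplying."""
    return full_scale / multiplier

for label, full_scale in (("12-bit", 2**12), ("14-bit", 2**14), ("float", 1.0)):
    red_limit = max_unclipped(full_scale, 2.0)    # red multiplied by 2
    blue_limit = max_unclipped(full_scale, 1.5)   # blue multiplied by 1.5
    print(label, red_limit, blue_limit)
```

For the multiplier of 2 this gives 2048 for 12 bits, 8192 for 14 bits and 0.5 for floating point.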

You are just showing the uselessness of that raw data. Raw data is input/sensor based. As a photographer I’m output oriented: monitor/printer.

George