Hmm, be aware that the histogram (and blinkies) displayed by most cameras are NOT taken from the raw data but from the JPEG preview, which depends on the picture style, the WB and the colour space that you set in your camera. Over-exposure can happen in one or more of the R, G or B channels, resulting in colours that can be out of gamut or simply blown (usually in the highlights).

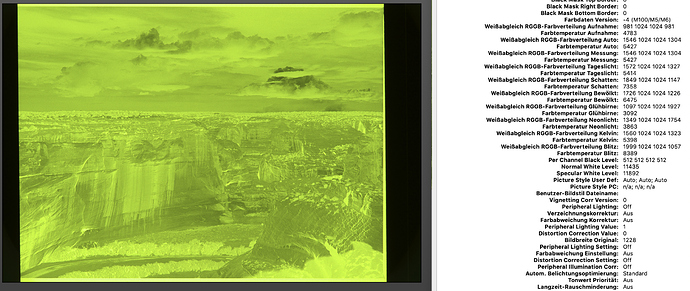
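To make the WB dependence concrete, here is a rough Python sketch. It assumes a heavily simplified preview model in which each channel is just the raw value times its WB multiplier (picture style, tone curve and colour space conversion are ignored). The function name `falsely_clipped_channels` and the multiplier values are made up for illustration and do not come from any particular camera.

```python
def falsely_clipped_channels(raw_rgb, wb_multipliers, white_level=1.0):
    """Return the channels that clip in the WB-scaled preview
    although the raw value itself is still below white_level.

    Simplified model (an assumption): preview = raw * multiplier.
    """
    names = ("r", "g", "b")
    result = []
    for name, value, mult in zip(names, raw_rgb, wb_multipliers):
        raw_ok = value < white_level                  # photosite not saturated
        preview_clipped = value * mult >= white_level  # histogram shows clipping
        if raw_ok and preview_clipped:
            result.append(name)
    return result


# Hypothetical daylight-like multipliers versus UniWB (all equal to 1).
daylight = (2.0, 1.0, 1.5)
uniwb = (1.0, 1.0, 1.0)

# One bright pixel, raw values normalised to 0..1, none of them saturated.
pixel = (0.6, 0.9, 0.5)

print(falsely_clipped_channels(pixel, daylight))  # ['r']
print(falsely_clipped_channels(pixel, uniwb))     # []
```

In this toy model the red channel looks blown in the preview only because of the multiplier; with equal multipliers the preview follows the raw values.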

Close to the best way to make sure that your sensor’s photosites are not flooded is to use UniWB, a custom white balance setting that gives outlandishly green JPEGs and a histogram that reflects the raw data, preferably with equal multipliers for all channels. Check the WB multipliers (not quite perfect here) below.
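If you want to put a number on "not quite perfect", something like the following Python sketch could help. It assumes you have already read the three WB multipliers from your raw converter or metadata tool. The helper names, the example values and the 5% tolerance are my own arbitrary choices, not a standard.

```python
def uniwb_deviation(multipliers):
    """Largest relative deviation of the red and blue multipliers
    from the green one (0.0 would be a perfect UniWB)."""
    r, g, b = multipliers
    return max(abs(r / g - 1.0), abs(b / g - 1.0))


def is_near_uniwb(multipliers, tolerance=0.05):
    """True if all multipliers are within `tolerance` of each other,
    measured relative to green. The 5% default is an arbitrary choice."""
    return uniwb_deviation(multipliers) <= tolerance


# Hypothetical readings: a near-UniWB setting and a typical daylight WB.
print(is_near_uniwb((1.02, 1.0, 0.98)))  # True
print(is_near_uniwb((2.0, 1.0, 1.5)))    # False
```

The closer the deviation is to zero, the more closely the in-camera histogram should track the raw channels.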

Ways to get UniWB can be found here: GUILLERMO LUIJK >> TUTORIALS >> UNIWB. MAKE CAMERA DISPLAY RELIABLE, or wherever you find it yourself.