If you are talking about the image preview, then FRV has options to tune sharpening and to flip between different levels of it, which you can even assign to a hot key.

It’s because FRV is actually a demosaicing tool based on LibRAW LLC, a free open source RAW engine. So, it will give the same results as any RAW converter based on that engine. FRV is just a graphical user interface to LibRAW, collecting the parameters specified by the user and used by LibRAW to produce a readable image. It appears to display images faster than a more sophisticated RAW converter just because it’s doing less. On the FRV product page, the assertion “Dispelling a Myth: Viewing RAW is Impossible” is somewhat misleading. This is not a myth: you can’t display a RAW image without demosaicing it first.

That said, culling with FRV instead of DPL might actually be faster. Maybe DxO could implement a faster “culling mode” in DPL by limiting some of the processing done when browsing images?

You’re a DSLR user? Please don’t feel offended, but using your camera only “occasionally” for one week going on safari will not cure the sharpness problems. I don’t want to extrapolate from my experience with long focal lengths to yours. But some of the sharpness problems I had came from a lack of experience, a lack of understanding of the AF and, not least, not setting up the AF microadjustments properly. Which is a pain to do, especially with long lenses: few tripods are sturdy enough, and finding a focus target close to ∞ without too much haze, dust and moisture in the air in between is another challenge. Unfortunately software can’t compensate for my shortcomings, and there’s no shortcut to learning how to handle AF.

of course you can - just look @ RawDigger from the same authors ( of LibRaw and FastRawViewer ) … it does not do demosaicking

it does it faster because of a lot of handcrafted GPU code used to do this …

LibRaw is there to read data from raw files, it is not intended for demosaicking or for doing color transforms, etc, which are the major functions of raw converters … so NO - it will NOT give the same result, because different raw converters will use their own demosaicking, color transform, etc, etc

“… Additionally, the LibRaw library offers some basic RAW conversion, intended for cases when such conversion is not the main function of the LibRaw-using application (for example: a viewer for 500+ graphic file formats, including RAW). These methods are inherited from the Dave Coffin’s dcraw.c utility (see below the “Project history” section); their further development is not currently planned, because we do not consider production-quality rendering to be in the scope of LibRaw’s functionality (the methods are retained for compatibility with prior versions and for rapid-fire testing of RAW support and other aspects)…”

So, I’m curious. How do you display an image from RAW data when the information from each individual photosite (which is only a luminance value) has not gone through the interpolation process named “demosaicing” and been converted to an RGB value?

@JoJu - I have no problems with sharpness in my pictures. I wrote that I have sharpness problems when displaying in FRV

please read again what @Pat91 wrote

He means - on LibRAW engine I believe

is not the same as

just like with demosaicking - you simply convert a DN from a sensel ( individual photosite, no demosaick ) to an arbitrary “red”, “blue”, “green” or “grey” or whatever other color, using some common-sense approach ( for example you might not want to display a sensel behind a CFA filter which lets in mostly the “green” part of the spectrum as “blue” ) … are you curious about the word “arbitrary” ? input devices do not have a gamut, whatever they registered are not colors, and there is no single right way to execute a color transform ( so they are all “arbitrary”, granted with some common-sense approach of course - for example using various targets, etc… but you always end up with different camera profiles - different color transforms - because there is NO single right way to do it… in principle that is - consider a “capture” metameric failure resulting from a specific choice of CFA filters, for example - the assignment of a color to a DN vector is, as you hopefully understand, a 100% arbitrary choice because your camera was not able to discern between two real-world colors ) - and it does not matter whether you demosaicked the data or not - consider a sensel behind a “green” CFA filter as a triplet of DNs with 2 numbers = 0 and one number, in the “green” position, being the DN from the raw file, and so on

so you execute color transforms on vectors like (0,DN-“green”, 0), (DN-“red”,0, 0), (0,0, DN-“blue”)… does it make it more clear ? instead of operating on vectors like (DN-“red”-post demosaick,DN-“green”- averaged, post-demosaick,DN-“blue”-post demosaick)

DN stands for “digital number”, reflecting whatever data we have in the raw file, written by the camera’s firmware, for each sensel
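To make the zero-padded triplet idea concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the 4x4 mosaic, its RGGB layout and the DN values are made up, and the names `RAW`, `CFA` and `sparse_triplets` are hypothetical, not anything from LibRaw, RawDigger or FRV. It only shows that every sensel can get three numbers without any interpolation from its neighbours.

```python
# Hypothetical 4x4 raw mosaic with an assumed RGGB Bayer layout.
# The DN values are invented, not taken from any real camera.
RAW = [
    [100, 200, 110, 210],
    [190,  50, 180,  60],
    [120, 220, 130, 230],
    [170,  70, 160,  80],
]

# Channel index (0 = "red", 1 = "green", 2 = "blue") for each
# position inside the repeating 2x2 RGGB tile.
CFA = [[0, 1],
       [1, 2]]


def sparse_triplets(raw, cfa=CFA):
    """Put each DN into its own CFA channel and leave the other two at 0.

    No neighbouring sensel is consulted, so this is not demosaicking.
    """
    image = []
    for y, row in enumerate(raw):
        out_row = []
        for x, dn in enumerate(row):
            triplet = [0, 0, 0]
            triplet[cfa[y % 2][x % 2]] = dn
            out_row.append(tuple(triplet))
        image.append(out_row)
    return image


image = sparse_triplets(RAW)
```

A color transform would then be applied to each of these triplets separately; which transform to use is a different question and is not shown here.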

yes, different raw converters using the same library to extract data from raw files ( that is what libraw is for… NOT for raw conversion, but to let other programs read the data from raw files encoded in various raw file formats ) will output different results because they will use different demosaicking, different color transforms, etc, etc…

reading the data is just a preliminary part of “raw conversion”, which actually starts after the libraw code is done in a raw converter

So it’s just demosaicing with a different algorithm.

there is NO demosaicking and that is the point - you do NOT need any demosaicking to execute a color transform and map raw data from one sensel ( one number, DN ) → color ( 3 numbers, “color” here as coordinates in some proper color space, with gamut, etc )… for example I can map ONE digital number straight to Y (normalized to the range) in CIE XYZ and assign X & Z to 0.0 … I am not even pretending that I am mapping 3 numbers (DN) to 3 numbers (coordinates)
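A minimal sketch of that last mapping, in Python. The function name `dn_to_xyz` and the default black and white levels are assumptions for illustration (a 14-bit range with zero black level); real cameras report their own levels in the raw file.

```python
def dn_to_xyz(dn, black_level=0, white_level=16383):
    """Map ONE digital number to CIE XYZ as (0.0, Y, 0.0).

    Y is the DN normalized to 0..1 between the assumed black and
    white levels; X and Z are simply set to 0.0. Only this one
    sensel's value is used, so no demosaicking is involved.
    """
    y = (dn - black_level) / (white_level - black_level)
    y = min(1.0, max(0.0, y))  # clip values outside the assumed range
    return (0.0, y, 0.0)
```

For example, `dn_to_xyz(16383)` gives full-scale Y with X and Z at zero. The result is not a "good" color, which is the point: it is still a valid set of coordinates in a properly defined color space, obtained from a single sensel.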

It seems that I never understood what they said here :

wikipedia is written by whoever wants to write something and has time to do this … demosai(c/k,ck)(ing) and color transform are 2 separate stages … starting with understanding that input devices do not produce colors … a color transform on data from the input device produces colors ( here colors = coordinates in a properly defined color space )… “incomplete color samples output” from that text is about the incomplete sampling, spectrum-wise, of the incoming illumination by a camera sensor behind a CFA, because of those CFA filters… to attempt a “better” color transform that produces colors closer to reality ( = eye + brain ) from the recorded data we need to combine samples from several sensels ( so that at each spatial data point within the image frame where we are assigning color coordinates we have at least a guesstimate for the missing data )… but w/o demosaick we can still assign colors and still get an image ( one in which you do in fact recognize what is there, even if the colors assigned to every sensel are not “ideal” - but we are not talking about what is “ideal” or “closer” to reality … )

Agreed. Wikipedia can often be useful as a starting point when researching a topic, or for additional context. However, it generally should not be used as the primary or only research source when accuracy is the goal.

Mark

i did too, but XnViewMP generated XMP files with an old/wrong file type, i believe, which didn’t work with DxOPL’s XMP reading/updating. Have you noticed this?

this is my first culling attribute. i now use the color tags for burst sets and the ratings as follows: 5 stars for extra good (can look very good after editing) and 1 or 2 for probably not worth editing. The side by side is a bit cumbersome / not intuitive. But it is a very good raw file previewer and it generates my XMP file. (i use FastStone Image Viewer for side-by-side elimination; it can be used for raw also.)

(i often place the OOC JPEGs immediately in a subfolder and leave only the unique OOC JPEGs in the raw folder. Those OOC JPEGs are only for previewing on my smart TV directly from my camera.)

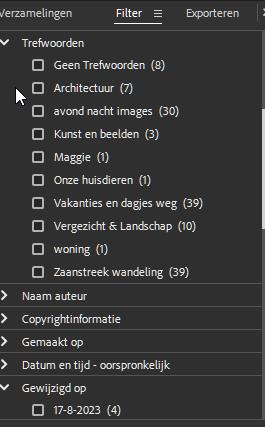

After the initial, first culling i open Bridge (Adobe’s free DAM-ish software) to add templates of ICC entries and add keywords. (i don’t like the UI of DxOPL’s keyword editor)

( and cull some more at second/third view)

From this point DxOPL is taking over.

Add some Google GPS if needed and do a manual XMP update push (not automatic, because i am afraid that when something goes wrong the whole image database is corrupted and all my XMPs are bin worthy). So i only update those i want.

If you are used to using LR, i suppose most of this can be done in its library.

Photo Mechanic seems to be very good but expensive.

for library searching DxOPL is better than Bridge, for instance: Bridge only searches one folder, so you can’t select a tree of folders

What is very interesting inside Bridge is that you can create a project which automatically opens the application you point it to, in this case DxOPL, and this creates a project inside DxOPL. Handy for cutting large amounts into bite-sized pieces.

Use Bridge advanced search (upper right) and you can search the entire computer.

Criteria → Keywords