PL8 owner here, trialing PL9 and testing out the AI masks. Sometimes an AI mask looks fine in the real-time preview during the first few seconds while I’m creating it, but the final rendered mask then has a thick, jagged, semi-transparent halo added around the subject that makes it totally unusable. I have submitted a support request to DxO with the original RAW and the attached screenshot. Is anyone else getting this?

Can you give us another example of the same effect? I just want to rule out my suspicion that those three shapes are part of the aquarium in the background.

I have run a few tests myself to see whether the masking behaves differently with regard to halos when using the predefined AI masks versus freehand selection. I also compared how the mask looks while hovering over the subject with how the final mask looks.

This shows “freehand masking” while hovering over the subject before selecting it. As you can see, it differs clearly from the final mask.

This shows the final mask with the Freehand method.

This shows how the mask is displayed with the premade AI mask for “Animal” before it is applied. It looks exactly like the one above showing the “Freehand” variant before the selection is made. As you can see, it differs clearly from the final mask here too.

The premade AI mask for “Animal” applied. I think it looks identical to the “Freehand” mask above after that selection has been confirmed with a mouse click.

I think this example shows how good it really is. It handles the edges surprisingly well, especially the fine hair.

Sometimes it is better to use the brushes when these types of masks fail, since PhotoLab lacks a function like “Refine Mask” in Capture One.

I have seen, and learned to like, some of the recommendations on YouTube for handling the area where the subject and the background meet.

Many users seem to have adopted the approach of first masking the “Subject” or “Animal” one way or another in pictures like this. They then copy that mask and invert it, which gives a perfect edge between the two masks. That is often a good way to avoid problems at the edge between the subject and the background.
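As a rough illustration of why the copy-and-invert trick leaves no seam: if a mask is treated as per-pixel opacity between 0 and 1, the inverted copy is 1 minus that opacity, so the two masks always add up to exactly full coverage. The sketch below models this with plain Python lists; the representation and the function names `invert_mask` and `blend` are assumptions for illustration, not how PhotoLab actually stores or applies masks.

```python
# Hypothetical model: a mask is a 2-D list of per-pixel opacities in [0.0, 1.0].
# This is an illustration only, not PhotoLab's internal mask format.

def invert_mask(mask):
    """Return the complement of a mask: 1 - opacity at every pixel."""
    return [[1.0 - v for v in row] for row in mask]

def blend(subject_value, background_value, mask):
    """Apply one adjustment through the mask and another through its inverse."""
    inverted = invert_mask(mask)
    return [
        [m * subject_value + i * background_value for m, i in zip(row, inv_row)]
        for row, inv_row in zip(mask, inverted)
    ]

# One row across a soft, feathered edge: background (0.0) to subject (1.0).
subject = [[0.0, 0.25, 0.5, 0.75, 1.0]]
background = invert_mask(subject)

# The two masks add up to exactly 1 at every pixel: no gap, no overlap.
coverage = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(subject, background)]
print(coverage)  # [[1.0, 1.0, 1.0, 1.0, 1.0]]
```

Two independently generated masks carry no such guarantee, so their soft edges can leave a thin band that gets both adjustments or neither.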

I must say I haven’t seen many halo problems in my pictures so far, except when masking skies with AI, so DXO still has some work to do refining the Sky mask.

This is how it looks when just hovering over the “Sky” option in the menu.

The final Sky mask leaves a lot to be desired.

I can also say that I still prefer the method I’m used to, the “Freehand” click-select method, over the premade AI presets, because it gives far better export performance. When I tested this earlier, exporting was about five times faster than with the other method, and the ratio seems to be the same even with the latest driver for my GPU.

When I exported a picture of the same motif, masked with the Freehand method, with high-resolution previews and Deep Prime 3 rendering on and the same kind of edits, it took 15 seconds, which is what it normally takes. That is a very good reason for me NOT to use the premade AI presets, even though they work now.

Working as designed.

The “while you’re creating it” is a fast approximation of what will be selected. Once you select it, a more “accurate” mask is created, which takes a bit more time. If the accurate mask were always shown, the performance would be terrible.

As for the “extra bits” in the mask, that’s just part of how AI is working at this stage. The same happens in Lightroom. Try not to think of the masks as “magic one click” but rather as “an excellent start point”. If you do get the perfect mask in one click, that’s a bonus.

I regularly select birds in my photos and have to paint out some of the stuff they’re perched on. Also sometimes I need to brush in bits that are high contrast or similar to the background.

Yes, I guess that is a good summary of what is happening. In general, I think the masking precision is really good almost all the time, and there is normally very little to adjust, even if a “Refine Mask” tool like the one in Capture One would be good to have too.

What do you think of the ghosted background in the thread owner’s pictures? I, at least, find it hard to see that as halos.

DXO responded to my support request and acknowledged the issue. They asked me for the original and additional examples, so I hope we’ll see a fix soon.

@zkarj I have no issue with the two-stage approach. My issue is that it doesn’t work as designed and fails in this example. The second (“accurate”) stage totally ruins the output of the first (“fast approximation”) stage, which was actually pretty good to begin with.

More concretely, my hypothesis is that in the first stage, the AI model performs a quick segmentation of the target subject, while the second stage is a separate algorithm that tries to refine it using some feathering/diffusion process.

In my photo example, the latter process went haywire and diffused the mask very far. The only thing that stopped it was a protective limiting mask that is basically a heavily-dilated version of the original rough mask from the first stage. That’s why you can clearly see the sharp jagged boundaries of the output mask at a large yet uniform distance from the actual subjects.

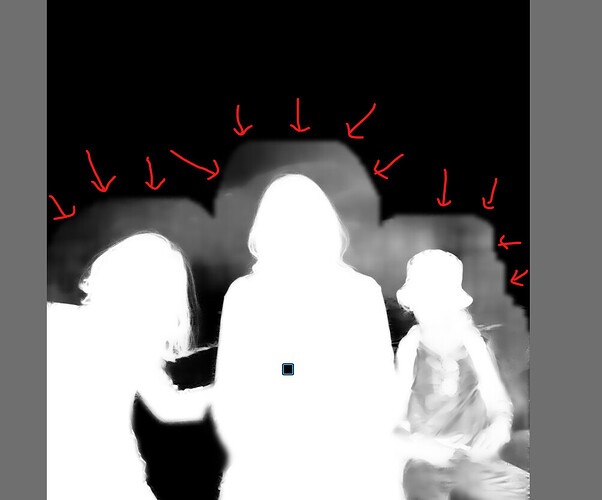
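To make that two-stage hypothesis a little more concrete, here is a toy 1-D model in Python. It assumes stage 1 yields a rough binary mask, stage 2 spreads it with a simple diffusion step, and a dilated copy of the rough mask caps how far the spread may go. The function names `dilate` and `refine`, the diffusion scheme, and all parameters are made up for illustration; nobody outside DxO knows what the real refinement does.

```python
# Toy 1-D model of the hypothesis: stage 1 gives a rough subject mask,
# stage 2 "refines" it by diffusion, and a dilated copy of the rough mask
# caps how far the diffusion may spread. All names are hypothetical; this
# is an illustration, not DxO's actual algorithm.

def dilate(mask, radius):
    """Binary dilation: a pixel is on if any pixel within `radius` is on."""
    n = len(mask)
    return [
        1.0 if any(mask[j] > 0 for j in range(max(0, i - radius), min(n, i + radius + 1))) else 0.0
        for i in range(n)
    ]

def refine(rough, radius, steps, rate=0.25):
    """Diffuse the rough mask, clip it to the dilated limit, keep the subject at 1."""
    limit = dilate(rough, radius)
    m = list(rough)
    n = len(m)
    for _ in range(steps):
        # Explicit finite-difference diffusion step (stable for rate <= 0.5).
        nxt = []
        for i in range(n):
            left = m[i - 1] if i > 0 else m[i]
            right = m[i + 1] if i < n - 1 else m[i]
            nxt.append(m[i] + rate * (left - 2 * m[i] + right))
        # The protective limit zeroes everything outside the dilated region,
        # while the original subject pixels stay fully selected.
        m = [max(r, v * lim) for r, v, lim in zip(rough, nxt, limit)]
    return m

# Subject occupies pixels 10..14; the limit extends 4 pixels on each side.
rough = [0.0] * 10 + [1.0] * 5 + [0.0] * 10
halo = refine(rough, radius=4, steps=500)
print([round(v, 2) for v in halo])
# [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2, 0.4, 0.6, 0.8, 1.0, 1.0, 1.0, 1.0, 1.0,
#  0.8, 0.6, 0.4, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

The halo fades out over the dilated band and then drops to zero within a single pixel, which is the kind of hard boundary at a uniform distance from the subject described above. Real masks are 2-D, so a dilation of a rough, blocky first-stage mask would also carry its jaggedness into that outer edge.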

@Stenis I won’t post the original image without overlay due to privacy concerns. I can assure you that the halo is neither a part of the subjects nor the aquarium. It is a pure artifact. Here is the B&W mask display that shows it more clearly. I hope I put enough arrows so you’ll know what I’m talking about.

BTW, this issue happens regardless of the chosen AI masking method: area, selection, or predefined (“People” in this example).

I found that this issue mostly occurs in low-detail or low-contrast situations, which makes sense, as the feathering/diffusion process has less information to hold onto. In most other cases, AI masking works fine.

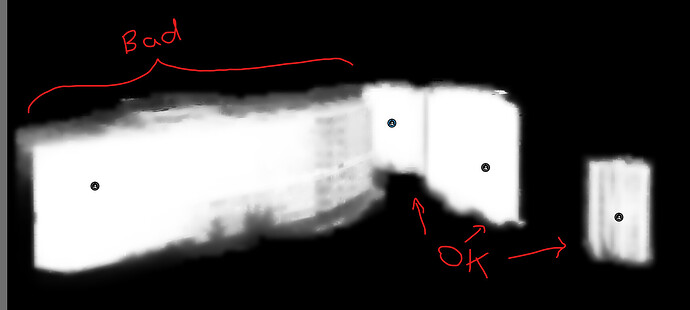

Here is another example. This is a strongly underexposed photo that I pushed in PL. I applied AI masks to the four foreground buildings as well as a “Sky” mask. Interestingly, the leftmost building suffers from the thick halo issue, whereas the other three are more or less OK. The sky also suffers from this issue in the right part of the image, where the distant hills have low contrast:

Noam, it is OK, I never asked for the “original”. I just asked for another example.

Thanks for the other examples. Now we can all see and understand.

Your issues are really disturbing and I guess hard to handle.

I guess we all have to be observant when shooting pictures like that. Is there any chance this could be related to the type of GPU or something? What did DXO respond?

Good news!

DXO support confirmed that my GPU was working properly. They acknowledged the issue and informed me that a fix will be released soon!