Yes, they all worked, but I was mostly interested in the one you wrote subsequently, which I commented on, showing the report in action.

I appreciate the clarification.

Well, for a few years now I have mentioned that folks could contact me about beta testing it, but only a few responded and, unfortunately, they weren’t very forthcoming with feedback. In fact, I have found most bugs myself through daily use.

That is because Windows doesn’t provide the amazing tools that Apple builds into the operating system and makes accessible through its comprehensive (and free) development tools.

Oh and, after many years of using and developing for Windows, finishing with XP, I wouldn’t use a Windows computer if you gave it to me.

Since I wanted to handle metadata produced by other software as well as by PhotoLab, I based my app on either XMP sidecar files or the RAW files themselves, ensuring that any work it did was available in other software as well as in PhotoLab.

Since I leverage Apple’s Spotlight metadata database, which the OS keeps constantly updated, there is no need for a third-party database or proprietary sidecars.

Well, a long time ago, I did offer DxO the possibility of extending and incorporating my work, but they weren’t too keen and, more than likely, don’t have the resources to port it to Windows.

One suggestion that I did make, both in these forums and privately, was to separate out the browser into a “plugin”, but, once again, “no answer came the stern reply”.

As a long-time macOS and iOS developer, “hacking” the PL database was never going to be an option for me, since I know that (on macOS/iOS) the database structure is auto-generated from an object-oriented model created with the Core Data designer that is part of Xcode. Thus, to change the RDBMS structure, you have to change the object model and pass it through an ORM (object-relational mapping).
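Because the structure is generated by Core Data, the table and column names are machine-generated: `Z_PK` is the primary key, and join tables such as `Z_8KEYWORDS` (used by the SQL scripts later in this thread) embed entity numbers. My assumption, not something confirmed here, is that those numbers could change if the object model changes. A minimal Python `sqlite3` sketch that lists tables from SQLite’s own `sqlite_master` catalog and picks out names of that shape; `find_join_tables` and the two-table in-memory schema are hypothetical stand-ins, not PhotoLab’s actual schema.

```python
import sqlite3


def list_tables(conn: sqlite3.Connection) -> list[str]:
    """Return all table names from SQLite's built-in schema catalog."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    )
    return [name for (name,) in rows]


def find_join_tables(conn: sqlite3.Connection, relationship: str) -> list[str]:
    """Hypothetical helper: find Core Data-style join tables named
    Z_<entity number><RELATIONSHIP>, e.g. Z_8KEYWORDS."""
    suffix = relationship.upper()
    matches = []
    for name in list_tables(conn):
        number = name[2:len(name) - len(suffix)]
        if name.startswith("Z_") and name.endswith(suffix) and number.isdigit():
            matches.append(name)
    return matches


# Tiny in-memory stand-in; a real Core Data store has many more tables/columns.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE ZDOPKEYWORD (Z_PK INTEGER PRIMARY KEY, ZTITLE TEXT, ZPARENT INTEGER);
    CREATE TABLE Z_8KEYWORDS (Z_8ITEMS INTEGER, Z_10KEYWORDS INTEGER);
""")
print(find_join_tables(conn, "keywords"))  # ['Z_8KEYWORDS']
```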

Of course, DxO could replicate the ORM for Windows, but that is no easy task, hence I can understand their reticence (so far, my project contains around 34,000 lines of code).

And, to cap it all, Microsoft does not provide the same, easily accessible, metadata management and search engine that Apple has had for many years.

As it is, someone using my software can take a RAW file with embedded metadata from one machine to another and all that metadata travels with it. And, if folks are not happy with embedding metadata in RAW files, then they can opt for using XMP sidecars instead. Either way, there is no need to mess around with reverse engineering DxO’s database.
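For illustration, here is a minimal sketch of reading keywords back out of an XMP sidecar with Python’s standard `xml.etree.ElementTree`. It assumes the common `dc:subject` (flat keywords) and `lr:hierarchicalSubject` (pipe-separated paths) properties; which properties a given app actually writes is an assumption to check against your own sidecars, and `read_xmp_keywords` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Namespaces commonly used for keywords in XMP sidecars.
NS = {
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "dc": "http://purl.org/dc/elements/1.1/",
    "lr": "http://ns.adobe.com/lightroom/1.0/",
}


def read_xmp_keywords(xmp_text: str) -> tuple[list[str], list[str]]:
    """Return (flat keywords, pipe-separated hierarchical keywords)."""
    root = ET.fromstring(xmp_text)
    flat = [li.text for li in root.iterfind(".//dc:subject/rdf:Bag/rdf:li", NS) if li.text]
    hierarchical = [
        li.text
        for li in root.iterfind(".//lr:hierarchicalSubject/rdf:Bag/rdf:li", NS)
        if li.text
    ]
    return flat, hierarchical


SAMPLE_XMP = """<x:xmpmeta xmlns:x="adobe:ns:meta/">
 <rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">
  <rdf:Description rdf:about=""
      xmlns:dc="http://purl.org/dc/elements/1.1/"
      xmlns:lr="http://ns.adobe.com/lightroom/1.0/">
   <dc:subject><rdf:Bag><rdf:li>Paris</rdf:li><rdf:li>Places</rdf:li></rdf:Bag></dc:subject>
   <lr:hierarchicalSubject><rdf:Bag>
     <rdf:li>Places|France|Paris</rdf:li>
   </rdf:Bag></lr:hierarchicalSubject>
  </rdf:Description>
 </rdf:RDF>
</x:xmpmeta>"""

flat, hierarchical = read_xmp_keywords(SAMPLE_XMP)
print(flat)          # ['Paris', 'Places']
print(hierarchical)  # ['Places|France|Paris']
```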

No, as I don’t consider it polite to blatantly advertise, especially as I am still seeking beta testers rather than marketing it.

My point is that I’d rather not have you discuss your tool here. I don’t see a problem in posting a thread asking for beta testers, which would be more polite than hijacking other people’s threads.

Here are the Mac versions.

Get the Keyword Structure

PhotoLab has no method of importing or exporting the keyword structure. The tools below only export your existing structure. I’ll give you three variants.

Version 1: Get the basic structure only

```sql
/*
 * Create a table of all keywords. Every keyword is written using a
 * full path. The results are in alphabetical order.
 */
WITH RECURSIVE
/*
 * Create a table that matches keyword IDs to the full path of the
 * keyword it is associated with.
 */
fullKeywordPath AS (
  SELECT Z_PK, ZTITLE, ZPARENT, ZTITLE AS path
  FROM ZDOPKEYWORD
  WHERE ZPARENT IS NULL
  UNION ALL
  SELECT ZDOPKEYWORD.Z_PK, ZDOPKEYWORD.ZTITLE, ZDOPKEYWORD.ZPARENT, fullKeywordPath.path || '|' || ZDOPKEYWORD.ZTITLE
  FROM ZDOPKEYWORD, fullKeywordPath
  WHERE ZDOPKEYWORD.ZPARENT = fullKeywordPath.Z_PK
)
SELECT path FROM fullKeywordPath ORDER BY path
```
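To try the query without touching a real database, a minimal sketch runs it with Python’s standard `sqlite3` module against a tiny in-memory stand-in that has only the three `ZDOPKEYWORD` columns the query uses (hypothetical data, not PhotoLab’s full schema). The anchor member picks the top-level keywords (`ZPARENT IS NULL`), and the recursive member appends each child’s title to its parent’s path with `|`.

```python
import sqlite3

# Version 1 of the keyword-structure query, compacted slightly with aliases.
KEYWORD_PATHS_SQL = """
WITH RECURSIVE fullKeywordPath AS (
  SELECT Z_PK, ZTITLE, ZPARENT, ZTITLE AS path
  FROM ZDOPKEYWORD
  WHERE ZPARENT IS NULL                              -- anchor: top-level keywords
  UNION ALL
  SELECT k.Z_PK, k.ZTITLE, k.ZPARENT, p.path || '|' || k.ZTITLE
  FROM ZDOPKEYWORD AS k
  JOIN fullKeywordPath AS p ON k.ZPARENT = p.Z_PK    -- recurse: children of known rows
)
SELECT path FROM fullKeywordPath ORDER BY path
"""


def make_mock_db() -> sqlite3.Connection:
    """Hypothetical stand-in containing only the columns the query uses."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE ZDOPKEYWORD (Z_PK INTEGER PRIMARY KEY, ZTITLE TEXT, ZPARENT INTEGER);
        INSERT INTO ZDOPKEYWORD VALUES
            (1, 'Places', NULL), (2, 'France', 1), (3, 'Paris', 2), (4, 'People', NULL);
    """)
    return conn


def keyword_paths(conn: sqlite3.Connection) -> list[str]:
    return [path for (path,) in conn.execute(KEYWORD_PATHS_SQL)]


conn = make_mock_db()
for path in keyword_paths(conn):
    print(path)
# People
# Places
# Places|France
# Places|France|Paris
```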

Version 2: Get the basic structure and counts

This version adds an image count to each keyword. The count should match what you see when you view keywords in PhotoLab. It runs a lot slower than the version above.

```sql
/*
 * Create a table of all keywords. Every keyword is written using a
 * full path. The results are in alphabetical order. Each path is
 * followed by a count of the number of items associated with that
 * keyword. Getting the count slows the query a bit.
 */
WITH RECURSIVE
/*
 * Create a table that matches keyword IDs to the full path of the
 * keyword it is associated with.
 */
fullKeywordPath AS (
  SELECT Z_PK, ZTITLE, ZPARENT, ZTITLE AS path
  FROM ZDOPKEYWORD
  WHERE ZPARENT IS NULL
  UNION ALL
  SELECT ZDOPKEYWORD.Z_PK, ZDOPKEYWORD.ZTITLE, ZDOPKEYWORD.ZPARENT, fullKeywordPath.path || '|' || ZDOPKEYWORD.ZTITLE
  FROM ZDOPKEYWORD, fullKeywordPath
  WHERE ZDOPKEYWORD.ZPARENT = fullKeywordPath.Z_PK
)
SELECT
  path,
  ( SELECT COUNT(*) FROM Z_8KEYWORDS WHERE Z_10KEYWORDS = fullKeywordPath.Z_PK ) AS count
FROM fullKeywordPath
ORDER BY path
```
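Since the correlated subquery runs once per keyword, one possible alternative (my suggestion, not part of the original post, and not benchmarked on a real PhotoLab database) is a single `LEFT JOIN` with `GROUP BY`. The sketch below runs both forms with Python’s `sqlite3` against a hypothetical in-memory stand-in and checks that they return the same counts, including 0 for a keyword with no items.

```python
import sqlite3

KEYWORD_CTE = """
WITH RECURSIVE fullKeywordPath AS (
  SELECT Z_PK, ZTITLE, ZPARENT, ZTITLE AS path
  FROM ZDOPKEYWORD WHERE ZPARENT IS NULL
  UNION ALL
  SELECT k.Z_PK, k.ZTITLE, k.ZPARENT, p.path || '|' || k.ZTITLE
  FROM ZDOPKEYWORD AS k JOIN fullKeywordPath AS p ON k.ZPARENT = p.Z_PK
)
"""

# Version 2 as posted: one correlated COUNT(*) subquery per keyword row.
CORRELATED_SQL = KEYWORD_CTE + """
SELECT path,
       (SELECT COUNT(*) FROM Z_8KEYWORDS WHERE Z_10KEYWORDS = fullKeywordPath.Z_PK) AS count
FROM fullKeywordPath
ORDER BY path
"""

# Alternative sketch: one LEFT JOIN + GROUP BY. COUNT(column) ignores the NULLs
# produced for keywords with no items, so those keywords get 0.
GROUPED_SQL = KEYWORD_CTE + """
SELECT f.path, COUNT(j.Z_10KEYWORDS) AS count
FROM fullKeywordPath AS f
LEFT JOIN Z_8KEYWORDS AS j ON j.Z_10KEYWORDS = f.Z_PK
GROUP BY f.Z_PK, f.path
ORDER BY f.path
"""

conn = sqlite3.connect(":memory:")  # hypothetical stand-in data
conn.executescript("""
    CREATE TABLE ZDOPKEYWORD (Z_PK INTEGER PRIMARY KEY, ZTITLE TEXT, ZPARENT INTEGER);
    CREATE TABLE Z_8KEYWORDS (Z_8ITEMS INTEGER, Z_10KEYWORDS INTEGER);
    INSERT INTO ZDOPKEYWORD VALUES
        (1, 'Places', NULL), (2, 'France', 1), (3, 'Paris', 2),
        (4, 'People', NULL), (5, 'Italy', 1);
    INSERT INTO Z_8KEYWORDS VALUES (100, 1), (100, 2), (100, 3), (101, 1), (101, 4);
""")

correlated = conn.execute(CORRELATED_SQL).fetchall()
grouped = conn.execute(GROUPED_SQL).fetchall()
print(grouped)
# [('People', 1), ('Places', 2), ('Places|France', 1),
#  ('Places|France|Paris', 1), ('Places|Italy', 0)]
```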

Version 3: Get the basic structure in Adobe Bridge format

Adobe Bridge can import keyword structures; its format uses tabs to indicate the level of each keyword.

```sql
/*
 * Create a table of all keywords. If each row of the table is
 * converted into a line in a file, the file can be imported into
 * Adobe Bridge to create the same structure.
 */
WITH RECURSIVE
/*
 * Create a table that matches keyword IDs to the full path of the
 * keyword it is associated with. Create a prefix that consists of a
 * tab for each level of depth of a keyword, with the top level
 * being level 0.
 */
fullKeywordPath AS (
  -- '' (single quotes) is the empty string; double quotes denote identifiers in SQL
  SELECT Z_PK, ZTITLE, ZPARENT, ZTITLE AS path, '' AS prefix
  FROM ZDOPKEYWORD
  WHERE ZPARENT IS NULL
  UNION ALL
  SELECT
    ZDOPKEYWORD.Z_PK,
    ZDOPKEYWORD.ZTITLE,
    ZDOPKEYWORD.ZPARENT,
    fullKeywordPath.path || '|' || ZDOPKEYWORD.ZTITLE,
    fullKeywordPath.prefix || char(9) AS prefix
  FROM ZDOPKEYWORD, fullKeywordPath
  WHERE ZDOPKEYWORD.ZPARENT = fullKeywordPath.Z_PK
)
SELECT prefix || ZTITLE AS name
FROM fullKeywordPath
ORDER BY path
```
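A minimal sketch of turning the query output into a file for Bridge: run Version 3 with Python’s `sqlite3` and write one row per line. The in-memory data and `export_bridge_keywords` are hypothetical, and the UTF-8 encoding is my assumption; check what your Bridge version expects.

```python
import os
import sqlite3
import tempfile

# Version 3 (Adobe Bridge format), with '' as the empty-string literal.
BRIDGE_SQL = """
WITH RECURSIVE fullKeywordPath AS (
  SELECT Z_PK, ZTITLE, ZPARENT, ZTITLE AS path, '' AS prefix
  FROM ZDOPKEYWORD WHERE ZPARENT IS NULL
  UNION ALL
  SELECT k.Z_PK, k.ZTITLE, k.ZPARENT, p.path || '|' || k.ZTITLE, p.prefix || char(9)
  FROM ZDOPKEYWORD AS k JOIN fullKeywordPath AS p ON k.ZPARENT = p.Z_PK
)
SELECT prefix || ZTITLE AS name FROM fullKeywordPath ORDER BY path
"""


def export_bridge_keywords(conn: sqlite3.Connection, out_path: str) -> list[str]:
    """Write one keyword per line, indented with one tab per level."""
    lines = [name for (name,) in conn.execute(BRIDGE_SQL)]
    with open(out_path, "w", encoding="utf-8", newline="\n") as f:
        f.write("\n".join(lines) + "\n")
    return lines


conn = sqlite3.connect(":memory:")  # hypothetical stand-in data
conn.executescript("""
    CREATE TABLE ZDOPKEYWORD (Z_PK INTEGER PRIMARY KEY, ZTITLE TEXT, ZPARENT INTEGER);
    INSERT INTO ZDOPKEYWORD VALUES
        (1, 'Places', NULL), (2, 'France', 1), (3, 'Paris', 2), (4, 'People', NULL);
""")

with tempfile.TemporaryDirectory() as tmp:
    out_file = os.path.join(tmp, "keywords.txt")
    export_bridge_keywords(conn, out_file)
    with open(out_file, encoding="utf-8") as f:
        written = f.read()

print(repr(written))  # 'People\nPlaces\n\tFrance\n\t\tParis\n'
```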

Find Hierarchy Problems

When you tag an image with a keyword, you can set a preference that ensures all parent keywords are also tagged. Drag-and-drop changes and other mishaps (such as an accidental click) can break this scheme.

The code below outputs every image name/keyword path pair that should be tagged to keep the hierarchy path complete, but isn’t. It also lists a UUID.

The UUID can distinguish between an image and a virtual copy. You might have a problem in some and not others. It’s tough to match a UUID to a specific image/virtual copy; if the tool reports problems with an image name/keyword pair and the image has one or more virtual copies, then check them all.

This script can be very slow if you have a lot of tagged images. I just ran it and it took 6 seconds to display any results. If it says “0 rows returned”, then there are no problems.

```sql
/*
 * Get a table of each item/keyword pair that should be in
 * Z_8KEYWORDS in order to have complete hierarchies but is not. The
 * information is returned as filename and keyword path.
 *
 * The result rows also include a UUID. Each source file has a main
 * image and zero or more virtual copies. Each is uniquely identified
 * by its UUID. If the same filename/keyword path appears more than
 * once, then the UUIDs will be different. This indicates there is a
 * problem in more than one version of the image. If a file/keyword
 * path appears only once, but there are virtual copies of the image,
 * then only one of the versions has a problem, but you will have to
 * check them all to see which has the problem.
 *
 * This may take a few seconds to run. If everything is OK, it should
 * return 0 rows.
 */
WITH RECURSIVE
/*
 * Create a table that matches folder IDs to the full path of the
 * folder it is associated with.
 */
fullFolderPath AS (
  SELECT Z_PK, ZNAME, ZPARENT, ZNAME AS path FROM ZDOPFOLDER
  WHERE ZPARENT IS NULL
  UNION ALL
  SELECT ZDOPFOLDER.Z_PK, ZDOPFOLDER.ZNAME, ZDOPFOLDER.ZPARENT, fullFolderPath.path || '/' || ZDOPFOLDER.ZNAME
  FROM ZDOPFOLDER, fullFolderPath
  WHERE ZDOPFOLDER.ZPARENT = fullFolderPath.Z_PK
),
/*
 * Create a table that matches keyword IDs to the full path of the
 * keyword it is associated with.
 */
fullKeywordPath AS (
  SELECT Z_PK, ZTITLE, ZPARENT, ZTITLE AS path
  FROM ZDOPKEYWORD
  WHERE ZPARENT IS NULL
  UNION ALL
  SELECT ZDOPKEYWORD.Z_PK, ZDOPKEYWORD.ZTITLE, ZDOPKEYWORD.ZPARENT, fullKeywordPath.path || '|' || ZDOPKEYWORD.ZTITLE
  FROM ZDOPKEYWORD, fullKeywordPath
  WHERE ZDOPKEYWORD.ZPARENT = fullKeywordPath.Z_PK
),
/*
 * Every item/keyword pair implied by the existing tags: each tagged
 * keyword plus all of its ancestors. UNION (not UNION ALL) discards
 * duplicate pairs.
 */
potentials AS (
  SELECT Z_8ITEMS, Z_10KEYWORDS, ZPARENT FROM Z_8KEYWORDS
  JOIN ZDOPKEYWORD ON ZDOPKEYWORD.Z_PK = Z_8KEYWORDS.Z_10KEYWORDS
  UNION
  SELECT potentials.Z_8ITEMS, ZDOPKEYWORD.Z_PK AS Z_10KEYWORDS, ZDOPKEYWORD.ZPARENT FROM potentials
  JOIN ZDOPKEYWORD ON ZDOPKEYWORD.Z_PK = potentials.ZPARENT
)
SELECT
  ( SELECT path FROM fullFolderPath WHERE fullFolderPath.Z_PK = ZDOPSOURCE.ZPARENT ) || '/' || ZDOPSOURCE.ZNAME AS filename,
  ( SELECT path FROM fullKeywordPath WHERE fullKeywordPath.Z_PK = ZDOPKEYWORD.Z_PK ) AS keywordpath,
  ZDOPINPUTITEM.ZUUID
FROM potentials
JOIN ZDOPINPUTITEM ON ZDOPINPUTITEM.Z_PK = potentials.Z_8ITEMS
JOIN ZDOPKEYWORD ON ZDOPKEYWORD.Z_PK = potentials.Z_10KEYWORDS
JOIN ZDOPSOURCE ON ZDOPSOURCE.Z_PK = ZDOPINPUTITEM.ZSOURCE
-- keep only implied pairs that are not actually tagged
WHERE NOT EXISTS ( SELECT 1 FROM Z_8KEYWORDS AS ik WHERE ik.Z_8ITEMS = potentials.Z_8ITEMS AND ik.Z_10KEYWORDS = potentials.Z_10KEYWORDS )
ORDER BY filename, keywordpath
```
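To see what the `potentials` CTE does, here is a minimal sketch that runs the script above with Python’s `sqlite3` against a hypothetical in-memory stand-in (only the columns the query touches). Item `uuid-A` is tagged with the whole `Places|France|Paris` chain; item `uuid-B` is tagged only with `Paris`. `potentials` expands each tag to all of its ancestors, and the `NOT EXISTS` filter keeps the pairs that are not actually in `Z_8KEYWORDS`, so only `uuid-B`’s two missing parents are reported.

```python
import sqlite3

HIERARCHY_SQL = """
WITH RECURSIVE
fullFolderPath AS (
  SELECT Z_PK, ZNAME, ZPARENT, ZNAME AS path FROM ZDOPFOLDER WHERE ZPARENT IS NULL
  UNION ALL
  SELECT f.Z_PK, f.ZNAME, f.ZPARENT, p.path || '/' || f.ZNAME
  FROM ZDOPFOLDER AS f JOIN fullFolderPath AS p ON f.ZPARENT = p.Z_PK
),
fullKeywordPath AS (
  SELECT Z_PK, ZTITLE, ZPARENT, ZTITLE AS path FROM ZDOPKEYWORD WHERE ZPARENT IS NULL
  UNION ALL
  SELECT k.Z_PK, k.ZTITLE, k.ZPARENT, p.path || '|' || k.ZTITLE
  FROM ZDOPKEYWORD AS k JOIN fullKeywordPath AS p ON k.ZPARENT = p.Z_PK
),
potentials AS (
  SELECT Z_8ITEMS, Z_10KEYWORDS, ZPARENT FROM Z_8KEYWORDS
  JOIN ZDOPKEYWORD ON ZDOPKEYWORD.Z_PK = Z_8KEYWORDS.Z_10KEYWORDS
  UNION
  SELECT potentials.Z_8ITEMS, ZDOPKEYWORD.Z_PK, ZDOPKEYWORD.ZPARENT FROM potentials
  JOIN ZDOPKEYWORD ON ZDOPKEYWORD.Z_PK = potentials.ZPARENT
)
SELECT
  (SELECT path FROM fullFolderPath WHERE fullFolderPath.Z_PK = ZDOPSOURCE.ZPARENT)
    || '/' || ZDOPSOURCE.ZNAME AS filename,
  (SELECT path FROM fullKeywordPath WHERE fullKeywordPath.Z_PK = ZDOPKEYWORD.Z_PK) AS keywordpath,
  ZDOPINPUTITEM.ZUUID
FROM potentials
JOIN ZDOPINPUTITEM ON ZDOPINPUTITEM.Z_PK = potentials.Z_8ITEMS
JOIN ZDOPKEYWORD ON ZDOPKEYWORD.Z_PK = potentials.Z_10KEYWORDS
JOIN ZDOPSOURCE ON ZDOPSOURCE.Z_PK = ZDOPINPUTITEM.ZSOURCE
WHERE NOT EXISTS (SELECT 1 FROM Z_8KEYWORDS AS ik
                  WHERE ik.Z_8ITEMS = potentials.Z_8ITEMS
                    AND ik.Z_10KEYWORDS = potentials.Z_10KEYWORDS)
ORDER BY filename, keywordpath
"""

conn = sqlite3.connect(":memory:")  # hypothetical stand-in data
conn.executescript("""
    CREATE TABLE ZDOPFOLDER (Z_PK INTEGER PRIMARY KEY, ZNAME TEXT, ZPARENT INTEGER);
    CREATE TABLE ZDOPSOURCE (Z_PK INTEGER PRIMARY KEY, ZNAME TEXT, ZPARENT INTEGER);
    CREATE TABLE ZDOPINPUTITEM (Z_PK INTEGER PRIMARY KEY, ZUUID TEXT, ZSOURCE INTEGER);
    CREATE TABLE ZDOPKEYWORD (Z_PK INTEGER PRIMARY KEY, ZTITLE TEXT, ZPARENT INTEGER);
    CREATE TABLE Z_8KEYWORDS (Z_8ITEMS INTEGER, Z_10KEYWORDS INTEGER);
    INSERT INTO ZDOPFOLDER VALUES (1, 'Photos', NULL), (2, '2024', 1);
    INSERT INTO ZDOPSOURCE VALUES (1, 'IMG_0001.ORF', 2), (2, 'IMG_0002.ORF', 2);
    INSERT INTO ZDOPINPUTITEM VALUES (10, 'uuid-A', 1), (11, 'uuid-B', 2);
    INSERT INTO ZDOPKEYWORD VALUES (1, 'Places', NULL), (2, 'France', 1), (3, 'Paris', 2);
    -- item 10 has the full chain; item 11 only has the leaf 'Paris'
    INSERT INTO Z_8KEYWORDS VALUES (10, 1), (10, 2), (10, 3), (11, 3);
""")

problems = conn.execute(HIERARCHY_SQL).fetchall()
for row in problems:
    print(row)
# ('Photos/2024/IMG_0002.ORF', 'Places', 'uuid-B')
# ('Photos/2024/IMG_0002.ORF', 'Places|France', 'uuid-B')
```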

Find Images Without Any Keywords

This script was not part of the original post; it was added on request. It outputs a list of all images that have no keywords.

```sql
/*
 * Get a table of image files which have no keywords assigned.
 *
 * The result rows also include a UUID. Virtual copies of an image
 * will have the same filename but different UUIDs.
 *
 * This may take a few seconds to run. If everything is OK, it should
 * return 0 rows.
 */
WITH RECURSIVE
/*
 * Create a table that matches folder IDs to the full path of the
 * folder it is associated with.
 */
fullFolderPath AS (
  SELECT Z_PK, ZNAME, ZPARENT, ZNAME AS path FROM ZDOPFOLDER
  WHERE ZPARENT IS NULL
  UNION ALL
  SELECT ZDOPFOLDER.Z_PK, ZDOPFOLDER.ZNAME, ZDOPFOLDER.ZPARENT, fullFolderPath.path || '/' || ZDOPFOLDER.ZNAME
  FROM ZDOPFOLDER, fullFolderPath
  WHERE ZDOPFOLDER.ZPARENT = fullFolderPath.Z_PK
)
SELECT
  ( SELECT path FROM fullFolderPath WHERE fullFolderPath.Z_PK = ZDOPSOURCE.ZPARENT ) || '/' || ZDOPSOURCE.ZNAME AS filename,
  ZDOPINPUTITEM.ZUUID
FROM ZDOPINPUTITEM
JOIN ZDOPSOURCE ON ZDOPSOURCE.Z_PK = ZDOPINPUTITEM.ZSOURCE
WHERE NOT EXISTS ( SELECT 1 FROM Z_8KEYWORDS AS ik WHERE ik.Z_8ITEMS = ZDOPINPUTITEM.Z_PK )
ORDER BY filename
```
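A similar sketch for this script, again run with Python’s `sqlite3` on hypothetical in-memory data: `uuid-A` has a keyword, while `uuid-B` and its virtual copy `uuid-C` have none. I added `ZUUID` as a tie-breaker in `ORDER BY` so that virtual copies of the same file come out in a stable order; that change is mine, not part of the posted script.

```python
import sqlite3

NO_KEYWORDS_SQL = """
WITH RECURSIVE fullFolderPath AS (
  SELECT Z_PK, ZNAME, ZPARENT, ZNAME AS path FROM ZDOPFOLDER WHERE ZPARENT IS NULL
  UNION ALL
  SELECT f.Z_PK, f.ZNAME, f.ZPARENT, p.path || '/' || f.ZNAME
  FROM ZDOPFOLDER AS f JOIN fullFolderPath AS p ON f.ZPARENT = p.Z_PK
)
SELECT
  (SELECT path FROM fullFolderPath WHERE fullFolderPath.Z_PK = ZDOPSOURCE.ZPARENT)
    || '/' || ZDOPSOURCE.ZNAME AS filename,
  ZDOPINPUTITEM.ZUUID
FROM ZDOPINPUTITEM
JOIN ZDOPSOURCE ON ZDOPSOURCE.Z_PK = ZDOPINPUTITEM.ZSOURCE
WHERE NOT EXISTS (SELECT 1 FROM Z_8KEYWORDS AS ik WHERE ik.Z_8ITEMS = ZDOPINPUTITEM.Z_PK)
ORDER BY filename, ZDOPINPUTITEM.ZUUID  -- ZUUID tie-breaker added for this sketch
"""

conn = sqlite3.connect(":memory:")  # hypothetical stand-in data
conn.executescript("""
    CREATE TABLE ZDOPFOLDER (Z_PK INTEGER PRIMARY KEY, ZNAME TEXT, ZPARENT INTEGER);
    CREATE TABLE ZDOPSOURCE (Z_PK INTEGER PRIMARY KEY, ZNAME TEXT, ZPARENT INTEGER);
    CREATE TABLE ZDOPINPUTITEM (Z_PK INTEGER PRIMARY KEY, ZUUID TEXT, ZSOURCE INTEGER);
    CREATE TABLE Z_8KEYWORDS (Z_8ITEMS INTEGER, Z_10KEYWORDS INTEGER);
    INSERT INTO ZDOPFOLDER VALUES (1, 'Photos', NULL), (2, '2024', 1);
    INSERT INTO ZDOPSOURCE VALUES (1, 'IMG_0001.ORF', 2), (2, 'IMG_0002.ORF', 2);
    -- item 12 is a virtual copy of the same source file as item 11
    INSERT INTO ZDOPINPUTITEM VALUES (10, 'uuid-A', 1), (11, 'uuid-B', 2), (12, 'uuid-C', 2);
    INSERT INTO Z_8KEYWORDS VALUES (10, 1);
""")

untagged = conn.execute(NO_KEYWORDS_SQL).fetchall()
for row in untagged:
    print(row)
# ('Photos/2024/IMG_0002.ORF', 'uuid-B')
# ('Photos/2024/IMG_0002.ORF', 'uuid-C')
```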

These were tested on a small test database sent by @platypus. Let me know if you run into any problems using them.

Please don’t take what I am about to say as being in any way harsh, but my main concern with all this SQL “stuff” is that it doesn’t interact directly in any way with either PhotoLab or any other app, without an in-depth knowledge of BASIC, scripting, using the console and generally messing around manually with the file system.

I would like to ask a question: how do I use it to find images that match a keyword search term and then open those images in PhotoLab?

I referred to my app because, from the average photographer’s point of view, it allows them to simply enter keywords (including in hierarchies) in a lookup list and manage them visually using drag and drop, add those keywords to images (without the requirement to use the PL database), transfer those files to any other app and then use the keyword list to populate searches. You don’t even need to use PL. Once the search returns, it is a simple matter of double clicking on any of the included images to open them in PL.

But, whatever you do or don’t do, don’t use PL to modify metadata that is managed in an external app, even mine. That is one sure way to mess everything up.

As for the PL database, I don’t even use it and, in fact, regularly delete it after changing the structure of my file storage.

Why am I so insistent on avoiding the database for metadata? Because, instead of using a SPOD (single point of definition), PL manages to conflate multiple definitions in…

- the database

- DOP files

- XMP files

- it even reads keywords directly from RAW files.

As opposed to the sensible way of having a SPOD in either the original file or an XMP sidecar.

> my main concern with all this SQL “stuff” is that it doesn’t interact directly in any way with either PhotoLab or any other app

My thread is about extracting useful info from PL using SQL (since PL provides no formal hooks to its internal workings). The tools I have provided so far all deal with keywords.

If your keyword system is external to PL, then the tools are of no use to you. If you use PL’s database for keywording, then they are. Arguments about which approach is superior are irrelevant in this thread. If you’d like to argue about the merits of one approach vs. another, please start your own thread on the topic.

> My thread is about extracting useful info from PL using SQL

Might I ask for what purpose? How do you intend that folks make use of what they have extracted?

> The tools I have provided so far all deal with keywords.

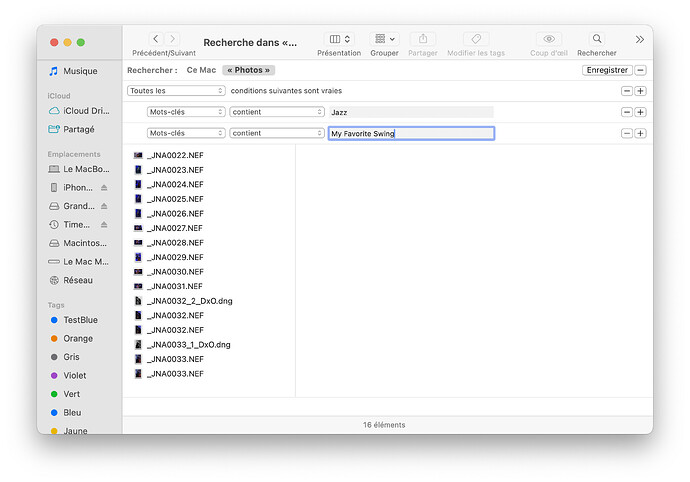

But Mac users don’t need such tools, since Finder, with its Spotlight search mechanism, can find files that match multiple keywords all on its own…

Or, just press Cmd-I on a file to see what keywords it contains…

> If you use PL’s database for keywording, then they are

Unless you use XMP sidecar files for metadata, in which case both PL and most other tools can access them without any reference to the database, which, as I said before, can cause confusion.

Finally, how would you move the metadata to keep it in sync when you move images?

> Might I ask for what purpose? How do you intend that folks make use of what they have extracted?

Every script describes its purpose. People can use the information for whatever they want.

Nothing else in your post is relevant to this thread. You can DM me if you want to discuss these side issues with me.

> Here are the Mac versions.

Thank you @freixas

I tested the sqls against a full import of the images that I have in Lightroom.

All sqls worked without errors.

Comparing the V1 export to Lightroom’s direct export, I found that DPL’s database is a few dozen keywords short… due to files that DPL can’t or won’t read while indexing.

As of now, the sqls are informative and can serve to check indexing, e.g. when switching between DPL and LrC. The lists could also help to, e.g., flatten a hierarchical list of keywords (and vice versa), but, after all, having a list does not link the keywords to the respective images. For that, we still need to fully import (or index) the respective photo archive; then the keywords will be read, catalogued and, most importantly, linked to the respective images.
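One way to do the comparison described above is to turn a tab-indented keyword export (the format used in the Bridge variant and, I assume, by Lightroom’s keyword export) into the same `|`-separated paths that Version 1 produces, then compare the two lists as sets. This is a hypothetical sketch: the handling of `{synonym}` and `[keyword]` lines reflects my understanding of Lightroom’s export conventions and should be checked against a real file.

```python
def tabbed_to_paths(text: str) -> list[str]:
    """Convert a tab-indented keyword list into pipe-separated full paths.

    Assumptions: one keyword per line, one leading tab per level; lines in
    {braces} are synonyms and are skipped; [brackets] around a name are removed.
    """
    paths: list[str] = []
    stack: list[str] = []
    for raw in text.splitlines():
        name = raw.strip()
        if not name:
            continue
        depth = len(raw) - len(raw.lstrip("\t"))
        if name.startswith("{") and name.endswith("}"):
            continue  # synonym, not a keyword of its own
        if name.startswith("[") and name.endswith("]"):
            name = name[1:-1]
        del stack[depth:]  # drop deeper levels left over from the previous branch
        stack.append(name)
        paths.append("|".join(stack))
    return paths


def compare_keyword_lists(pl_paths, other_paths):
    """Return (only in the other list, only in the PhotoLab list), each sorted."""
    pl, other = set(pl_paths), set(other_paths)
    return sorted(other - pl), sorted(pl - other)


pl_export = ["People", "Places", "Places|France", "Places|France|Paris"]  # e.g. Version 1 output
lr_export = "Places\n\tFrance\n\t\tParis\n\t\t\t{Lutetia}\n\tItaly\n[People]\n"

missing_in_pl, missing_in_lr = compare_keyword_lists(pl_export, tabbed_to_paths(lr_export))
print(missing_in_pl)  # ['Places|Italy']
print(missing_in_lr)  # []
```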

My current Macs index about 1000 images per minute, and a direct DB transfer would be much faster and more comfortable… if only DxO (and Adobe) provided and maintained such services. I’d wonder if any such possibility were provided by whoever is bold enough for this (fool’s?) errand.

…well, in my experience, of Lightroom, Capture One and DxO PhotoLab, PhotoLab has been the only one that has worked seamlessly and flawlessly with both PhotoMechanic and iMatch, without any need for the “manual synchronizations” that used to be necessary with both Lightroom and Capture One, even with “Synchronize metadata with sidecar files” switched on.

You also have to decide whether you want to use just the plain keywords or the whole structures in the same screen.

I think DxO should thank Freixas for his initiative in publishing this little SQL hack. Now that he has also done this job for DxO, we might finally get a function that makes it possible to import and export the keywords used in PhotoLab. This is the minimum for a serious photo DAM implementation: being able to exchange vocabularies between different platforms using XMP.

So, dear DxO, see, this really wasn’t any harder than this, because the SELECT statement, or whatever I should call it, is really the first thing you learn when you start learning SQL. It is in the very first basic course!!

When I migrated from PhotoMechanic (a more serious and professional tool than PhotoLab), I could easily export all the keywords I had used over four years, both in PM Plus and synchronized to PhotoLab, and import them into iMatch 2025 without the slightest problem. Even Lightroom and Capture One have functions like that. BUT, the way DxO PhotoLab works today, it only supports users migrating TO PhotoLab, not FROM PhotoLab, and that is the very definition of DxO trying to “lock in” their user base. It doesn’t look nice at all, sad to say. Or maybe it is as sad as DxO having totally lost interest in completing PhotoLibrary so that it can be a decent player in the XMP society?

Even if Freixas excuses DxO for being a small company with limited resources, I don’t. Have you seen what Mario Westphal at Photools (a one-man company) has been able to do entirely on his own, as a single developer, with iMatch 2025? We have just had a discussion in Sweden about why neither the multibillion-dollar company Adobe, which still owns the graphics applications market, nor CameraBits, which develops the industry-standard photo DAM application PhotoMechanic, was the one to finally solve the big efficiency problems with populating IPTC/XMP Descriptions and IPTC/XMP Keywords (both flat and hierarchical) that we have had for decades.

You didn´t know about that?

This has very little to do with how much economic muscle a company has. In fact, it has very little to do with muscles at all. It is all about brains, skill levels and devotion to the cause of building the best personal DAM system on the market today. See and learn, DxO, CameraBits and Adobe!

> I tested the sqls against a full import of the images that I have in Lightroom.
>
> All sqls worked without errors.

Thanks for the reply.

> As of now, the sqls are informative

As long as you limit operations to read-only, informative is the best you get. The output from the SQL can be further manipulated for various uses, through spreadsheets or other methods, but it’s still just information—the value being that it is information that PL doesn’t provide.
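As an example of the spreadsheet route, a minimal sketch that writes any query result to CSV with Python’s standard `csv` module, taking the header row from `cursor.description`. `write_csv` and the in-memory table are hypothetical; any of the scripts in this thread could supply the cursor instead.

```python
import csv
import io
import sqlite3


def write_csv(cursor: sqlite3.Cursor, out) -> None:
    """Write a header row (from cursor.description) followed by all result rows."""
    writer = csv.writer(out)
    writer.writerow([column[0] for column in cursor.description])
    writer.writerows(cursor)


conn = sqlite3.connect(":memory:")  # hypothetical stand-in data
conn.executescript("""
    CREATE TABLE ZDOPKEYWORD (Z_PK INTEGER PRIMARY KEY, ZTITLE TEXT, ZPARENT INTEGER);
    INSERT INTO ZDOPKEYWORD VALUES (1, 'Places', NULL), (2, 'France', 1), (4, 'People', NULL);
""")

buffer = io.StringIO()
write_csv(conn.execute("SELECT ZTITLE AS keyword, Z_PK AS id FROM ZDOPKEYWORD ORDER BY ZTITLE"), buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

For a real file, the `csv` documentation recommends opening it with `newline=""`, e.g. `open("keywords.csv", "w", newline="", encoding="utf-8")` (the filename is only an example).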

> I’d wonder if any such possibility were provided by whoever is bold enough for this (fool’s?) errand.

It’s possible and it’s the kind of thing I could do if I had copies of both programs and access to whatever database they used. But giving away tools that write into databases not directly under one’s control is a risky proposition.

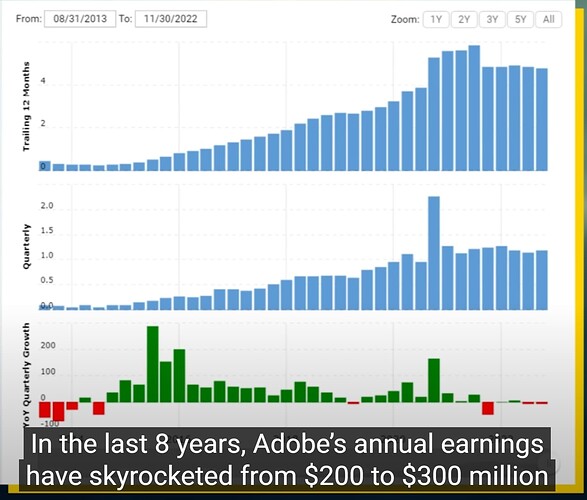

Do we really believe that Adobe would have to raise prices out of financial necessity, just because they added some new AI features??

The truth is that Adobe has actually underperformed for a really long time since the subscription model was introduced, because they soon calculated that the all-time high would be even higher if they weren’t so eager to plow everything they earned back into R&D. Why strain yourself to motivate people to upgrade when you no longer have to? The money just keeps pouring in anyway. As for the claim that the AI support in Lightroom justifies sharply increased prices, I don’t believe that either. On the contrary, some now point out that Adobe has lagged behind in that development and that this has also shown up in a weak share price. Some see Adobe as technically somewhat in decline now.

I myself ran Lightroom from the very beginning, from version 1.0 to 6.14, when I migrated to DXO Photolab, and a little later I also started using Capture One in parallel. The reason for this was Lightroom’s relatively abysmal previews with my Sony RAW and that the program lacked the support for tethering for Sony that Capture One had. Photolab still provides clearly better image quality than Lightroom through Deep Prime, and Capture One is clearly more competent in many ways. Lightroom still lacks the efficiency and productivity focus that, for example, Capture One has had for a long time now. I find it difficult to understand that Lightroom is still seen as some kind of industry standard. In my eyes, it only testifies to the indifference of ignorance.

I once started trying to build an XMP metadata-controlled image library with Lightroom’s catalog, but it was completely, hopelessly inefficient, so I gave up on that project for a long time. Much later, I invested in PhotoMechanic Plus 6, then the most effective metadata maintenance program and photo DAM on the market, just when it was released, and I used it until a few months ago, when I stumbled upon iMatch 2023.

Then iMatch 2025 happened, which completely changed the conditions. Although PhotoMechanic Plus 6 was already running circles around Lightroom, the program has a major weakness: despite all the available mass-processing functions, image descriptions (IPTC Descriptions) and search keywords (IPTC Keywords) usually have to be entered completely manually. This reduces workflow productivity enormously, and it is a real pain for everyone except professional sports photographers in the USA, because there are companies that specialize in producing “search and replace” lists with the lineups of the players in these matches. But that doesn’t help ordinary photographers like me very much. We are still left with Descriptions and Keywords to write.

Neither Adobe, with all its forced subscription billions, nor the market-leading, 20-year-old CameraBits, whose PhotoMechanic Plus DAM has been developed at a snail’s pace, has managed to solve these problems. CameraBits’ rate of innovation has been so low that they did not manage a single version upgrade until now; it took over four years. As a result, they have finally run into such serious financial problems that they have ended up in the arms of venture capital.

Personally, I am quite amazed that it was a very small but very competent and efficient German ONE-MAN COMPANY called Photools that finally solved, for me and many others, the hopeless productivity drain of handling image descriptions and keywords manually, and did so already in the version released in 2023. The problem then was that the AI models were not quite where they are today, but you could already see where things were going, and that, not the price increases, is what made me start a test migration to iMatch.

However, it was only now, just a month ago, that the market-leading AI models from OpenAI and Google reached the versions OpenAI GPT-4.1 and Google Gemini 2.0 Flash, which really lifted everything and made it fall into place. Developer Mario Westphal at Photools made these models available in the newly launched iMatch 2025 within just a week or so, and in my eyes this has made both PhotoMechanic and all the so-called photo DAMs built into our most common RAW converters look like antiquated technical history from another time, which they actually are now.

Don’t listen to Adobe’s excuses! Mario Westphal and his small company pay nothing at all for their customers’ access to image interpretation via AI from Google, OpenAI, Microsoft or Mistral. There are even a number of completely free AI models that run entirely locally via interfaces such as Ollama and LM Studio. Nor does he pay for the use of commercial maps and position data with reverse geocoding from Google, Microsoft or HERE, because the users always do that. Here too, free alternatives such as OpenStreetMap are offered, so in that context Adobe’s arguments seem quite hollow.

Photools has thus made two important design choices here:

The first was to make a common AI interface available for all these different AI services, whether commercial or non-profit. (Where they are non-profit, you are only asked to send a little money to the organizations whose free services you use. Users have a choice to make and can register with AI providers themselves, if necessary, to get the API keys needed for the services to be available in iMatch.)

The second was to build an application, Autotagger, that offers an efficient interface for, among other things, controlling these AI services via four different prompt interfaces: three “static” ones for Descriptions, Keywords and Landmarks, and finally an “ad hoc” interface for quickly adapting the prompt to the images you want to influence on the next run.

It is also the case that the cheapest commercial services, which are now very good, are very affordable. No one needs to be ruined by those costs either. With OpenAI GPT-4.1 Mini, 1 million tokens (everything is paid in tokens) cost 0.45 USD. It takes about 1,000 tokens to process an image, so 1,000 images cost just over 4 kronor. If you also have a lot of images that can use the same text, only 1,000 tokens are needed for any number of them.
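The arithmetic behind that figure, taking the quoted 0.45 USD per million tokens and about 1,000 tokens per image at face value (and assuming, for simplicity, that this single rate applies to every token used):

$$
1{,}000\ \text{images} \times 1{,}000\ \tfrac{\text{tokens}}{\text{image}} = 10^{6}\ \text{tokens},
\qquad
10^{6}\ \text{tokens} \times \frac{0.45\ \text{USD}}{10^{6}\ \text{tokens}} = 0.45\ \text{USD}
$$

The kronor amount depends on the exchange rate; at an assumed 9 to 10 SEK per USD, 0.45 USD comes to roughly 4.05 to 4.50 kronor.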

So if you want to run “for free” using LM Studio or Ollama, you can process images locally with, for example, Google Gemma (4GB or 12GB), but that requires at least 8 to 12 GB on a good graphics card, which can cost a lot. If you choose commercial models instead, the graphics card matters much less, and you can use much larger and much more competent models from AI providers. That often makes a big difference, for example when it comes to identifying so-called “Landmarks” in the images.

However, both Gemini Flash Lite and OpenAI GPT-4.1 Mini are very fast and surprisingly good, so they are almost always enough. I clocked 1 minute and 45 seconds to process 250 images, which works out to about 7 minutes for 1,000 images, with captions and keywords of such quality that little or no additional manual work is normally needed. The smaller models are significantly faster than the larger ones. It is this productivity gain that you should weigh against doing the work essentially by hand.
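The extrapolation from the measured run, assuming throughput stays constant as the batch grows:

$$
\frac{105\ \text{s}}{250\ \text{images}} = 0.42\ \tfrac{\text{s}}{\text{image}},
\qquad
1{,}000\ \text{images} \times 0.42\ \tfrac{\text{s}}{\text{image}} = 420\ \text{s} = 7\ \text{min}
$$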

This thread is basically about costs, and the funny thing is that little Photools does NOT use a subscription model but now charges about 1700 for a perpetual license. If you buy one now from the venture-capital-owned CameraBits, a PhotoMechanic Plus 6 perpetual license costs about 5200:-. That is three times more, and Adobe probably wants about the same amount that iMatch costs forever, every year. Capture One is even more expensive, but they’ve actually kept up a decent pace of innovation so far, so that’s OK with me. I never let myself be guided by what software costs, but by what it can do for my productivity.

I still don’t understand why neither a billion-dollar company like Adobe nor a niche market-leading Photo DAM company like CameraBits, whose PhotoMechanic is used by basically all sports and event photographers, at least in the US, have managed to solve the productivity problems that the manual processes around Descriptions and Keywords have created, despite the users’ explicit requests in “feature requests”.

I also don’t understand how a small one-man company like Photools, which has existed for 20+ years and has financed version after version, has managed to solve what neither the world’s largest graphics application company nor the world’s leading photo DAM maker CameraBits has so far managed to do. I have actually read the CameraBits forum myself, so I know that these AI functions are in real demand among photographers who value their time and don’t just stare blindly at a possible subscription cost.

The future will open up many new opportunities for photographers and creators who have already understood how to use AI to their advantage, and for once it will probably not be costs that limit us but the creativity in our AI prompts. Because if a system like iMatch, or any other AI system, is really to come into its own, it must be controlled, and this applies to all AI. The photographers who first understand this and embrace the new possibilities without prejudice will have a major competitive advantage over those who do not. Those who are waiting for the “AI hype” to blow over will probably be disappointed, because now even the biggest industrial group associated with the Wallenberg family is preparing, together with NVIDIA, to lay the foundation for an AI infrastructure that can drive their industries’ future AI applications.

> has very little to do with muscles at all. It is all about brains

…and the notion of “what our customers need” and the respective tendency to ignore the customers, “they keep paying anyways” …

Take people like Dave Coffin, Phil Harvey and others. Their devotion has moved more ground than many a Dollar.

> write into databases not directly under one’s control is a risky proposition.

What’s the risk? Having to do something for the rest of your natural life while your users yell at you because the new release was due out 7 hours ago, you know what I mean. Fool’s errand. Bottomless vat, Sisyphus. Greetings from the hamster wheel.

> What’s the risk?

I meant the risk to me. I could write code to transfer PL to Lr and back, but it’s risky since I don’t control either tool. Read-only SQL scripts aren’t going to hurt anything.

No, Freixas is right about this. Running a relatively simple SELECT query like this won’t harm anything in the database. If I understood Freixas, his point is just to expose how simple it is to extract this keyword data from the database, even if it happens to be structured.

Would it be too much to humbly ask DxO for an appropriate export interface for this SELECT query Freixas has just helped them with? All the competition has a function like that, so it is really basic in the XMP tool world.

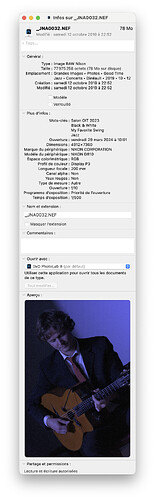

Can I just reiterate, an export mechanism already exists, in the form of XMP sidecar files. When it comes to metadata, there is absolutely no need to use the database.

Why should DxO spend time and effort reinventing that particular wheel?

> …and the notion of “what our customers need” and the respective tendency to ignore the customers, “they keep paying anyways” …
>
> Take people like Dave Coffin, Phil Harvey and others. Their devotion has moved more ground than many a Dollar.

I also very much like Ed Hamrick, the father of VueScan and of the one-man company Hamrick Software. VueScan has also been industry-standard scanning software for decades; it is said to have a user base of 600,000 worldwide and to support more than 6,000 scanner models.

Ed Hamrick also gives his customers the same kind of totally devoted support as Mario Westphal at Photools. So, if one-man companies like Photools and Hamrick Software can both develop world-class software AND run world-class support for it, why can’t companies with far, far more resources do the same, and why do we keep excusing them when they don’t?

> No, Freixas is right about this. Running a relatively simple SELECT query like this won’t harm anything in the database. If I understood Freixas, his point is just to expose how simple it is to extract this keyword data from the database, even if it happens to be structured.

My point was to provide a tool that PL lacks. I wasn’t “exposing” anything…

For DxO, the feature is not quite as simple. It requires capturing the SQL in code, writing the GUI, developing regression tests, etc. Compared to a lot of other work, it’s relatively simple, but it’s not one-SQL-script simple.

There is a “Feature Request” forum that is appropriate for suggesting features you think are missing from PL. This particular feature may already be in the requested pile; if so, vote for it.

> Can I just reiterate, an export mechanism already exists, in the form of XMP sidecar files. When it comes to metadata, there is absolutely no need to use the database.

For performance reasons, I don’t keep the database and keywords in sync. I do have a personal tool to sync the files should I find it useful (PL is the master). You are welcome to choose a different way of working.