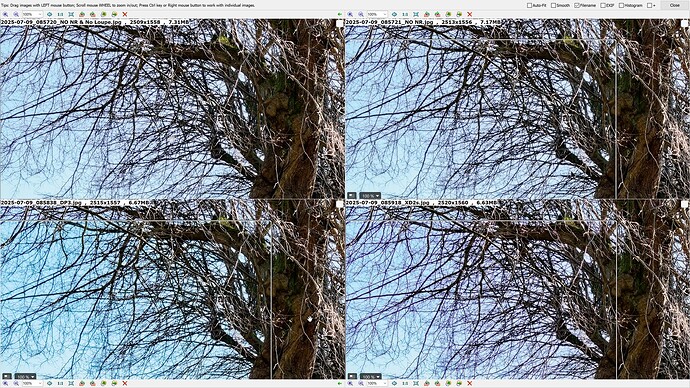

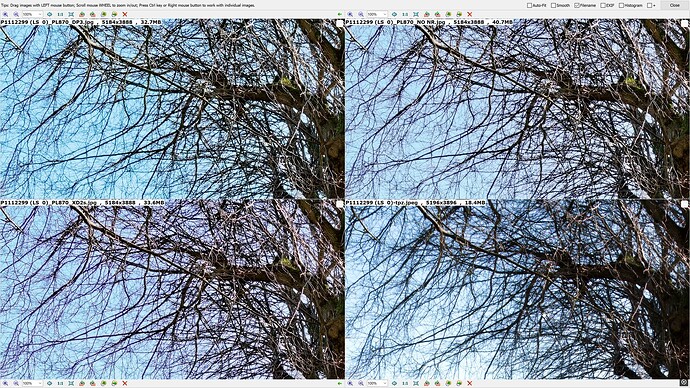

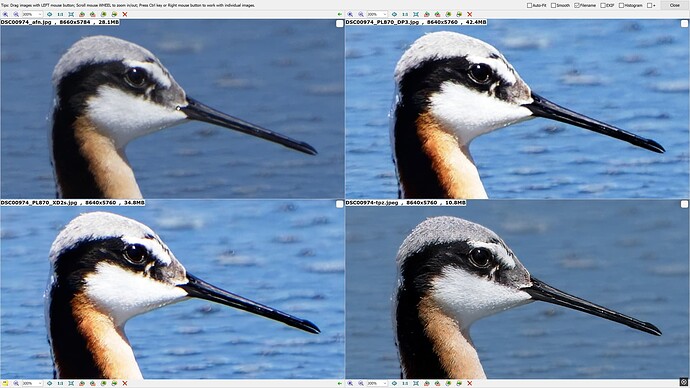

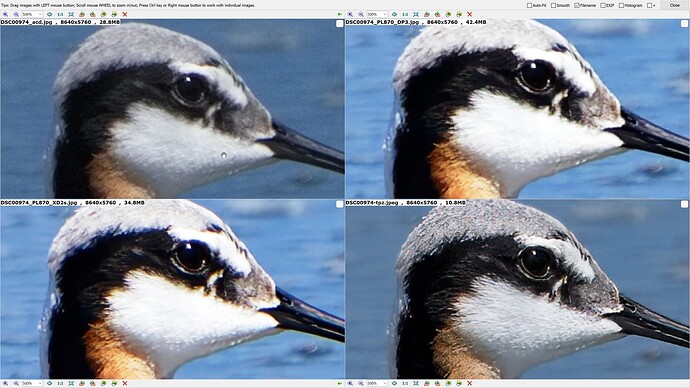

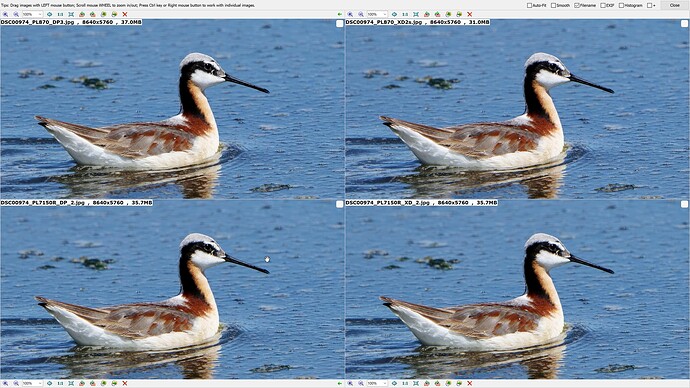

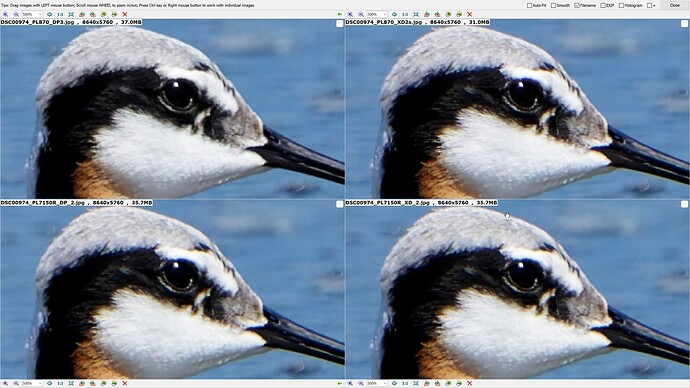

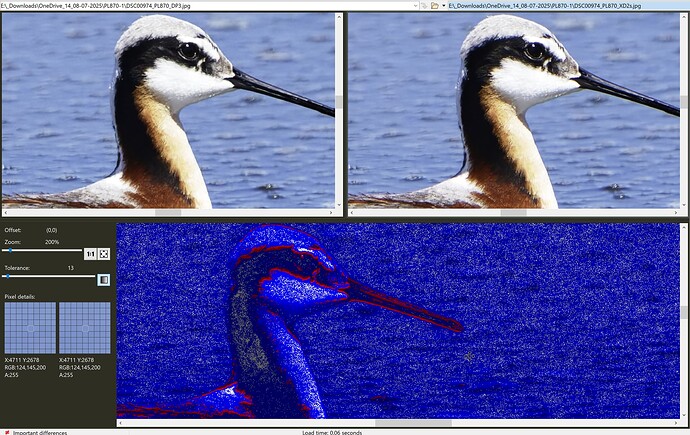

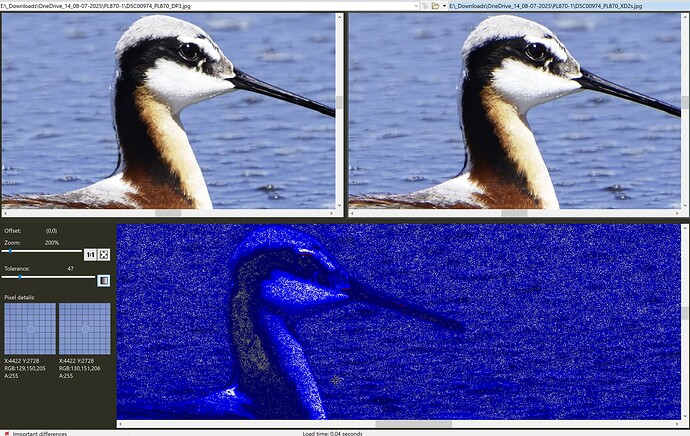

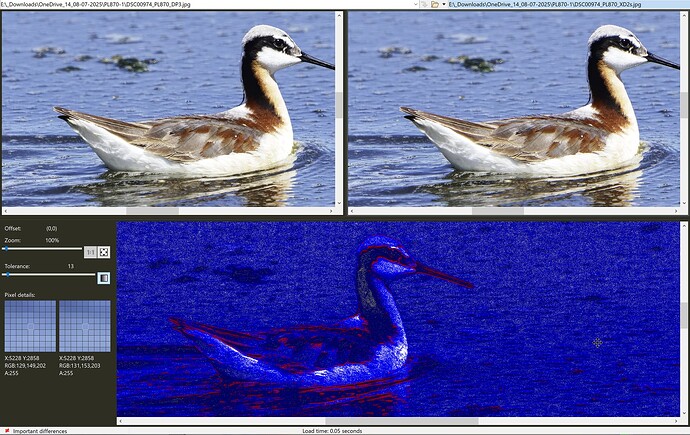

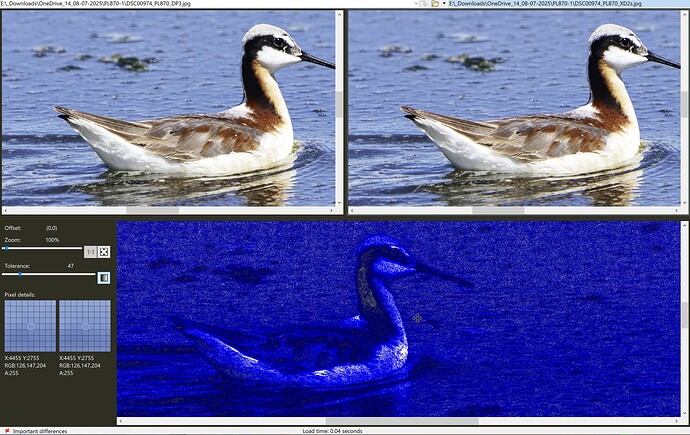

@platypus I have used it in the past to check whether one image output matches another and, if not, where they differ. With different noise reduction schemes, some of what you see is just the noise, shown as a difference between the images.

Typically I use it for text, with the tolerance set at the left-hand end of the scale, i.e. no tolerance whatsoever.

I forgot to use it earlier, which was another silly error. The video I recorded shows BC (Beyond Compare) starting at a tolerance of 76, moving quickly down to the lowest tolerance and then going back up the scale one step at a time.
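For anyone who wants to see what a tolerance does in principle, here is a minimal sketch in plain Python. It is not how Beyond Compare works internally (I don't know that); it simply assumes that a pixel counts as "different" when its largest per-channel difference exceeds the tolerance. The function names `diff_mask` and `count_differences` are made up for this illustration.

```python
def diff_mask(img_a, img_b, tolerance=0):
    """Return rows of booleans: True where a pixel differs by more than tolerance.

    Images are lists of rows, each row a list of (R, G, B) tuples.
    Assumption: a pixel's difference is the largest per-channel absolute difference.
    """
    if len(img_a) != len(img_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(img_a, img_b)
    ):
        raise ValueError("images must have the same dimensions")

    mask = []
    for row_a, row_b in zip(img_a, img_b):
        mask_row = []
        for px_a, px_b in zip(row_a, row_b):
            # Largest absolute difference across the R, G and B channels
            delta = max(abs(ca - cb) for ca, cb in zip(px_a, px_b))
            mask_row.append(delta > tolerance)
        mask.append(mask_row)
    return mask


def count_differences(img_a, img_b, tolerance=0):
    """Count the pixels flagged as different at the given tolerance."""
    return sum(flag for row in diff_mask(img_a, img_b, tolerance) for flag in row)


# Two tiny 1x3 "images": a red shift of 20, a noise-like change of 2, and no change
a = [[(200, 50, 50), (10, 10, 10), (0, 0, 0)]]
b = [[(180, 50, 50), (12, 10, 10), (0, 0, 0)]]

for tol in (0, 2, 76):
    print(tol, count_differences(a, b, tol))
```

At zero tolerance both changed pixels are flagged, at a tolerance of 2 only the larger colour shift remains, and at 76 nothing is flagged, which is roughly why stepping the tolerance up and down separates noise-level differences from real colour changes.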

What it shows, in this case, is the “loss” of colour that you, I and some other users (@Wolfgang) saw, and also the differences that we can’t see (and “what the eye can’t see the mind shouldn’t worry about”).

It is just another tool that can be used to verify a “hunch”; it is not needed on a routine basis.

@platypus I know that you are also a Beyond Compare user and thank you for your description of the denoising process.

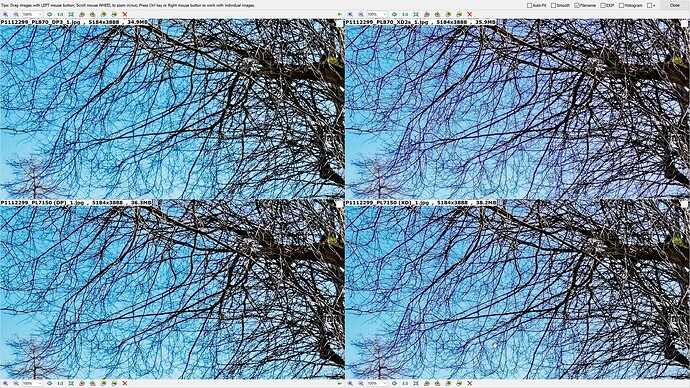

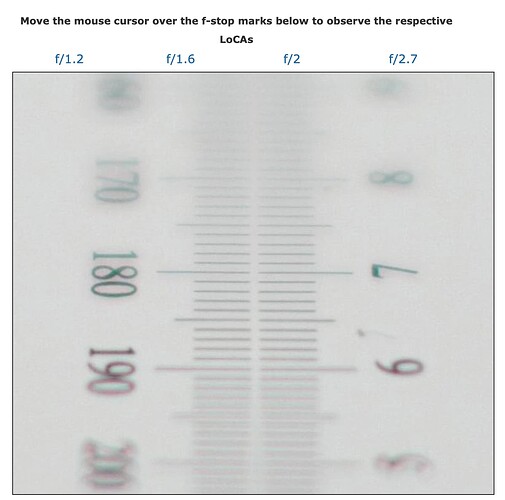

But that doesn’t change my suspicion that this has been done rather than providing a better CA tool for the user. The current CA tool is lamentable (in my opinion) and a better one is definitely needed, but I am not sure how a better CA tool would counter the fact that DP XD2s is actually adding CA to the image.

From what has been written, it appears that the DP3 de-noising model has been trained to reduce CA; whether that is lens CA or the CA that the XD2s model was adding is unclear.

My suggestion is that the loss of the colour fringe in the OP’s image is actually “fall-out” from the revised noise reduction model in DP3.

AI models are the result of training, typically on a vast number of images, but can such images “bias” that training, intentionally or unintentionally?

If we are to pursue this line of inquiry any further, we need some images where reddish fringes occur naturally, so that we can see what DP3 does to them.

I do believe it is a bug created by the intent of DP3 to resolve certain issues in XD2s. XD didn’t do a much better job either, but it adds less CA to my images.