@RvL your statement is correct, but "the hardware" means all of it, CPU and GPU. I have written numerous times that there should have been another test: timing how long it takes to render the image without any noise reduction, which would allow the largest CPU element of the export process to be removed from the timings!

There is still CPU involvement in the DP and DP XD processes, but the largest element of those operations comes down to the power (or otherwise) of the GPU.

So for normal work in DxPL the CPU is a key element in getting the image rendered and on the display as editing takes place. The GPU connected to the monitor that is displaying the PhotoLab workspace plays only a minor part, according to my tests!

In fact, in my case it plays no part unless I move the PhotoLab display to one of my 1920 x 1200 outboard displays. I have three connections to three monitors: one 2560 x 1440 display from the onboard GPU, one 1920 x 1200 display also from the onboard GPU, and the other from the discrete graphics card.

At no point does the discrete GPU enter into the real processing of the image on a Windows machine until the image is exported with DP and DP XD.

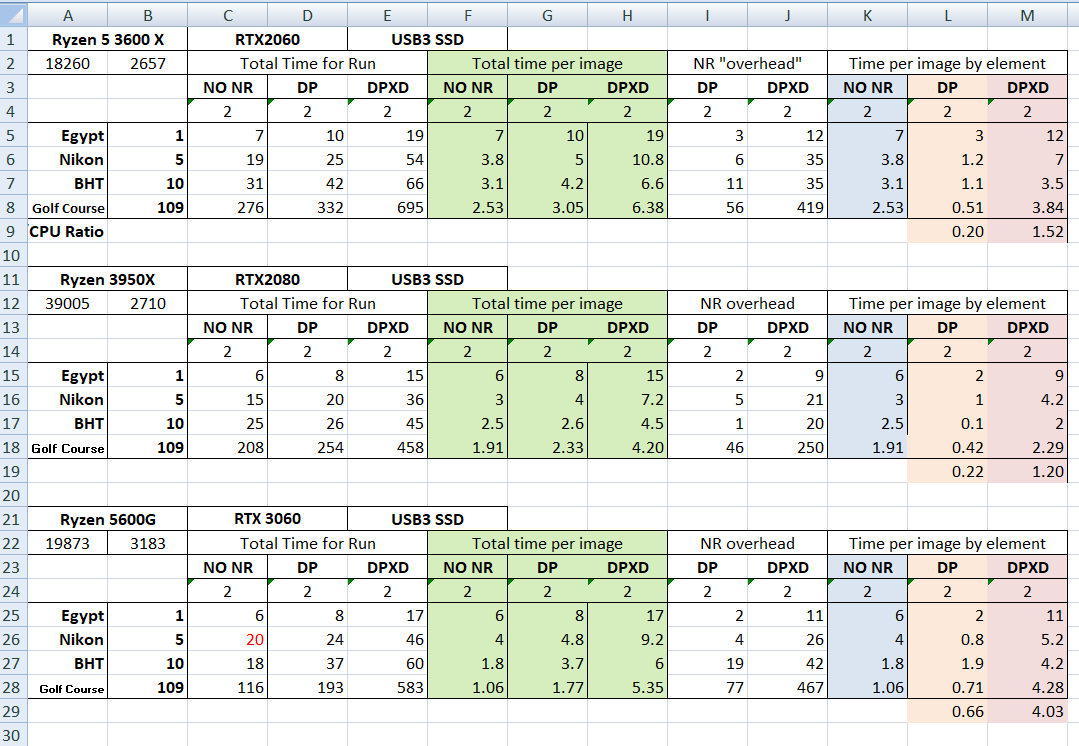

So in my opinion the Google worksheet is not completely useless, but it is flawed, since every time shown includes a contribution from both the CPU and the GPU!

Hence, I have been showing tables like this (comparing the times from my grandson’s machine with my son’s machine and with the machine I finally opted to build, but with a motherboard that could take a Ryzen 5900X or 5950X for an additional £250-£300) that seek to show which elements of the export process use which processor (CPU or GPU).
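For anyone wanting to do the same split on their own timings, here is a rough sketch of the arithmetic. It assumes that an export with no noise reduction approximates the mostly-CPU baseline and that the extra time with DP or DP XD is mostly the GPU-dominated part; both are simplifications, since there is still some CPU involvement in DP and DP XD. The function name and the example figures are made up for illustration and are not measurements from any machine.

```python
def split_export_time(no_nr_seconds: float, with_nr_seconds: float) -> dict:
    """Split one export timing into a baseline part and a noise-reduction part.

    Assumption: the no-NR export time stands in for the mostly-CPU baseline,
    and the difference is attributed to noise reduction (DP / DP XD).
    """
    if no_nr_seconds < 0 or with_nr_seconds < no_nr_seconds:
        raise ValueError("expected 0 <= no_nr_seconds <= with_nr_seconds")
    nr_seconds = with_nr_seconds - no_nr_seconds
    # Share of the total export time attributed to noise reduction.
    nr_share = nr_seconds / with_nr_seconds if with_nr_seconds else 0.0
    return {
        "baseline_seconds": no_nr_seconds,
        "nr_seconds": nr_seconds,
        "nr_share": nr_share,
    }


# Placeholder figures only, NOT real measurements.
example = split_export_time(no_nr_seconds=4.0, with_nr_seconds=10.0)
print(example)
```

Running the same split for each machine gives two columns that can be compared separately, rather than one combined figure.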

I believe that for the export process alone something from an RTX 3060 upwards offers a good return on investment, but I also believe that the further you go upwards from the RTX 3060 the less additional “bang for your buck” you seem to get. For example, the 2080 cost more than double (treble!?) the 2060 when my son built his machine and then my grandson’s, but for NR processing (DP and DP XD) it does not show a good return on investment!

However, every second saved per image adds up to 1,000 seconds (nearly 17 minutes) if you are frequently processing and exporting batches of 1,000 images!
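To put a number on that for your own batch sizes, a small sketch like the following does the sum. The function name is just something I made up for illustration, and it assumes the per-image saving stays the same across the whole batch.

```python
def batch_saving(seconds_saved_per_image: float, image_count: int) -> str:
    """Return the total time saved over a batch as seconds and minutes."""
    total = round(seconds_saved_per_image * image_count)
    minutes, seconds = divmod(total, 60)
    return f"{total} s ({minutes} min {seconds} s)"


print(batch_saving(1, 1000))  # 1000 s (16 min 40 s)
```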

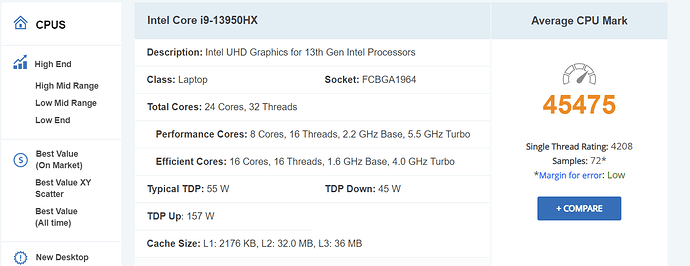

Looking at the machine you quote, the PassMark score is

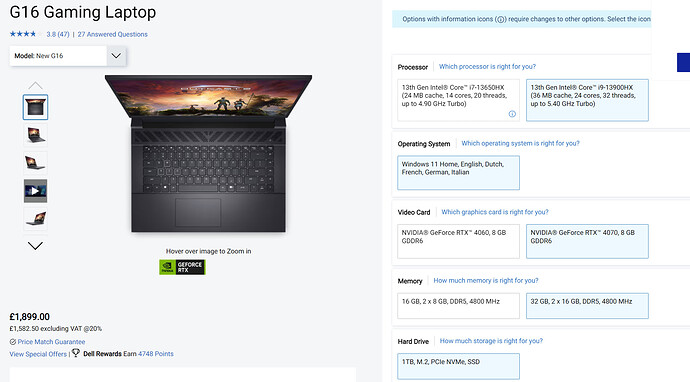

and at a guess you are looking at

or something similar, which likely exceeds my son’s machine shown in the table above (the 3950X with an RTX 2080). Please remember, as stated in another post above, that a laptop’s GPU is slower than the same “model” in a desktop machine; a figure of 20% slower is often quoted.

I hope this helps!