I’ve read through a number of topics and the general consensus is not to keep large numbers of images in one folder. What I’m wondering is whether it’s possible to stop Photolab counting through all the images in a folder each time I start the software and open that folder. I’m not familiar with how Photolab works, but I’m assuming it’s looking for changed files? I’d rather set it to sync manually when I’ve added files so I don’t have a long wait each time I go into the folder.

Welcome to the forum, @Johnmcl7

PhotoLab checks files for changed metadata and, the first time it sees the images, applies the default preset. There is no switch to stop DPL from doing this…and that is why it is good practice to keep the number of images per folder low (hundreds, not thousands).

I’m not talking about the first-time scan, I’m talking about the ones after. Every time I open the camera directory it counts up through every single file, which is completely pointless as there are no changes for it to find. With Lightroom, you tell it when files have been added and then it scans through them. It has done this for as long as I can remember and it has no issues with large photo libraries, nor do ON1 or Capture One after testing them on the same libraries.

The buzzword here is “find”. How can PhotoLab know that nothing has changed?

Unlike e.g. Lightroom with its explicit import feature, PhotoLab ingests images as they come along. There is no means to make DPL stop looking for new files in any folder you point it to. This is how DPL was designed, and many users of this forum welcome that DPL works as it does. Not me though, I prefer explicit import.

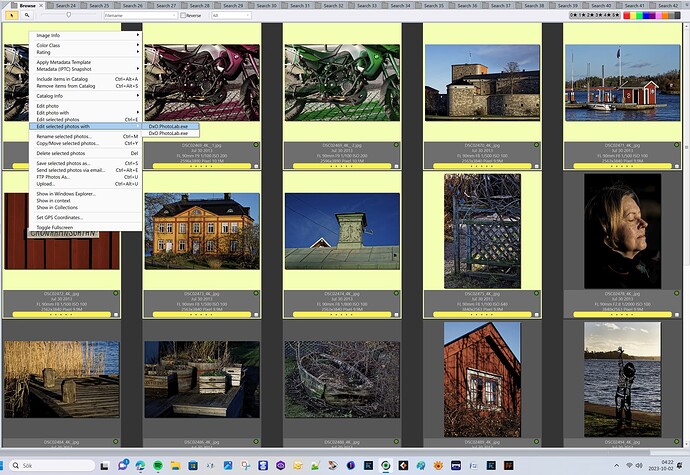

Photolab scales far better than both LR and CO when it is used together with an external application like Photo Mechanic. If you don’t want another external library, it also works with a lot of viewers that can open a set of images of your choosing in Photolab.

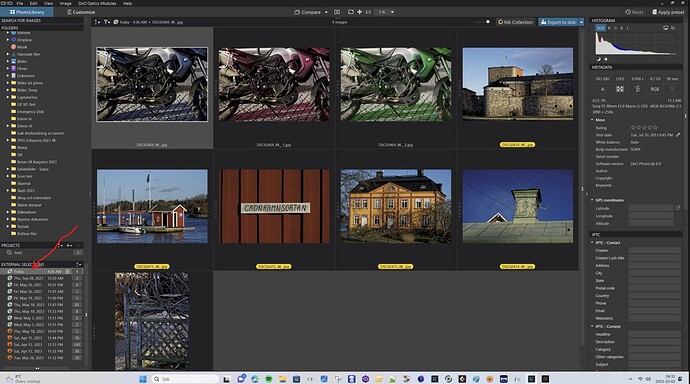

It is not necessary at all to open a folder with Photolab. You can do it with any viewer of your liking too, and you don’t necessarily have to open everything in a folder. You decide! These batches of yours are then accessible anytime via the record that the Image Library saves in Photolab as External Searches.

Why do you say that Photolab scales better than Lightroom or Capture One? Photolab is excessively slow on first startup and it’s still painfully slow on each later startup. Each folder has to be indexed every time you use it, whereas Lightroom’s import is far quicker and afterwards the photos are available instantly, even in large photo collections. Being able to disable this re-indexing when it’s not even required much of the time would be a huge improvement.

Using an external editor is not a viable solution. I take large batches of photos, then work through them in the editor, have a close look, try some edits and decide which photos I want to export, so having to keep moving between software is just clumsy.

Well @Johnmcl7, if you use Photolab together with PhotoMechanic you don’t need to let Photolab open a library with a thousand images at all. Say you really have 1000 images: you cull them with PM first, and then you can very easily select say 200 images at a time in PM and open them in Photolab. In PM it doesn’t really matter how many there are.

If you have both PL and PM open at the same time it is very quick to switch between the applications with Alt-Tab. In Picture Library mode in PL you can go back to a set of images at any time through the “External Selections”. That way you will never feel that PL is setting the pace in your workflow, as it does when you are totally dependent on PL alone.

When I was using Lightroom (which I started using in 2007 and used for quite a few years), the only way to increase responsiveness was to decrease the size of the previews. That was a recommendation by Scott Kelby himself, arguably the No. 1 Lightroom guru, who has written quite a few books about using LR. With PM, PL never has to fall back on decreasing the size of the previews in order to cope with the performance problems that Lightroom still has to some extent. That is scaling, and a productivity that was hard to get with LR.

With LR I tried to handle XMP metadata but gave up because it was just too inefficient.

If we read what Scott Kelby himself writes about using Lightroom, or Adobe Camera RAW for that matter, as a sports photographer, we all understand why. No sports photographer uses Lightroom, because the productivity and effectiveness are just not there. This example shows what scaling really means in this world of software:

My Sports Photography Workflow (so far) - Scott Kelby’s Photoshop Insider

Lightroom, and Photolab used the same way as Lightroom, as a monolithic solution “for it all”, is bound to be a big compromise: there has to be a trade-off between the initial preview image quality and the speed of handling the assets in your image library. It will never be as productive and fast as a solution with two integrated, specialized applications. With PL + PM you get both high-resolution previews in the RAW converter and a blisteringly fast image library. That is the first expression of scaling here.

The second is the metadata management efficiency of PM, which, unlike Lightroom, is optimised solely for super-efficient XMP metadata management. The third expression is the possibility to use as many image catalogs as you wish and to run joint searches over as many catalogs at a time as you like, in any combination. Compared to that, both Lightroom and Photolab on its own are very dull knives.

So, professionals and/or people who value their time prefer to spend a couple of hundred dollars extra on a lifelong license for a specialized, super-optimized tool for culling, metadata and asset management instead of using a compromise “trying to do it all” solution, with the limitations such solutions always seem to have.

At least Photolab since version 6 seems to be a bit more migration-ready than before, since it is now the XMP files that really own the metadata (or the JPEG, TIFF and DNG files for that matter, if they happen to be your master files). Photolab is always “disaster ready”. Lightroom, as I remember it, wasn’t, because LR saves all that data in the catalog database. In the Lightroom case, exporting the XMP data was an active action by the user, and if you had a single-point-of-failure crash and had forgotten to secure your life-saving XMP export on a regular basis you were “toast”, which has happened to others besides me in those earlier Lightroom days.

When using either Photolab or PhotoMechanic as the metadata owner, that cannot happen, since they are not single points of failure in that respect. In Photolab, the DOP files and the XMP files together secure the integrity of both the edit metadata and the XMP metadata and prevent the “single point of failure” disasters that, to my knowledge, can still happen with Lightroom. Both Photolab and PhotoMechanic distribute risk to a higher degree than a single-point-of-failure solution like Lightroom. Even in Capture One you have an option for better risk management, because there you can skip the database altogether by using “sessions” instead, which also spreads the risk.

The good thing with Photolab is that you have a real scalability option that some people have already been using for many years. It evolved because DxO Optics Pro and Photolab lacked an asset management solution for so long.

The case of Lightroom is pretty different. Many Lightroom users are still convinced they already live in the best of computer software worlds, so they haven’t really looked elsewhere for asset management solutions.

Kelby’s paper is nicely done and clarifies that he is not concerned with editing images, but with selecting a bunch to be handed over to a client in the shortest time possible. Such is the world of sports/news photography…which is not the world that DxO is designing its applications for.

Not being a sports/news photographer doesn’t mean that metadata is irrelevant though, and it does not mean at all that reliability is irrelevant either. DxO has accepted that keywording is relevant for many users, has added geotagging too, but has neglected dealing with reliability for a long time. I’m happy to see that PhotoLab now starts to have such features and I suppose that we’ll see, over the next few releases, some progress. But I’ll not hold my breath.

I don’t think you understand the terms you are using. If you need an additional application to handle more files then, by your own definition, Photolab does not scale well with larger numbers of files.

Most of what you’ve typed is wrong and it’s not remotely helpful. I will explain the problem with Photolab in a single sentence: even after Photolab’s first-time read, it takes up to half an hour to open a folder that opens instantly in Lightroom. I don’t care what Scott Kelby thinks about Lightroom or what he uses. The question I asked is whether I can stop Photolab re-indexing my photos every time and being painfully slow to use, and it seems the answer is that I can’t. I am still on the old standalone version of Lightroom as I refuse to pay a subscription, and it doesn’t have the issues Scott Kelby describes. I’ve just been at a bike race and took over 5,000 photos, which Lightroom imported with ease, and I could work through them without issue. I’m not a sports photographer nor a professional, so if LR can handle that then that’s good for me, and it certainly does it far better than Photolab.

I have used Lightroom for many years and for many photos, and in that time I have never had a single issue with its catalogues because I have a proper backup solution. I would much rather have a workable option like that than spend hours watching Photolab appear to have crashed and trying to track what it’s doing through Task Manager.

Unless you have an answer to stop Photolab being so slow to open that doesn’t involve having to buy additional applications, your replies are of no benefit.

@Johnmcl7 you have discovered one of the features that attracted me to DxPL in the first place. I disliked the need for an explicit selection of images to be imported before Lightroom (trial copy only, I never chose to purchase the product) would allow me to start editing. That was many years ago!

DxPL implicitly imports as you browse which does mean that you shouldn’t idly browse image directories in DxPL because they will all wind up in the database.

When working on a new shoot, the current DxPL architecture comes into its own (except perhaps with very large numbers of images), but when revisiting previously edited directories the constant rendering, re-rendering, re-re-rendering, etc. can begin to grate.

It consumes user time, machine resources and power but it ensures that the user can see the current state of the edits without having to export, at least up to a point!

That “point” is the fact that certain elements will not be rendered until the image is viewed at 75% magnification or more, and even at those magnifications ‘DeepPrime’ and ‘DeepPrime XD’ (and ‘Prime’, I believe) will only ever be rendered in the small ‘DxO DeNoising’ window!

So unless DxO can be persuaded to provide a facility to allow the user to control automatic importation and automatic rendering @Musashi then users must accommodate the current architecture with bigger CPUs for rendering (the GPU is essentially only used for DP and DP XD exporting) and bigger electricity bills!

@Stenis was suggesting using additional software to manage (smaller) batches of images submitted to DxPL as a suitable work flow (workaround).

I created a BULK test of 11,000 images some time ago. It takes a while to load those images, then go back over the loaded (into the database) images, apply the default presets and render them for review. Every time you view the images or try to locate an image, that rendering and re-rendering takes place. Hence DxPL needs to add to the simplicity of its approach and allow users to control the processes of importation and rendering @Musashi.

With such controls in place it then becomes possible to add an additional ‘Render’ command to overcome the limitations of the 75% magnification initially and then a further ‘Render’ option to include full inline noise reduction, if the user has the time or hardware to support such options.

But in the meantime we have what we have!

When I look at both Lightroom and PhotoLab, they do the same thing when I direct them to a folder of images.

First encounter with folder/files

- Read all files and copy metadata to a catalog/database

- Create previews based on what I set as default presets

→ Lightroom can be told to not do this automatically

→ PhotoLab just does it, no matter if that folder contains tens or thousands of files, sucking up processing resources and making DPL almost unresponsive to user input

Revisiting folder/files

- Check all files for metadata changed by other apps, add a badge if MD was changed

- Check physical presence of files and display a badge or question mark to a stand-in
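The revisit checks listed above can be illustrated with a small folder snapshot comparison. This is only a minimal sketch of the general idea, not how PhotoLab or Lightroom actually implement it: the function names `snapshot_folder` and `diff_snapshots` are made up for illustration, and using file size plus modification time as the change signal is an assumption.

```python
import os
from typing import Dict, List, Tuple

# Hypothetical snapshot format: file name -> (size in bytes, mtime in ns).
Snapshot = Dict[str, Tuple[int, int]]


def snapshot_folder(folder: str) -> Snapshot:
    """Record size and modification time for every file in one folder."""
    snap: Snapshot = {}
    with os.scandir(folder) as entries:
        for entry in entries:
            if entry.is_file():
                st = entry.stat()
                snap[entry.name] = (st.st_size, st.st_mtime_ns)
    return snap


def diff_snapshots(old: Snapshot, new: Snapshot) -> Dict[str, List[str]]:
    """Compare a stored snapshot with a fresh one."""
    return {
        # Present now, unknown before: would need a first-time import.
        "added": sorted(new.keys() - old.keys()),
        # Known before, gone now: would get the question-mark stand-in.
        "missing": sorted(old.keys() - new.keys()),
        # Same name, different size or mtime: touched by another app.
        "changed": sorted(
            name for name in new.keys() & old.keys() if new[name] != old[name]
        ),
    }
```

Note that even this cheap check has to touch every file in the folder once per visit, which is why a folder with thousands of images cannot be confirmed as unchanged for free. An explicit "sync now" command would simply move the moment when that cost is paid.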

As we can see, both apps do the same, but the difference is in how they do it

- Lightroom can be told to not create previews upon import and manages processing resources in a way that doesn’t affect responding to user input

- PhotoLab does its thing as if it were the only thing that existed

Imagine PhotoLab adopting ways to a) hold rendering and b) control resource consumption…and we’d be freed of at least two of its annoyances.

Explicit or implicit import?

- I prefer explicit import…and it would help PhotoLab to avoid getting stuck to (re)discovery like a fly on flypaper.

- BTW: PhotoLab can do explicit imports through its “index a folder” feature, which, sadly, cannot be used to fix the database yet.

Answer is you can’t.

This is one of the two most tedious bottlenecks in PhotoLab.

Wouldn’t a cache of every render that has already been done solve this issue? I’m leaving storage space aside here: I think a smoother experience would be well worth the cost of additional SSD space. Or would reading that cache take too much time as well?
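To make the cache idea concrete, here is a minimal sketch of an on-disk render cache. It is purely illustrative and says nothing about PhotoLab’s internals: `cache_key`, `cached_render`, the `.preview` file extension and the `render` callback are all hypothetical names, and treating the edit settings as an opaque byte string is an assumption.

```python
import hashlib
import os
from pathlib import Path
from typing import Callable


def cache_key(image_path: str, settings: bytes) -> str:
    """Build a key that changes when the file or its edit settings change."""
    st = os.stat(image_path)
    h = hashlib.sha256()
    h.update(os.path.abspath(image_path).encode("utf-8"))
    # Size and mtime stand in for the file contents (assumption: good enough).
    h.update(f"|{st.st_size}|{st.st_mtime_ns}|".encode("utf-8"))
    h.update(settings)
    return h.hexdigest()


def cached_render(
    image_path: str,
    settings: bytes,
    cache_dir: str,
    render: Callable[[str, bytes], bytes],
) -> bytes:
    """Return a stored preview if one exists, otherwise render and store it."""
    cache = Path(cache_dir)
    cache.mkdir(parents=True, exist_ok=True)
    entry = cache / (cache_key(image_path, settings) + ".preview")
    if entry.exists():
        return entry.read_bytes()       # cache hit: no rendering at all
    data = render(image_path, settings)  # cache miss: the expensive step
    entry.write_bytes(data)
    return data
```

In a scheme like this, a cache hit costs one `stat` call and one file read per image, so whether it beats re-rendering depends mostly on how expensive the render is compared with reading a preview from the SSD.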

Scaling in this respect means that the solution scales and that you have an option to get the best of two worlds really: Photolab has for quite some time been the converter giving the best image quality and through the integration with PhotoMechanic we get a real “power couple” that scales beyond what any other option can offer when it comes to image quality and asset management with superior metadata management. This combo takes us beyond what any integrated monolithic application like Lightroom, Capture One or for that matter Photolab can offer.

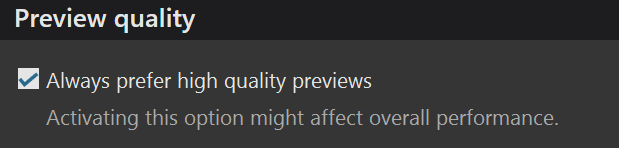

The reason this is possible is that you use two different applications which, in this setup, don’t need to suffer from the kind of performance issues and compromises that all integrated converters/picture libraries suffer from. The compromise is speed vs. preview resolution. PhotoMechanic never needs to render images on the fly after the initial preview ingestion and indexing. It can handle millions of images without experiencing performance problems. If you control the number of images in the batches you open with Photolab at a time, you will not experience any performance issues, even with the Photolab setting that always gives you “high quality” previews, and without paying the performance penalty that the “Preference - Preview quality” menu warns you about.

Instead of a sometimes mediocre monolithic “doing it all” solution relying on PictureLibrary for the asset management, we just use Photolab for what it does best and add probably the best asset management there is to take care of the rest. For most people Photolab really is fine, but there are users who need more efficient tools, for example for more efficient metadata maintenance than Capture One, Photolab PhotoLibrary or Lightroom can offer. I think PhotoMechanic Plus is very cheap compared to what it gives you. It takes just a few hours of professional use to pay for the US$329 it costs today. That is how much time it really saves.

I bought my version 6 for $220, and after three years it is still the current version. Version upgrades are not all that common, but there have been numerous free maintenance updates during these three years. So even if you only do serious metadata maintenance work privately, PM Plus is worth considering for any user who values their time.

I’m going through a trial of PL and current LR, after having used LR 5.7 for about a decade. I also used Photo Mechanic many moons ago when I did this as a business, mostly sports. I still mostly shoot sports events and with modern mirrorless cameras, that means I almost always have several thousand images to process from a shoot.

I have always found LR to be painfully slow at importing and creating previews. And if you don’t wait for it to do this, you end up having constant delays as you do an initial pass through the images. PL has thus far seemed quicker to me at this and I can usually cull through things with pretty good speed, looking at images full screen. Maybe I just haven’t run into the things that are being reported in this thread yet.

I would like to use either LR or PL without resorting to Photo Mechanic as another product. One appealing thing to me in terms of speed is that I’m finding that I can cull pretty quickly in the camera and give an initial star rating to the best images. Upon initial load of the images in PL, I can filter on this rating and I’ve immediately eliminated a lot of images I don’t even need to look at. Is it still processing these behind the scenes? Sounds like it is, but I’m not seeing a performance hit. In contrast, there is no apparent way in LR to import based on star ratings or any other criteria, so if I have 3,000 images from a shoot but have only starred 300, I’m still waiting for LR to import the whole thing unless I have something like Photo Mechanic to preprocess and put them in separate folders. I’m not currently creating separate folders, I’m just relying on the ratings and picked/unpicked metadata to sort through the images in the folder. I seriously need to get to the point where I physically delete the ones that have no value, but I’ve always just kept everything so it’s a hard habit to break.

Being able to disable this re-indexing when it’s not even required much of the time would be a huge improvement.

Yes. Someone mentioned that many prefer the way PL works, and that’s fine, but providing a simple option to allow for alternative workflows by simply opening whatever image is passed to it would be very welcome.

I don’t have a need for complex indexing and I organize my images into directories on a file server which is duplicated in the cloud for backup. Many times I have images organized in such a way that there are 1000 or so in one directory. I can browse them quickly in the OS’s file manager or other organizational tools and send them individually to PL for processing, but then PL insists it also needs to be your file manager and indexing tool, so things slow down while those 1000 images are unnecessarily (in my case) loaded and indexed by PL. If I want to prevent this, I need to add an extra step to copy the files I want to process into a temporary empty directory so PL doesn’t index and load everything.
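That extra copy step can at least be scripted. The following is a minimal sketch, assuming edits should travel with the images as `.dop` and `.xmp` sidecar files stored next to them; the function name `stage_images`, the `pl_staging_` prefix and the two sidecar naming patterns it checks are assumptions for illustration, not documented PhotoLab behaviour.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Iterable

# Assumed sidecar extensions; adjust to whatever your tools actually write.
SIDECAR_SUFFIXES = (".dop", ".xmp")


def stage_images(image_paths: Iterable[str], prefix: str = "pl_staging_") -> Path:
    """Copy selected images (and any sidecars) into a fresh temp folder."""
    staging = Path(tempfile.mkdtemp(prefix=prefix))
    for raw_path in image_paths:
        src = Path(raw_path)
        shutil.copy2(src, staging / src.name)  # copy2 keeps timestamps
        for suffix in SIDECAR_SUFFIXES:
            # Check both "IMG_1.CR2.dop" and "IMG_1.dop" style names.
            for sidecar_name in (src.name + suffix, src.stem + suffix):
                sidecar = src.with_name(sidecar_name)
                if sidecar.is_file():
                    shutil.copy2(sidecar, staging / sidecar.name)
    return staging
```

Pointing PL at the returned folder means it only sees the handful of files you picked. The drawback stays the same as with the manual approach: any sidecars written in the staging folder have to be copied back to the original directory afterwards, and files with identical names from different source folders would overwrite each other in this simple version.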

I like PL and prefer it to tools like LR because of its features and higher quality output, and it’s great that some folks like its built-in indexing and file management feature, but it would be nice if it also offered a simple option to operate as a tool in a pipeline and be more workflow-agnostic.

Though it is not disputed that PL is slow in loading a folder it is not to blame for instigating that action. It depends on what is being sent to it.

Some image viewing software and Windows File Manager will send an entire folder to PL when selected image/s are your intention. Whereas others (e.g. Fast Raw Viewer and XnViewMP) will just send those images.

Regardless of that knowledge as I do use the above bracketed software, I still favour a small folder (“Photos In” in my case) which I use for small portions of work in progress.

I do, however, occasionally edit old files which may be in large folders. I would never browse to such a folder in PL as that would take ages to load, so I send the files from the software mentioned above and I can immediately get on with editing.

Exactly, it is much better to use for example XnView to open just selected images and not the whole folder in Photolab. XnView is also free if you don’t want to contribute, so there isn’t even any opening for complaints about expensive extra software. The 29 Euro they kindly ask you to contribute is, I think, a low price for what you get. Personally, I love it and have a lot of use for it. It is indispensable for me since it has really strong support for metadata display that is hard to get anywhere else (EXIF, IPTC, XMP and GPS, though it is not as good for editing).

Since Photolab also saves all external searches in the “External Searches” list, it is also very easy to go back to searches one has done and even turn them into “Projects” in Photolab with just these selected images.

So, there is really very little sense today in complaining about how slowly Photolab opens an image folder. With XnView or some other viewer with an “Edit with …” function there is no need at all to open a full folder with Photolab unless you choose to do so, and then it is an active choice made even though you know there are often better alternatives.