I believe it will become more and more common. There are also e-ink displays that can run on battery for a year: https://inkposter.com/

Although not in HDR yet.

PL can work in a very large colour space that encompasses all the data produced by every camera DxO has tested.

Now, if you export your photo to a format like 16-bit TIF in an HDR colour space like Rec. 2020, you will have an HDR image.

I am open to being corrected, but I believe this is what you want. You may not see the HDR image on your screen, but the data is still there and will be visible when shown on an HDR display.

Look into colour management in video editing software like DaVinci Resolve, which has been doing this for years and can produce HDR video while you edit on an SDR display.

It seems to me that “HDR” has become marketing speak for “wow” or “super” or “the latest and greatest”.

But let’s look at what it actually means, rather than what folks try to make it mean.

The term “dynamic range” is a measure of the distance between the darkest thing a sensor can record and the brightest thing a sensor can record.

The human eye is reckoned to be able to record a range of around 20 stops. In other words, the brightest level that can be viewed and at which we can discern detail is just over one million (1,048,576) times brighter than the darkest level. If we try to view an extremely bright subject, the eye and brain will adapt what they can perceive so that we are unable to view detail in darker parts of the scene (and vice versa). So, at any given moment, the human eye can only process a “slice” of the available light range.
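Since every stop doubles the light, the “one million times” figure is just 2 raised to the number of stops. A minimal sketch of that arithmetic (the function name is only illustrative):

```python
def stops_to_ratio(stops: float) -> float:
    """Brightest/darkest luminance ratio spanned by `stops` stops (each stop doubles the light)."""
    return 2.0 ** stops

# 8 stops (a typical print), 14.6 stops (the D850 figure quoted later), 20 stops (the eye)
for stops in (8, 14.6, 20):
    print(f"{stops:>5} stops -> {stops_to_ratio(stops):,.0f} : 1")
```

For 20 stops this gives 1,048,576 : 1, the figure quoted above.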

But what the human eye sees is highly and rapidly processed by the brain, which is capable of “stitching” different parts of a scene so that what we remember is seeing “everything” at the same time. Similarly, this is how we see everything as sharp - the brain does a “focus stack” without us even realising it.

Since we want to see everything, from black to white, we can say that the range is actually one step - from black to white.

But, in recording that “step”, we want to be able to discern all the subtleties of tonality between black and white and this is where we have to resort, for digital cameras, to defining how many bits it takes to discriminate and render those intermediate tones.

Not forgetting that we only have two limits to the range, black and white, or 0 and 1.

In actual fact, all you need to record an image that contains just black and white is 1 bit. But what we want is to be able to render smooth gradations across all the levels in between.

If we use 8 bits, then what we are doing is dividing up that one step from black to white into 256 different levels, which can cause posterisation in long gradations like clear skies.
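To see why skies posterise, here is a rough sketch that quantises a smooth ramp and counts how many distinct code values survive. The sky is a hypothetical example: it is assumed to run from 40% to 45% of full scale across 4,000 pixels.

```python
def distinct_levels(bits: int, start: float, end: float, width: int) -> int:
    """Count the distinct code values a smooth ramp from `start` to `end`
    (fractions of full scale) is quantised to across `width` pixels."""
    max_code = 2 ** bits - 1
    codes = {round((start + (end - start) * x / (width - 1)) * max_code)
             for x in range(width)}
    return len(codes)

# Hypothetical sky: 40% to 45% of full scale over 4,000 pixels
for bits in (8, 16):
    n = distinct_levels(bits, 0.40, 0.45, 4000)
    print(f"{bits}-bit: {n} levels, each band about {4000 / n:.0f} px wide")
```

At 8 bits the ramp collapses into only 14 levels, so each band is a few hundred pixels wide and easy to see; at 16 bits there are thousands of levels and the bands are a pixel or two wide.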

If we use 16 bits, then we are dividing that same one step into 65,536 different levels.

If we use 32 bits, then we are dividing that same one step into 4,294,967,296 different levels.
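The level counts above are simply 2 to the power of the bit depth. The sketch below also includes 14 bits, the D850 raw depth mentioned further down. It treats each bit depth as integer codes, as this post does; the 32-bit modes in many editors store floating-point values instead, which this sketch does not model.

```python
def levels(bits: int) -> int:
    """Number of distinct values an unsigned integer of `bits` bits can hold."""
    return 2 ** bits

for bits in (1, 8, 14, 16, 32):
    print(f"{bits:>2} bits -> {levels(bits):,} levels")
```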

But, however many intermediate tones we want to record, the human eye is reckoned to be limited to distinguishing around 10,000,000 steps, and that is for a colour image. This equates to around 24 bits, which is why, with RGB pixels, the camera only needs to record 8 bits per colour to exceed the level of detail perceived by the human eye.
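The “around 24 bits” figure comes from a base-2 logarithm. This sketch takes the 10,000,000 figure quoted above at face value:

```python
import math

def bits_needed(count: int) -> int:
    """Smallest whole number of bits that can index `count` distinct values."""
    return math.ceil(math.log2(count))

eye_colours = 10_000_000               # figure quoted in the post, taken as given
print(math.log2(eye_colours))          # roughly 23.25
print(bits_needed(eye_colours))        # 24
print(f"{2 ** (3 * 8):,}")             # 8 bits x 3 channels = 16,777,216 colours
```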

Now, my Nikon D850 is stated to record RAW images at 14 bits per channel, which means 16,384 different levels of each of the three colours.

But, still, we are talking about the number of steps between black and white. The range is still from black to white, from 0 to 1.

The link between the phrase “32 bit” and “HDR” has absolutely nothing to do with range and everything to do with the smoothness of transition between two levels.

My Nikon D850 is capable of a dynamic range, from darkest recordable detail to brightest recordable detail, in a single shot, of 14.6 stops. But here we are talking about a range of light, not how many bits it takes to record intermediate detail.

Apart from marketing kidology, the number of steps between darkest and lightest is not directly related to the number of stops. But, even if it were, you definitely don’t need 32 bits to cope with the 24,833 possible steps between black and white that a 14.6-stop image contains.
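Working the argument through: 14.6 stops gives 2^14.6 ≈ 24,833 steps (truncated, as in the post), and the number of bits needed to index that many steps is the base-2 logarithm rounded up:

```python
import math

stops = 14.6                          # D850 single-shot dynamic range quoted above
steps = int(2 ** stops)               # 24,833, truncated as in the text
bits = math.ceil(math.log2(steps))    # 2**14 = 16,384 is too few, 2**15 = 32,768 is enough
print(steps, bits)                    # 15 bits would suffice, far short of 32
```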

So, in summary, assuming one bit per stop, what is the point in using a 32-bit workflow to process a 14.6-bit image?
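The “one bit per stop” assumption matches how a linear raw encoding spends its codes: each stop down halves the light, so it also gets half as many code values. A minimal sketch, assuming a purely linear, noise-free encoding (in a real raw file the deepest stops are limited by noise, not just by code count):

```python
def codes_per_stop(bits: int) -> list[int]:
    """Code values falling in each stop of a linear encoding, brightest stop first.

    Stop k covers codes [2**(bits-k-1), 2**(bits-k)), so each stop down
    has half as many codes as the one above it. Code 0 is not counted.
    """
    result = []
    top = 2 ** bits                  # exclusive upper bound of the code range
    while top > 1:
        bottom = top // 2
        result.append(top - bottom)  # number of codes in [bottom, top)
        top = bottom
    return result

per_stop = codes_per_stop(14)
print(per_stop)   # 14 stops: 8192 codes in the brightest, down to 1 in the darkest
```

So a 14-bit linear file covers 14 stops, but half of its 16,384 codes describe the brightest stop alone.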

The “Inkposter” gizmo seems to be described as “like paper”, which means reflective, not transmissive, and reflective media are normally accepted to be limited to about 8 stops, so I don’t see how it can possibly cope with 32-bit or “HDR” images, which seem to rely on backlighting.

Let me add to that. HDR simulates a higher dynamic range than the output device can show.

Or the input device.

George

So, you are saying that HDR is showing blacker than black and whiter than white?

No. It’s showing the blacks in more detail. It’s as if one part of the image is exposed for the blacks and another part for the whites. That’s what you are doing when creating an HDR image.

Look at the image in the thread “Recovering blown highlights - is there such a thing as a correct way?” (post #10 by Wolfgang). The only way to capture that image would have been with HDR: one image exposed for the light area, one for the dark area and possibly several in between. After merging, you have an image with a much wider dynamic range than the sensor could capture, but it is still shown on an output device with a lower dynamic range.
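The merge step can be sketched as a weighted average: divide each linear pixel value by its exposure time to get relative scene brightness, and skip frames where that pixel is clipped or almost black. This is a simplified version in the spirit of classic radiance merging, not what PhotoLab or any particular program does. It assumes linear, already-aligned frames with no ghosting, and the `clip`/`floor` thresholds are arbitrary choices.

```python
def merge_exposures(frames, exposure_times, clip=0.98, floor=0.02):
    """Merge bracketed linear exposures into relative scene brightness.

    frames: list of equal-length lists of linear pixel values in [0, 1].
    exposure_times: shutter time in seconds for each frame.
    """
    merged = []
    for i in range(len(frames[0])):
        total, weight = 0.0, 0.0
        for frame, t in zip(frames, exposure_times):
            v = frame[i]
            w = 1.0 if floor < v < clip else 0.0   # ignore clipped or near-black values
            total += w * v / t                      # value / time = relative brightness
            weight += w
        if weight == 0.0:
            # No usable frame: fall back to the one whose value is nearest mid-grey.
            j = min(range(len(frames)), key=lambda k: abs(frames[k][i] - 0.5))
            merged.append(frames[j][i] / exposure_times[j])
        else:
            merged.append(total / weight)
    return merged

short = [0.001, 0.05]   # 1/100 s: shadow nearly black, highlight fine
long_ = [0.1, 1.0]      # 1 s: shadow fine, highlight clipped
print(merge_exposures([short, long_], [0.01, 1.0]))   # about [0.1, 5.0]
```

The merged highlight is about 50 times brighter than the shadow (roughly 5.6 stops), even though neither frame recorded both with detail.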

George

An example of ‘true’ HDR that’s not a computer running all the time is a recent TV monitor (e.g., OLED). (It does have to be on, of course.) The difference between that and SDR is mostly (much) brighter highlights. It’s noticeable in TV shows on Amazon Prime and other streaming sources. Admittedly it’s not used much for still images (yet).
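The “much brighter highlights” can be expressed in stops above SDR white. This is a rough sketch only. The 100 cd/m² SDR reference white and the peak brightness values are assumed, illustrative numbers, not measurements of any particular display.

```python
import math

def headroom_stops(peak_nits: float, sdr_white_nits: float = 100.0) -> float:
    """Stops of highlight headroom a display has above SDR reference white."""
    return math.log2(peak_nits / sdr_white_nits)

for peak in (400, 1000, 1600):   # hypothetical display peaks in cd/m² (nits)
    print(f"{peak} nits: {headroom_stops(peak):.1f} stops above SDR white")
```

Under these assumptions, a 1,000-nit display has roughly 3.3 extra stops for highlights.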

So, according to your theory, there are no blown-out highlights, because the camera will always record the brightest value as 1? I think something is flawed here: it is not only about the intermediate steps, but also about a darker darkest tone and a brighter brightest tone.

Personally, I would also be happy with prints, but there is no denying that there is technological advancement and that current software should keep up with these advancements. Otherwise we could just stick with film.

This is exactly what HDR is supposed to do because it simulates what the human eye is actually doing all the time. So HDR is trying to emulate what the eye sees.

No camera can capture the full dynamic range from the darkest blacks to the brightest whites while keeping all the detail in-between. The human eye cannot do that either (you have a pupil that opens and closes just like a lens aperture).

An HDR image merges multiple exposures together to generate an image with all the detail from dark to light that cannot be captured with a single image, just like the eye does automatically for you as you look at different parts of a very high contrast scene.

These comments seem to be about traditional or classic HDR images tone mapped to regular SDR displays. The OP, @maderafunk, and @jch2103 are talking about something related, but quite different (see link below).
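“Tone mapped” here means compressing a wide range of scene brightness into the 0 to 1 range an SDR display can show. The sketch below uses the extended Reinhard global operator, one well-known published curve. It is an illustration of the idea, not a claim about what PL, LR or any HDR software actually uses.

```python
def reinhard(luminance: float, white: float) -> float:
    """Extended Reinhard tone curve: L * (1 + L / white**2) / (1 + L).

    Maps relative scene luminance (>= 0) into display range [0, 1]; a value
    equal to `white` maps exactly to 1.0 and anything brighter is clipped.
    """
    mapped = luminance * (1.0 + luminance / (white * white)) / (1.0 + luminance)
    return min(mapped, 1.0)

# Hypothetical scene spanning 0.01 to 64 (about 12.6 stops) squeezed onto an SDR display
for lum in (0.01, 0.1, 1.0, 10.0, 64.0):
    print(f"{lum:>6} -> {reinhard(lum, white=64.0):.3f}")
```

Shadows are left almost linear while highlights are compressed progressively harder, which is why classic HDR images can look flat when pushed too far.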

In his article he has an example, an image made in Nepal with a dynamic range of 15 stops. Where did he get that from? What is the dynamic range of an image per se? Without editing, it can’t be more than the camera’s capability. At the top it’s bounded by the maximum of 255, the clipping point. On the low side, one can simulate a higher dynamic range by making the darker parts more detailed and visible.
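The clipping at 255 can be shown with a minimal sketch. For simplicity it assumes a linear 8-bit encoding. Real JPEGs apply an sRGB gamma curve, which gives the shadows more codes and changes the scene range covered, so treat the stop figure as illustrative.

```python
import math

def encode_8bit(relative: float) -> int:
    """Linear 8-bit encoding: 1.0 is full scale and anything brighter clips at 255."""
    return max(0, min(255, round(relative * 255)))

# Highlights at 1x, 2x and 4x full scale all land on the same code: their detail is gone.
print([encode_8bit(v) for v in (1.0, 2.0, 4.0)])
# Range from the smallest non-zero code to the clipping point, in stops
print(math.log2(255 / 1))   # just under 8 stops
```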

George

Well, this has certainly been my experience. If I can make images like these…

… from single SOOC RAW files like these…

… why would I ever need to combine multiple shots?

So, if I can already produce images like the above in PL, showing the full 14.6-stop range of my sensor, I repeat my question: what is missing from PL?

Nothing. Except when dealing with more extreme images. Your highlights are determined by the lamps. That would be different if it was the sun.

George

I’m still not sure, but is “HDR merge” meant in the title? I can’t find anything like “hdr marge”.

George

One of the first things you should learn about photography is to never include the naked sun in the image. Why? because it can burn a hole in your sensor or, worse, your eye.

I found this article which shows the kind of damage photographing the sun can do.

We just had a partial solar eclipse and it took a 16 stop ND filter for me to be able to (relatively) safely glimpse the sun with the naked eye, but only for a matter of a second or so.
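For scale, a 16-stop ND filter cuts the light by a factor of 2^16. ND filters are also commonly rated by optical density, which is the same reduction expressed as a base-10 logarithm:

```python
import math

def nd_factor(stops: float) -> float:
    """How many times an ND filter of `stops` stops reduces the light."""
    return 2.0 ** stops

def optical_density(stops: float) -> float:
    """Optical density (the 'ND x.x' style rating) equivalent to `stops` stops."""
    return stops * math.log10(2)

print(f"{nd_factor(16):,.0f}x less light, density {optical_density(16):.1f}")
```

So the filter let through about 1/65,536 of the sunlight, roughly an ND 4.8.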

Then there’s the question of how “HDR” you want your image to look.

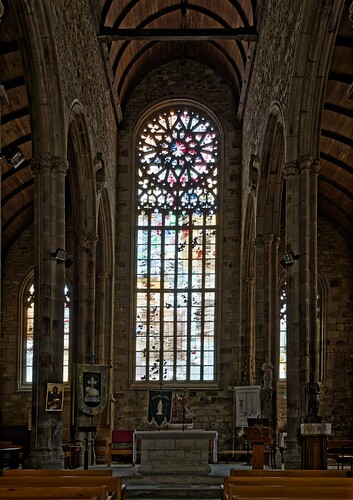

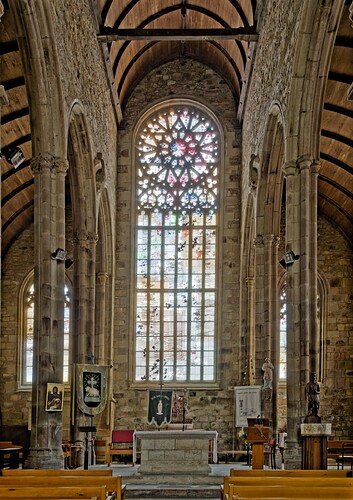

You can have something like this…

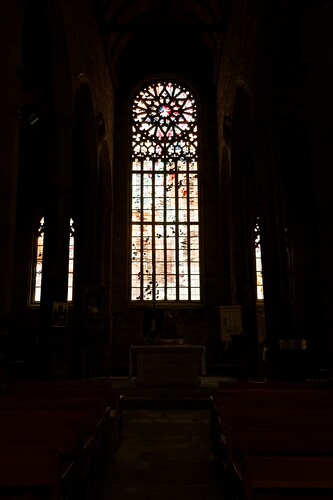

… which shows everything down into the shadows as being about the same luminosity. Or you can have my original preference…

… which shows what it was like to be in the church, which was very poorly lit inside, with the sombre feeling that I experienced.

With a DSLR you look at a projection on the matte focusing screen; you don’t look straight into the sun. And the sensor is only exposed for a short time.

I don’t know how that works out for the sensor in system (mirrorless) cameras.

But I see and have seen a lot of images with the sun included.

I think HDR is meant for those circumstances where there is too much light, for example shooting against the sun. In your pictures that’s not the problem.

George

Yes, that title is bonkers. I think the OP is just wondering whether DxO PL, à la LR, will add a new feature for HDR displays.

From what I’ve read, HDR displays are brighter than normal displays. The colors or color range are the same. What does a function in PL or LR add?

George

Good question. Maybe it’s because my Apple displays are P3 (or at least that’s what I was told), but I couldn’t see any difference in the images in the Adobe blog article.

My 28" Asus sRGB monitor is capable of HDR and, to be honest, having tried it, I struggle to see the difference. Maybe it’s my old eyes. As for my aRGB EIZO 2740 monitor, I have no idea whether it does HDR, and having tried HDR once, I’m not interested in finding out. I only use my EIZO monitor for adjusting my photographs ready for printing, and I am pretty certain prints are not capable of HDR. A print might be able to show the detail of multiple images, but I very much doubt you would be able to reproduce the brightness. My prints always look slightly darker than my aRGB monitor.

I can appreciate that some people will want to view their images on HDR monitors. Not being interested in HDR myself, I still suspect that PhotoLab is quite capable of reproducing the same range. I stand to be corrected on that one.