@John-M The implication is that AI will replace old-fashioned developers, and that may be partially true, but a tale from the past might (or might not) be appropriate.

I was the UK technical lead for the implementation/installation of a Voice Mail system with a UK mobile start-up.

The product had been written for PacBell, a fixed-wire system, and added to their US exchange system, where the “Message Waiting icon” was a light on the handset turned on by the exchange. Not exactly cutting edge, and it wasn’t going to work with mobile handsets, so the MWNS (Message Waiting Notification System) was born. It fell to me to design that sub-system, and the design went to the UK development team to be written.

The individual who set about writing it decided he would try to write a strictly “GO TO-less” COBOL program, with IFs nested to a truly ridiculous depth. Meanwhile, our Systems Software development group had changed the workings of DMSII to handle new structure types.

The result was a program that attempted to handle ‘Abort Transaction’ exceptions X levels deep (as X tends to a large finite number) in the program logic, i.e. it was never going to work!

With the delivery deadline approaching we needed a solution so “muggins” (me) had to rewrite, sorry write, the product from scratch with less than 3 months to delivery.

It turned out to be 50,000 lines of COBOL. I had the advantage that I knew the design intimately and had written a library of error-handling code for another customer that had been in use for years, and obviously we … didn’t make the deadline! The first version hit the customer’s test system less than 3 weeks from handover and failed the first tests.

We were actually about three weeks late with the delivery.

So the lessons to be learned are:

- If you want a job done then do it yourself, which becomes eminently possible with the help of AI.

- AI is only as good as the models on which it is based (trained). What would have happened if it had been trained on the code of the original coder on my project!?

- AI needs to be kept up-to-date, which even I wasn’t with respect to the implications of the changes to record and structure locking in the new DMSII release.

The development test system was being used by multiple programmers at the same time, and they were creating structure locks all over the place. You can’t handle an ‘Abort Transaction’ exception successfully when buried deep in the logic of a program: you need to exit to a higher level in the program, reset any fields that were changed by the attempt to process the current (failed) transaction, and start the transaction again. In other words, you build the program to expect such occurrences.
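For anyone who hasn’t met the pattern, here is a minimal sketch of it in Python (not the COBOL that was actually written). The names `AbortTransaction`, `run_transaction` and `flaky_work` are made up for illustration, and a plain dictionary stands in for the program’s working fields.

```python
import copy


class AbortTransaction(Exception):
    """Hypothetical stand-in for the database's 'Abort Transaction' exception."""


def run_transaction(state, work, max_attempts=5):
    """Run work(state) as one transaction, restarting from the top on abort.

    The exception is caught here, at the outer level, never deep inside work().
    Returns the number of attempts it took.
    """
    for attempt in range(1, max_attempts + 1):
        snapshot = copy.deepcopy(state)  # remember the fields before the attempt
        try:
            work(state)                  # may raise from any depth of nesting
            return attempt
        except AbortTransaction:
            state.clear()
            state.update(snapshot)       # reset whatever the failed attempt changed
    raise RuntimeError("transaction still aborting after retries")


# Usage: a piece of work that is aborted twice before it succeeds.
calls = {"n": 0}


def flaky_work(state):
    state["messages_waiting"] += 1       # field changed mid-transaction
    calls["n"] += 1
    if calls["n"] < 3:
        raise AbortTransaction()         # e.g. another program holds a lock


state = {"messages_waiting": 0}
attempts = run_transaction(state, flaky_work)
```

The point of the design is that the retry logic lives in exactly one place, so the nested processing code never has to know how to recover.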

On the live system my MWNS reported such occurrences (very rare on the operational system) and handled them correctly, but they were being caused by the main application, written in LINC (which generated COBOL), which simply hung and was almost certainly holding on to locks when it shouldn’t have.

I am currently using ChatGPT to write utilities for my own use in PureBasic. Some of the programs work first time or after minor mods, but then the AI model suggests more features and things can start to go awry the deeper we go.

So I entered the following request to ChatGPT:

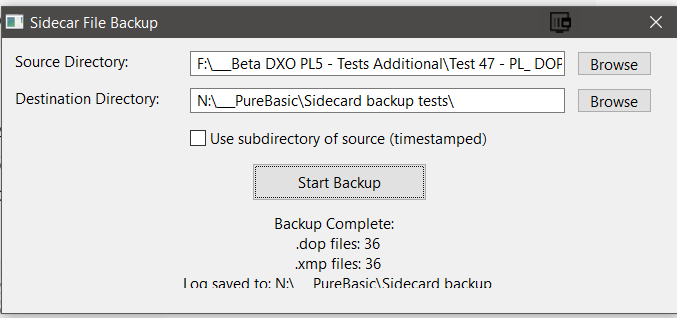

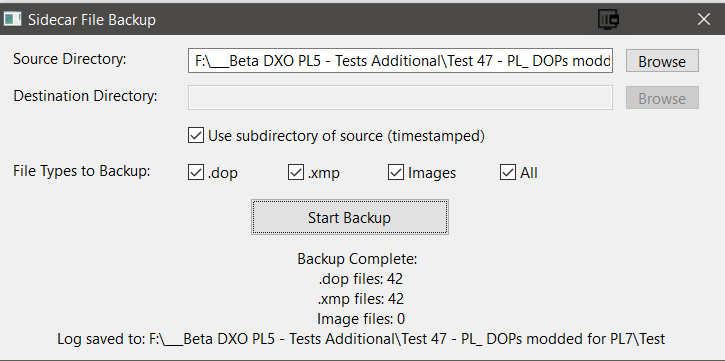

“Please write a PureBasic program to backup .dop and .xmp sidecar files from a user selected directory to a user selected location which is either a subdirectory of the directory being backed up or one selected by the user. Please output details containing the directory backed up and the backup location and counts of the number of .DOP files and the number of .xmp files backed up. When one backup operation is completed please prompt the user to either select another directory for backing up or terminate.”
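For readers who don’t use PureBasic, the request boils down to something like the following. This is a rough Python sketch of the task as worded in the request, not the code ChatGPT produced; the function names `backup_sidecars` and `prompt_loop`, and the default `backup` subdirectory name, are assumptions for illustration.

```python
import shutil
from pathlib import Path


def backup_sidecars(source, destination):
    """Copy .dop and .xmp files from source to destination.

    Returns a dict with the number of files copied per extension.
    Only the top level of source is scanned (no recursion).
    """
    source, destination = Path(source), Path(destination)
    destination.mkdir(parents=True, exist_ok=True)
    counts = {".dop": 0, ".xmp": 0}
    for item in sorted(source.iterdir()):
        ext = item.suffix.lower()        # treat .DOP and .dop alike
        if item.is_file() and ext in counts:
            shutil.copy2(item, destination / item.name)
            counts[ext] += 1
    return counts


def prompt_loop():
    """Keep asking for directories to back up until the user enters a blank line."""
    while True:
        source = input("Directory to back up (blank to quit): ").strip()
        if not source:
            break
        dest = input("Backup location (blank for a 'backup' subdirectory): ").strip()
        destination = Path(dest) if dest else Path(source) / "backup"
        counts = backup_sidecars(source, destination)
        print(f"Backed up {source} to {destination}")
        print(f".dop files: {counts['.dop']}, .xmp files: {counts['.xmp']}")
```

Calling `prompt_loop()` runs it interactively at the console; like the first working version described here, it has no proper GUI.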

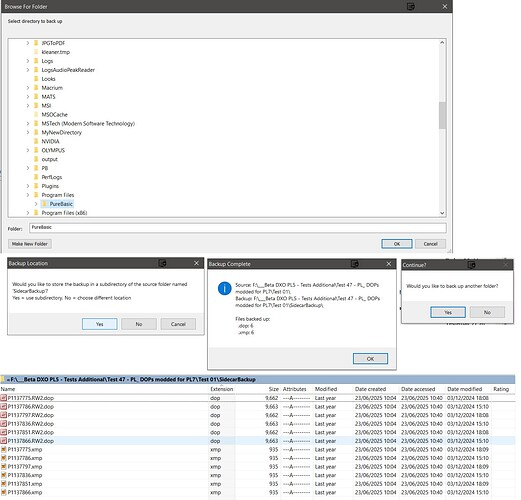

The first copy failed with a syntax error; ChatGPT seems to get confused by the various dialects of Basic that exist. The amended version worked fine, albeit without a proper GUI.

So you get

The next enhancement, using the existing UI, was to date/timestamp the backups, and then ChatGPT suggested:

" Let me know if you’d like to:

- Include a timestamped subfolder even for custom destination,

- Add log files inside the backup directory,

- Or do recursive backups including subdirectories.

Happy to help with all of that!"

and it had previously suggested:

" Let me know if you’d like:

- Recursive subdirectory traversal

- Logging to a file

- Progress bars or GUI version

I’m happy to extend it!"

So friendly! I will ask it(!?) to add the logging to the current version first and then ask for a GUI version!